Amazon Places Product (plus more from Microsoft & Meta)

Virtual product placements, smart fabrics & speech input for VR.

1. Amazon – virtual product placement

Amazon is looking into enabling virtual product placements within media content.

For example, imagine you’re watching a new Will Smith movie on Amazon Prime. As he lifts his hand to slap a comedian, his wrist could be an “insertion point” where a brand overlays its watch. If the viewer is a retro-loving software engineer, the wrist could display a funky new Casio. If the viewer is into luxury goods, the wrist could show an Audemars Piguet. Silly example, but you get the point.

Amazon will leverage its computer vision chops to detect opportunities in a piece of content for 2D/3D placements of products or branding. This will involve understanding the objects in a scene, how long they’re on screen, and the surface of each object. For specific objects (e.g. a bottle), Amazon could then keep an inventory of potential brands to show, depending on the viewer.
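
To make the selection step concrete, here is a minimal sketch of how a detected insertion point might be matched to a brand. Everything in it is an assumption for illustration: the `InsertionPoint` fields, the `INVENTORY` table, the viewer segments and the two-second threshold are invented here, not taken from Amazon's filing.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InsertionPoint:
    """A spot in a scene where a product could be overlaid (hypothetical shape)."""
    object_type: str          # what was detected, e.g. "wrist" or "bottle"
    seconds_on_screen: float  # how long the object stays visible
    surface: str              # e.g. "curved"; kept for the renderer, unused below


# Hypothetical inventory: object type -> viewer segment -> brand to show.
INVENTORY = {
    "wrist": {"retro": "Casio", "luxury": "Audemars Piguet"},
}


def choose_placement(point: InsertionPoint, viewer_segment: str,
                     min_seconds: float = 2.0) -> Optional[str]:
    """Return a brand for this viewer, or None if no placement fits."""
    # Skip objects that are not on screen long enough to be noticed.
    if point.seconds_on_screen < min_seconds:
        return None
    # Look up brands for this object type, then pick by viewer segment.
    return INVENTORY.get(point.object_type, {}).get(viewer_segment)


wrist = InsertionPoint("wrist", seconds_on_screen=3.0, surface="curved")
print(choose_placement(wrist, "retro"))   # Casio
print(choose_placement(wrist, "luxury"))  # Audemars Piguet
```

The hard part in practice would be the computer vision that produces the insertion points in the first place; the lookup itself is the easy bit.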

This is interesting to me because it ties Amazon Prime more tightly to Amazon’s core e-commerce business. Each video in Prime becomes new ad inventory (advertising is already a $32bn business for Amazon), as well as a potential purchasing moment when the product placement links to goods on Amazon’s marketplace.

Amazon could use this revenue to recoup some of the costs of acquiring the content, and to provide a new recurring revenue stream for the content creators.

Fascinating.

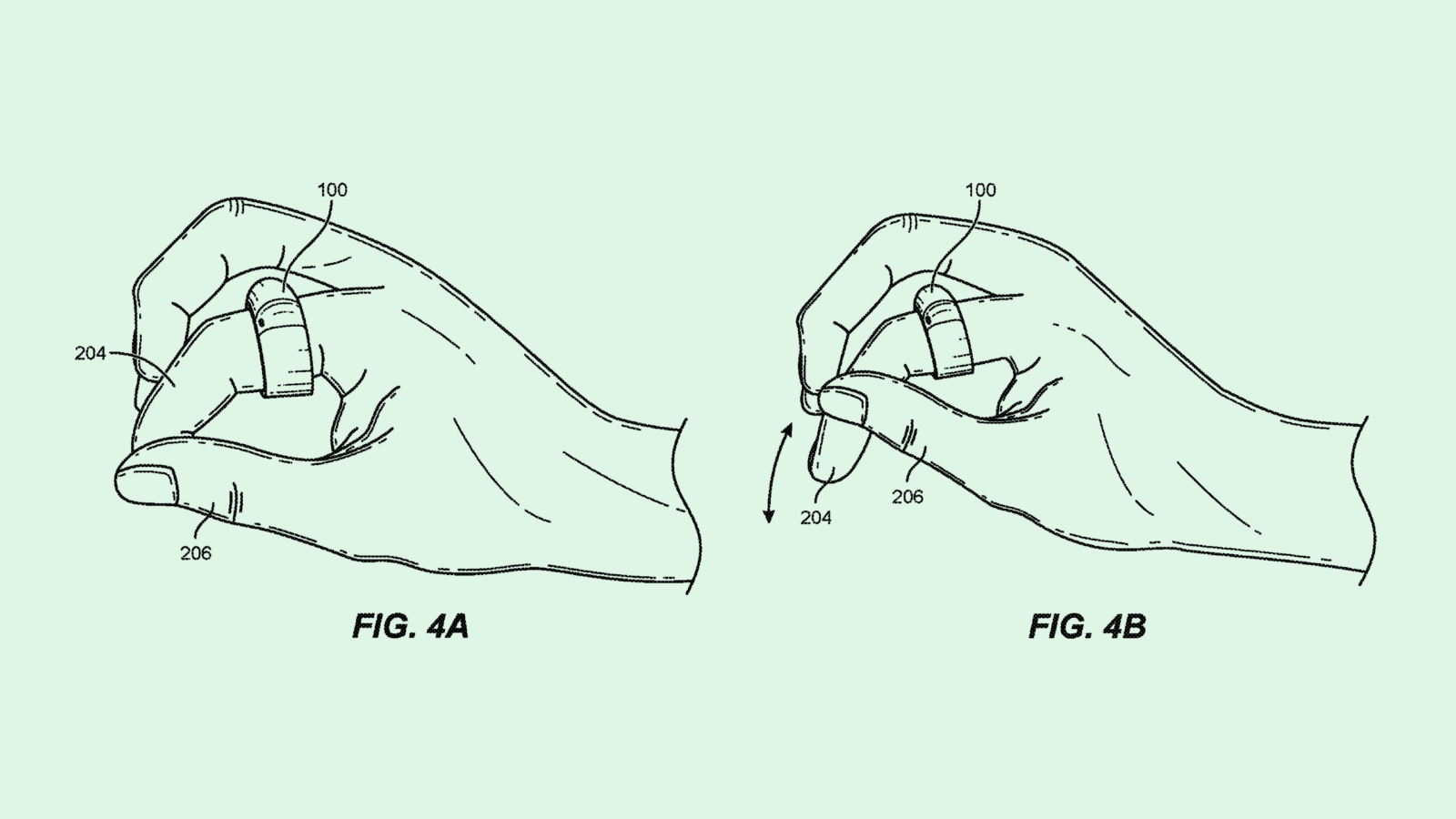

2. Microsoft – smart fabric that recognises touch & objects

“Smart fabrics” have long been a trendy topic, always on the verge of take-off but never quite getting there.

In fact, Microsoft has been exploring this for a while. In 2019, they revealed they were working on a smart tablecloth that could detect what food was on a table and then offer a drink recommendation. Generally useless, but the technological leaps required to make it happen will undoubtedly lead to something interesting.

In this latest patent filing, Microsoft is continuing to explore smart fabrics, but more as a new way for people to interact with computing systems.

Microsoft focuses specifically on smart fabric used in trouser pockets. The fabric could detect what objects are in a user’s pockets, and also detect hand gestures the user makes inside them.

Here are some of the use cases that Microsoft imagines:

- detecting that a user has forgotten an object (e.g. your keys or your wallet as you’re leaving your home)

- interacting with a computing system in a private way (i.e. your hands are in your pocket and therefore it’s a bit more discreet than holding a device in your hand) – meh

- enabling richer gestural interactions. For example, if a user presses on their pocket, they can feel how much pressure they’re applying as their finger pushes against their thigh. This would be useful when trying to interact in VR.

- contextual activity-tracking notifications – e.g. your hand is in your pocket in a social situation, so maybe try taking it out to look more confident

- cool VR experiences where your physical pocket mirrors your virtual pocket. Imagine a game has a physical replica of a sword and when you put it in your physical pocket, you also place your virtual sword into your player’s pocket.
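
The first use case above (forgotten keys or wallet) boils down to comparing what the fabric senses against what you normally carry. Here is a minimal sketch, assuming the fabric already reports a list of recognised object names; the function name, the `leaving_home` flag and the item labels are all hypothetical, not something described in Microsoft's filing.

```python
from typing import Iterable, List


def forgotten_items(expected: Iterable[str], detected: Iterable[str],
                    leaving_home: bool) -> List[str]:
    """Return the expected items missing from the pocket, sorted by name."""
    # Only nag the user at the moment it matters: when they are heading out.
    if not leaving_home:
        return []
    # Set difference: everything expected that the fabric did not detect.
    return sorted(set(expected) - set(detected))


usual = ["keys", "wallet", "phone"]
print(forgotten_items(usual, ["phone"], leaving_home=True))   # ['keys', 'wallet']
print(forgotten_items(usual, ["phone"], leaving_home=False))  # []
```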

Generally, wearables moving beyond accessories and into clothing will result in more data points being measured, and newer gestural interactions with other computing devices. Something to watch.

3. Meta – speech input for VR

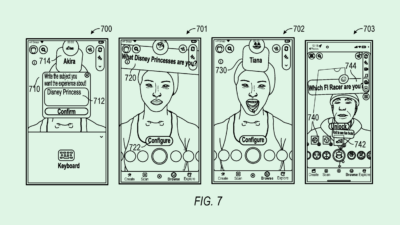

Meta is looking to enable users to provide speech inputs to control artificial reality environments.

In their patent application, Meta admit that controlling virtual reality environments through controllers can be slow and difficult. Meta’s proposed solution is to combine speech input with additional data, including the direction of the user’s gaze, to correctly understand what the user means.

In the above example, a user might be wearing a headset looking at their living room. If they were to then say, “Add a dining table with two chairs to the right of the sofa”, Meta would understand that the user wants to add the dining table to the existing scene that includes a sofa.
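
As a toy illustration of the "relative to the sofa" idea, the sketch below turns that sentence into an item and a position in the scene. It is a sketch under loud assumptions: the text is taken as already transcribed, a single regular expression stands in for real language understanding, gaze is ignored, and the `scene` coordinates and 1.5 m offsets are made up. None of this comes from Meta's filing.

```python
import re
from typing import Dict, Optional, Tuple

Position = Tuple[float, float]  # (x, z) in metres on the floor plane

# Hypothetical convention: +x is to the right of the anchor object.
OFFSETS: Dict[str, Position] = {"right": (1.5, 0.0), "left": (-1.5, 0.0)}

PATTERN = re.compile(
    r"add (?:a |an )?(?P<item>.+?) to the (?P<side>left|right) of the (?P<anchor>\w+)",
    re.IGNORECASE,
)


def place_from_speech(utterance: str,
                      scene: Dict[str, Position]) -> Optional[Tuple[str, Position]]:
    """Return (item, position) for a recognised command, else None."""
    match = PATTERN.search(utterance)
    if match is None:
        return None
    anchor = match.group("anchor").lower()
    if anchor not in scene:
        return None  # the user referred to an object that is not in the scene
    ax, az = scene[anchor]
    dx, dz = OFFSETS[match.group("side").lower()]
    return match.group("item"), (ax + dx, az + dz)


scene = {"sofa": (2.0, 3.0)}
command = "Add a dining table with two chairs to the right of the sofa"
print(place_from_speech(command, scene))
# ('dining table with two chairs', (3.5, 3.0))
```

If there were two sofas in the room, this is where the gaze direction would earn its keep: it could pick which sofa the user means.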

In this filing, Meta explicitly looks at how speech could be used to manipulate and control objects in relation to one another – e.g. the dining table relative to the sofa. But more generally, speech interfaces could be a big unlock in VR. At the very least, they would be an improvement on the pretty clunky typing experience.