Microsoft Gloves Up (plus more from Nvidia & Snap)

AR ads, digital gloves & robot grasps

1. Microsoft – digital gloves that provide haptic feedback

In the first issue of Patent Drop, we looked at Apple dreaming up new input devices in the form of gloves. Microsoft is exploring something similar: gloves that act as an input device and provide haptic feedback.

Microsoft’s gloves have sensors that detect the wearer’s hand pose and the pressure being exerted, plus haptics that provide feedback to the wearer.

Two main use cases are explored in the filing. The first is the digital glove being a way of augmenting the use of existing input devices. For example, if a user wore the digital glove while interacting with a computer mouse, the mouse could now detect ‘pressure data’ from the glove and different applications could utilize this additional parameter in different ways.

The second use case hinted at in the filing is using the glove data for AR / VR applications. These systems would combine gaze tracking data with glove data. For instance, if you’re looking at an object on the screen and then clench your fist, Microsoft’s AR / VR system would deduce that you want to apply an action to that specific object.
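To make the gaze-plus-glove idea concrete, here is a minimal sketch of how the two signals might be combined. Nothing here comes from the filing: the class names, the per-finger "curl" values, the 0.8 threshold and the "grab" action are all assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class GazeSample:
    # Hypothetical: ID of the object the user is looking at, or None.
    target_id: Optional[str]


@dataclass
class GloveSample:
    # Hypothetical: per-finger curl, 0.0 (fully open) to 1.0 (fully closed).
    finger_curl: List[float]


# Assumed threshold above which a finger counts as "closed".
FIST_CURL_THRESHOLD = 0.8


def is_fist(glove: GloveSample) -> bool:
    """Treat the hand as a fist when every finger is curled past the threshold."""
    return bool(glove.finger_curl) and all(
        curl >= FIST_CURL_THRESHOLD for curl in glove.finger_curl
    )


def resolve_action(gaze: GazeSample, glove: GloveSample) -> Optional[Tuple[str, str]]:
    """Return (action, target) only when the user is both looking at an
    object and clenching a fist; otherwise return None."""
    if gaze.target_id is None or not is_fist(glove):
        return None
    return ("grab", gaze.target_id)
```

In this sketch, neither signal triggers anything on its own: a fist with no gaze target, or a gaze target with an open hand, resolves to no action.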

Digital gloves could be a killer unlock for VR / AR use-cases. They would give users greater flexibility and freedom in both consumer and enterprise applications, while haptic feedback would make virtual worlds feel more alive.

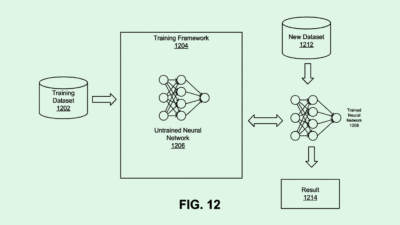

2. Nvidia – machine learning of grasp poses in cluttered environments

Nvidia is exploring how robots can figure out how to grasp objects in busy, cluttered, unstructured environments.

Most robots today are used on highly structured assembly lines. This filing, however, shows that Nvidia is looking to bring robots into our messier, unstructured world.

Some of the methods used include:

- a depth camera that identifies objects and potential grasp contact points

- identifying the 3D models of objects, where the ML model has been trained on lots of identified 3D objects

- being able to operate and make reasonable assumptions of potential grasp points for objects whose 3D models can’t be obtained

- taking into account surrounding objects, so that a robot can try to grasp an object with minimal disturbance to the other objects
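As a rough illustration of the last point, the sketch below picks a grasp by trading off a grasp-quality score against how close the grasp point sits to neighbouring objects. This is not Nvidia's method: the quality score stands in for the output of some learned model, and the 5 cm safe distance, the penalty weight and all names are assumptions chosen for the example.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float, float]  # x, y, z in metres


@dataclass
class GraspCandidate:
    position: Point
    # Hypothetical score in [0, 1], e.g. from a learned grasp model.
    quality: float


def clearance(position: Point, obstacles: List[Point]) -> float:
    """Distance from the grasp point to the nearest surrounding object."""
    if not obstacles:
        return math.inf
    return min(math.dist(position, obstacle) for obstacle in obstacles)


def score(candidate: GraspCandidate, obstacles: List[Point],
          safe_distance: float = 0.05, penalty_weight: float = 1.0) -> float:
    """Grasp quality minus a penalty that grows as the grasp point gets
    closer than safe_distance to a neighbouring object."""
    gap = clearance(candidate.position, obstacles)
    crowding = max(0.0, 1.0 - gap / safe_distance)
    return candidate.quality - penalty_weight * crowding


def pick_grasp(candidates: List[GraspCandidate],
               obstacles: List[Point]) -> GraspCandidate:
    """Choose the candidate with the best quality-versus-disturbance trade-off."""
    return max(candidates, key=lambda c: score(c, obstacles))
```

With an empty scene the highest-quality grasp wins; once a neighbouring object sits right next to that grasp point, a slightly lower-quality but less crowded grasp can score higher.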

What does a world look like with robots that are able to interact with objects safely in messy, real-world environments? My opinion is that the first step involves a lot more human-robot collaboration – especially for jobs that are dangerous or extremely physically demanding.

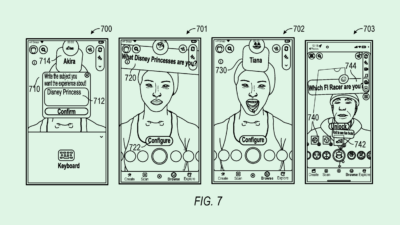

3. Snap – home based AR shopping

Normally, AR commerce experiences involve a user selecting what item they wish to ‘see’, opening the camera, and then positioning and resizing the digital item in their real world environment.

Snap wants to switch up the flow so that when users open their camera, they are automatically recommended AR elements to display, which they can then purchase as physical or digital items.

This is potentially interesting because Snap turns a user’s camera into a canvas for displaying ads, and the ads are in theory contextually relevant to the environment – e.g. if you’re pointing the camera at your room, seeing a piece of artwork on the wall that you could purchase is pretty cool.

By ‘owning’ the camera, Snap has the potential, in theory, to become a new ad platform via AR. And if Snap wins the AR glasses battle, it would own a user’s physical environment in a deeper way, making AR ads an even bigger play.