Adobe Gives Fashion Advice (plus more from Nvidia & EA)

Personalized fashion recommendations, machine vision in games & smart cars.

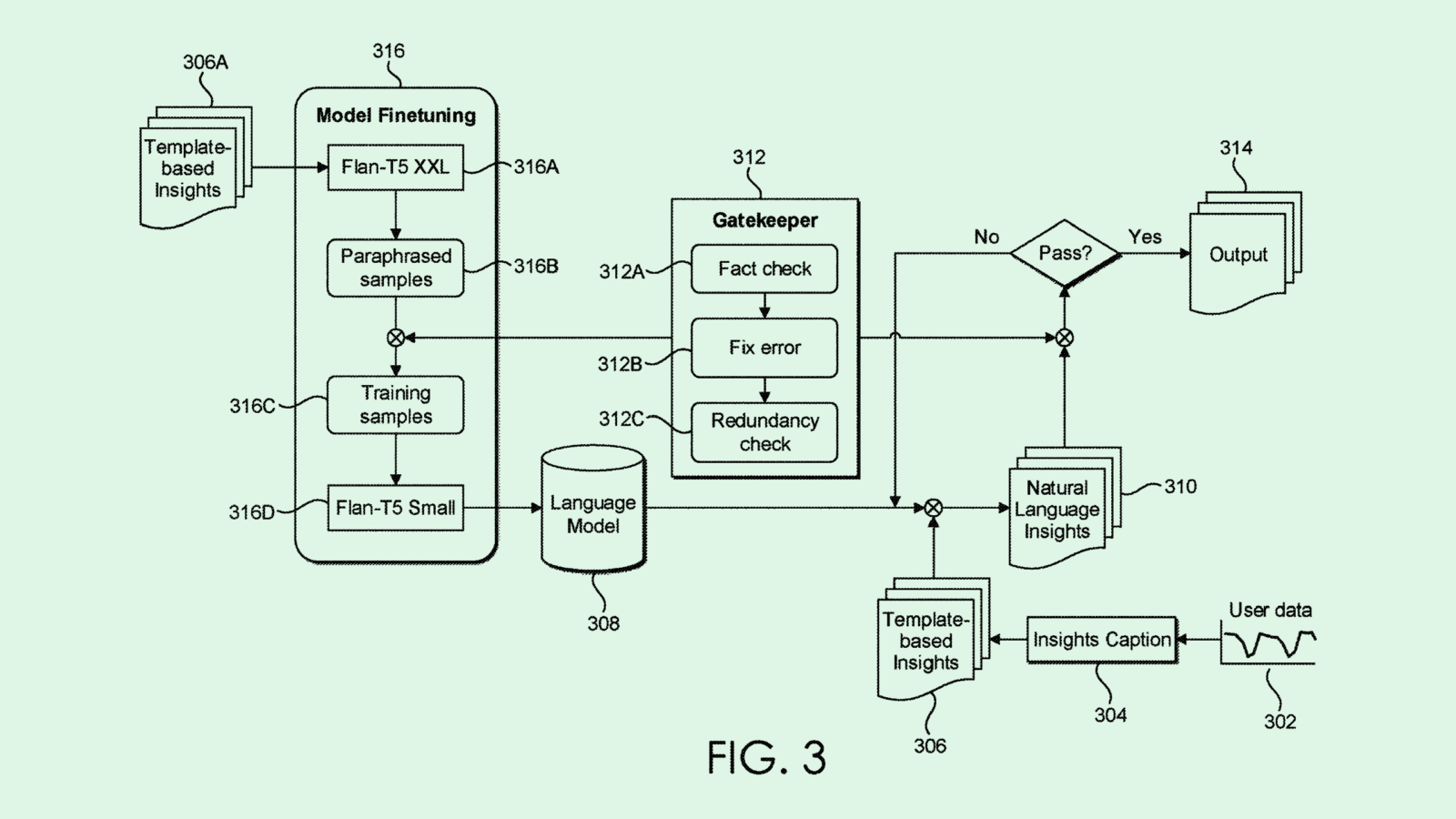

1. Adobe – personalised fashion recommendation system

Fashion recommendation systems suffer from a few core problems.

Firstly, because fashion trends change so quickly, recommendation systems usually don’t have enough data on items from new trends to recommend them well.

Secondly, a lot of existing recommendation systems rely on semantic descriptions rather than visual signals to determine what’s fashionable. But words alone can only go so far in capturing whether an item is fashionable.

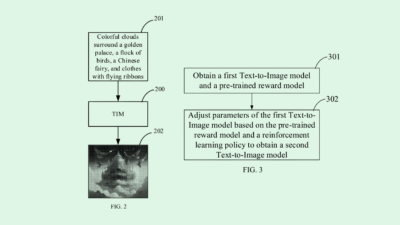

In this patent application, Adobe reveals that it’s looking at using deep-learning methods that analyse the visuals of fashion items in order to make personalized recommendations to users.

The model will work by studying user behaviour (e.g. which items a user clicks on or spends more time looking at) and then extracting both the ‘latent’ features of the items capturing that user’s attention and the latent features of the user themselves. It will then recommend the items whose features best match a given user, i.e. the ones they are likely to find most interesting.
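To make the matching step concrete, here’s a minimal Python sketch. It is not Adobe’s method: it assumes the items’ latent features already exist as vectors produced by some image model (the item names and hand-written 3-d vectors below are hypothetical), estimates a user’s latent vector as the dwell-time-weighted average of the items they looked at, and ranks items they haven’t seen by cosine similarity to that vector.

```python
import math

# Hypothetical latent item features. In a real system these would come from a
# deep model run over product images; here they are hand-written 3-d vectors.
ITEM_EMBEDDINGS = {
    "floral_dress": [0.9, 0.1, 0.0],
    "summer_skirt": [0.8, 0.2, 0.1],
    "leather_jacket": [0.1, 0.9, 0.3],
    "biker_boots": [0.0, 0.8, 0.5],
    "linen_shirt": [0.7, 0.1, 0.3],
}


def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def user_embedding(dwell_seconds):
    """Estimate a user's latent vector as the dwell-time-weighted mean of the
    embeddings of the items they looked at (a simplifying assumption)."""
    dim = len(next(iter(ITEM_EMBEDDINGS.values())))
    total = sum(dwell_seconds.values())
    vec = [0.0] * dim
    if not total:
        return vec
    for item, secs in dwell_seconds.items():
        for i, value in enumerate(ITEM_EMBEDDINGS[item]):
            vec[i] += value * secs / total
    return vec


def recommend(dwell_seconds, k=2):
    """Rank items the user hasn't interacted with by similarity to their vector."""
    user = user_embedding(dwell_seconds)
    candidates = [item for item in ITEM_EMBEDDINGS if item not in dwell_seconds]
    candidates.sort(key=lambda item: cosine(user, ITEM_EMBEDDINGS[item]), reverse=True)
    return candidates[:k]


# A user who lingered on the floral dress and only glanced at the jacket.
print(recommend({"floral_dress": 40, "leather_jacket": 5}))
# → ['summer_skirt', 'linen_shirt']
```

Averaging clicked-item vectors is the crudest possible way to get a user representation; a production system would more likely learn user and item factors jointly from the interaction data.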

Judging by Adobe’s patents over the last year, the company is doing a lot of work on AI, specifically using deep learning to understand audio, video and photos. On the one hand, this could help improve its existing creator tools. But with something like this fashion recommendation algorithm, perhaps Adobe is looking to turn its deep understanding of images into AI models that can be licensed to other companies, e.g. an e-commerce company selling clothes.

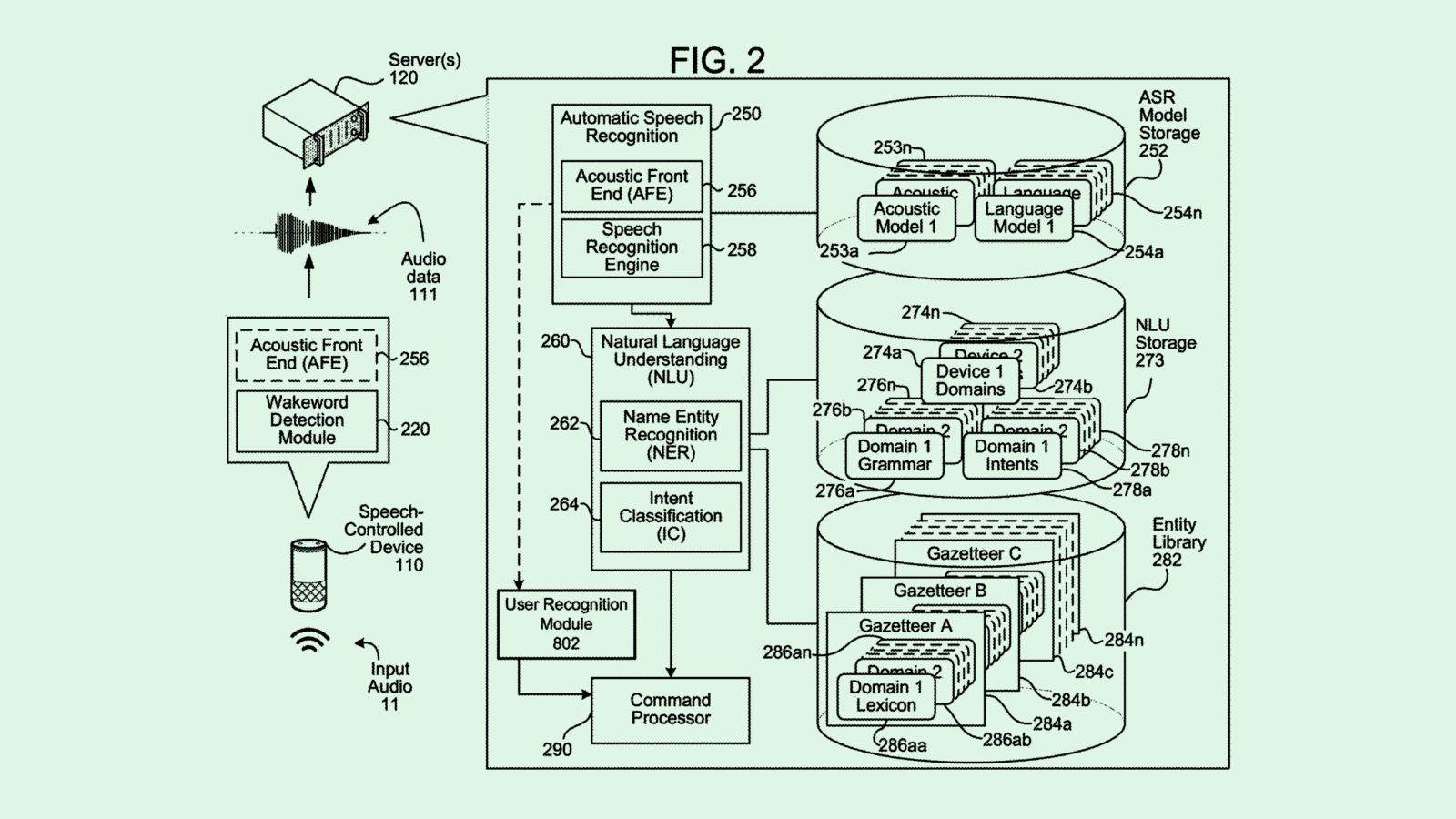

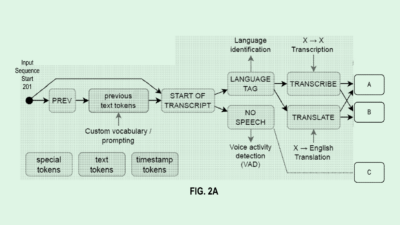

2. Nvidia – speech & image recognition for occupant commands in a car

Nvidia has already been looking at using gaze detection to determine where a driver is looking and then matching this with any voice instructions they give. For instance, if a driver looks towards the sound system and says “turn it up”, Nvidia’s system will detect that the driver is referring to the volume of the entertainment system.

In this filing, Nvidia goes a step further, exploring how speech and image recognition could let occupants give voice commands in a car.

For instance, if the driver said “lower Sally’s window”, Nvidia’s system would use cameras and image detection to work out where Sally is sitting, and then lower the window next to her seat.

Over time, Nvidia would want to build a system that captures vehicle data and learns about a car’s occupants and their preferences. This includes creating audio fingerprints so that a speaker can be recognised purely by their voice. For example, some commands should only be carried out if the driver is the one giving them.
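Here’s a rough Python sketch of how gaze, seat recognition and voice identity might combine once perception has done its work. Everything in it is an assumption rather than Nvidia’s design: the `CabinState` structure, the seat labels, the action names and the `DRIVER_ONLY` policy are hypothetical, and speech recognition is assumed to have already turned the utterance into an action plus an optional target.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical policy: commands only the recognised driver may issue.
DRIVER_ONLY = {"unlock_doors", "start_engine"}


@dataclass
class CabinState:
    """Assumed perception outputs for one moment in the cabin."""
    seats: Dict[str, Optional[str]]    # seat label -> occupant recognised by cameras
    speaker: str                       # occupant matched by the voice fingerprint
    gaze_target: Optional[str] = None  # device the speaker is looking at, if any


def resolve_window(state: CabinState, name: str) -> Optional[str]:
    """Map "lower <name>'s window" to the window next to that person's seat."""
    for seat, occupant in state.seats.items():
        if occupant == name:
            return f"{seat}_window"
    return None


def execute(state: CabinState, action: str, target: Optional[str] = None) -> str:
    # Voice identity gates sensitive commands.
    if action in DRIVER_ONLY and state.speaker != state.seats.get("driver"):
        return "refused: driver-only command"
    if action == "lower_window":
        window = resolve_window(state, target) if target else None
        return f"lowering {window}" if window else f"no seat found for {target}"
    if action == "turn_up":
        # "Turn it up" names no device, so fall back to what the speaker is looking at.
        device = target or state.gaze_target
        return f"turning up {device}" if device else "ambiguous: no target"
    return "unknown command"


cabin = CabinState(
    seats={"driver": "Alex", "front_passenger": None, "rear_left": "Sally", "rear_right": None},
    speaker="Alex",
    gaze_target="sound_system",
)
print(execute(cabin, "lower_window", "Sally"))  # lowering rear_left_window
print(execute(cabin, "turn_up"))                # turning up sound_system
```

Keeping perception (who sits where, who is speaking, where they are looking) separate from the command logic means the same resolver works whether those signals come from cameras, microphones or both.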

What’s interesting about this play is how many data points a car fitted with sensors and microphones could capture: for example, who is in a person’s household, who makes up the household’s close social network (e.g. the friends who ride in the car from time to time), or what they talk about.

3. EA – machine vision to make virtual sports games more realistic

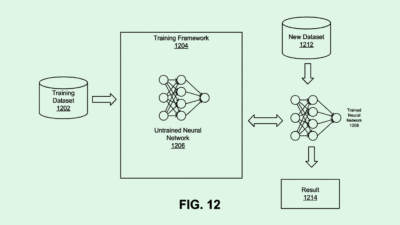

EA (developers of FIFA) is looking at using machine vision to improve the reliability of its games.

It’s going to do this by comparing sporting events in a game’s virtual world with data from real-world sporting events.

For example, a machine vision model could analyse gameplay footage, compare it with real-world footage, and flag moments that appear to break the laws of physics. This could help game developers quickly identify and debug issues in gameplay.
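A minimal Python sketch of that physics check, under heavy assumptions and not EA’s method: it supposes an upstream vision tracker has already turned both gameplay and real-world footage into per-frame (x, y) positions, in metres, of an object such as the ball. It then flags gameplay frames whose implied acceleration is far beyond anything seen in the real footage. The function names and the `margin` parameter are hypothetical.

```python
import math


def accelerations(positions, dt):
    """Finite-difference acceleration magnitudes (m/s^2) for frames 1..n-2
    of a tracked object, given (x, y) positions sampled every dt seconds."""
    accs = []
    for i in range(1, len(positions) - 1):
        (x0, y0), (x1, y1), (x2, y2) = positions[i - 1], positions[i], positions[i + 1]
        ax = (x2 - 2 * x1 + x0) / dt ** 2
        ay = (y2 - 2 * y1 + y0) / dt ** 2
        accs.append(math.hypot(ax, ay))
    return accs


def flag_anomalies(game_positions, real_tracks, dt, margin=1.5):
    """Return gameplay frame indices whose acceleration exceeds the largest
    acceleration observed in real footage by more than `margin` times."""
    limit = max(max(accelerations(track, dt)) for track in real_tracks) * margin
    game_accs = accelerations(game_positions, dt)
    # accelerations() starts at frame 1, so shift list indices by one.
    return [i + 1 for i, a in enumerate(game_accs) if a > limit]


dt = 0.1  # 10 samples per second
# Real footage: a ball in free flight (about 9.8 m/s^2 downwards).
real = [(0, 0.0), (1, 0.451), (2, 0.804), (3, 1.059), (4, 1.216)]
# Gameplay: a ball that suddenly "teleports" between frames 3 and 4.
game = [(0, 0), (1, 0), (2, 0), (3, 0), (8, 0), (9, 0), (10, 0)]
print(flag_anomalies(game, [real], dt))
# → [3, 4]
```

Using real footage to set the threshold, rather than a hard-coded physical constant, is what ties the check back to the filing’s idea of comparing the game against real-world events.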

Moreover, with a near-endless supply of real-world footage online, machine vision could make it much easier to develop virtual games that display realistic human behaviour. For instance, in the filing, EA mentions that real-world footage of a player celebrating a Stanley Cup win could be used to automatically create a similar scene in the virtual world.

As the metaverse begins to take shape and we build increasingly complex virtual worlds, machine vision will become an essential tool for mimicking the real world in game environments quickly and cheaply. It’s fascinating to see EA apply this in the context of sports games.