OpenAI Patent Could Improve Voice AI Models’ Focus

OpenAI wants to work on its active listening skills.


OpenAI wants to hear you better.

The company is seeking to patent a system for “multi-task automatic speech recognition.” OpenAI’s patent details a voice-activated AI model that’s able to handle tasks in several different languages.

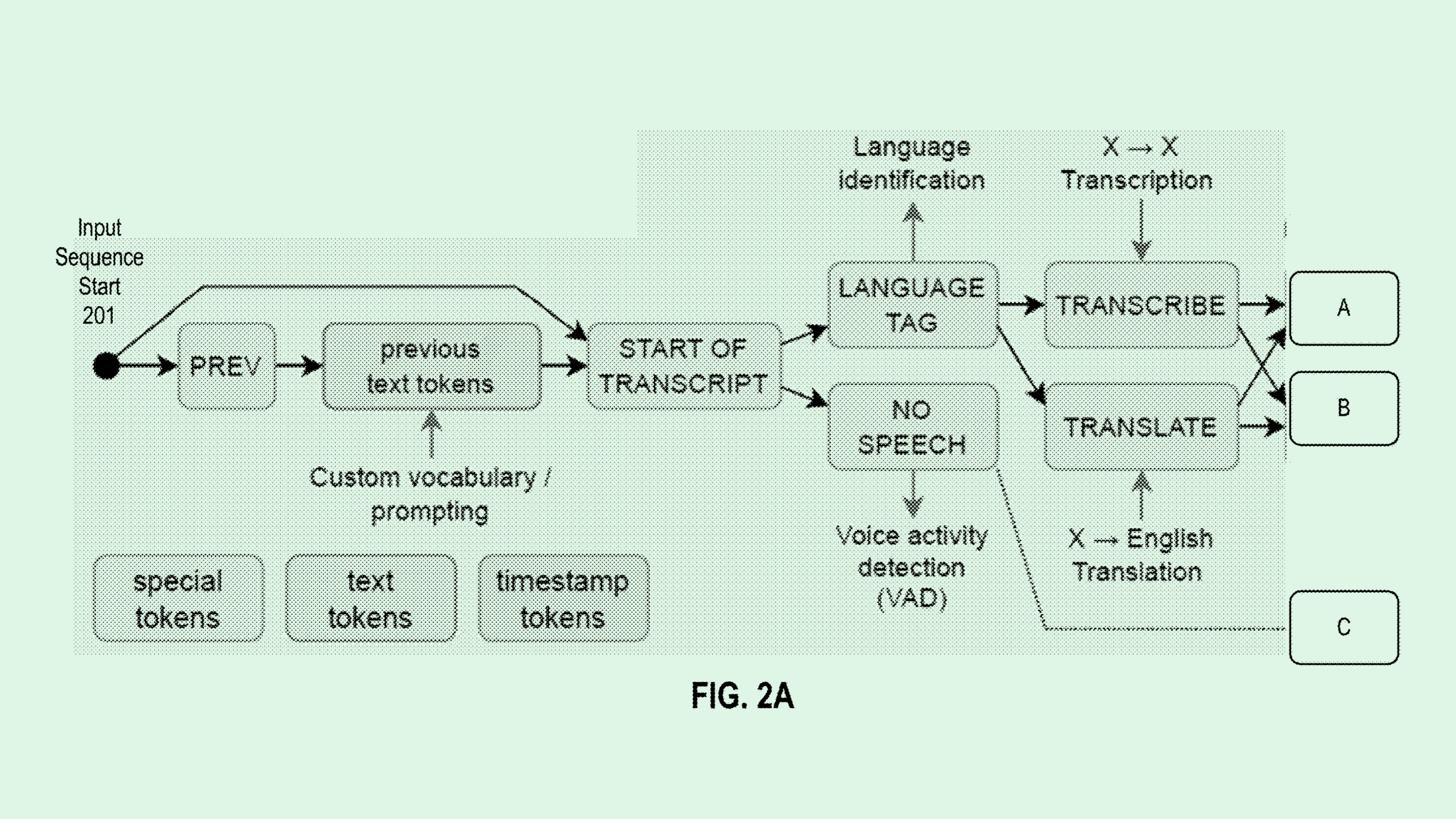

OpenAI’s tech uses a transformer model, which learns context by tracking relationships within sequences of data, outfitted with an encoder and a decoder that process streams of audio and turn them into text. The decoder is configured to pick up a “language token,” which specifies the target language for translation, as well as a “task token,” which specifies what the model should do with the audio stream.
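To make the token scheme concrete, here is a minimal Python sketch of how a decoder prompt might be assembled before any text is generated. The token spellings (`<|startoftranscript|>`, `<|fr|>`, `<|translate|>`, `<|notimestamps|>`) follow the format of OpenAI’s open-source Whisper release and are an assumption here; the patent’s actual vocabulary may differ, and `build_decoder_prefix` and the language subset are hypothetical names chosen for illustration.

```python
# Hypothetical sketch: token spellings mirror the open-source Whisper release;
# the patent's actual vocabulary may differ.
SUPPORTED_LANGUAGES = {"en", "es", "fr", "de", "ja"}  # illustrative subset only
TASKS = {"transcribe", "translate"}


def build_decoder_prefix(language: str, task: str, timestamps: bool = False) -> list[str]:
    """Return the special tokens that condition the decoder before it emits text."""
    if language not in SUPPORTED_LANGUAGES:
        raise ValueError(f"unsupported language: {language}")
    if task not in TASKS:
        raise ValueError(f"unsupported task: {task}")
    # Start marker, then the language token, then the task token.
    prefix = ["<|startoftranscript|>", f"<|{language}|>", f"<|{task}|>"]
    if not timestamps:
        # Tell the decoder to skip timestamp tokens in its output.
        prefix.append("<|notimestamps|>")
    return prefix


print(build_decoder_prefix("fr", "translate"))
# ['<|startoftranscript|>', '<|fr|>', '<|translate|>', '<|notimestamps|>']
```

In this framing, the decoder predicts text conditioned on the prefix, so one model can serve several tasks and languages by changing only a few tokens.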

Additionally, the transformer model is configured to understand “special-purpose tokens,” which guide it to complete specific tasks, and “timestamp tokens,” which time-align audio to text.
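Timestamp tokens can be sketched the same way. The snippet below assumes Whisper-style behavior, in which times are snapped to 20-millisecond steps within a 30-second audio window and each text segment is bracketed by a start and an end timestamp token. The helper names `timestamp_token` and `parse_segments` are hypothetical, and the patent may encode time differently.

```python
import re

# Assumed Whisper-style parameters; not confirmed by the patent filing.
STEP_CENTISECONDS = 2   # one timestamp step = 0.02 s
WINDOW_SECONDS = 30.0   # timestamps only cover one 30-second chunk


def timestamp_token(seconds: float) -> str:
    """Snap a time to the nearest 20 ms step and render it as a token."""
    if not 0.0 <= seconds <= WINDOW_SECONDS:
        raise ValueError("timestamp outside the audio window")
    steps = round(seconds * 100 / STEP_CENTISECONDS)
    centis = steps * STEP_CENTISECONDS  # integer math avoids float formatting drift
    return f"<|{centis // 100}.{centis % 100:02d}|>"


# A segment is "<|start|> text <|end|>"; lazy matching stops at the first closing token.
SEGMENT = re.compile(r"<\|(\d+\.\d{2})\|>(.*?)<\|(\d+\.\d{2})\|>")


def parse_segments(decoded: str) -> list[tuple[float, float, str]]:
    """Turn a decoded token string into (start_s, end_s, text) tuples."""
    return [
        (float(start), float(end), text.strip())
        for start, text, end in SEGMENT.findall(decoded)
        if text.strip()  # skip segments that contain no text
    ]


decoded = "<|0.00|> Hello there.<|1.50|><|1.50|> How are you?<|3.20|>"
print(timestamp_token(1.234))   # <|1.24|>
print(parse_segments(decoded))  # [(0.0, 1.5, 'Hello there.'), (1.5, 3.2, 'How are you?')]
```

Pairing each span of text with the tokens on either side is what lets the output be time-aligned to the original audio.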

“There are many different tasks that can be performed on the same input audio signal: transcription, such as translation, voice activity detection, time alignment, and language identification,” OpenAI said in the filing.

This system helps the model learn the relationships between audio snippets and their corresponding text, making it more efficient at translation and other tasks, while the special tokens hone the model’s skills for specific contexts.

Voice-operated AI has become a priority for OpenAI. The company unveiled its advanced voice mode back in May with the announcement of GPT-4o, and released the feature to an invite-only group in July before opening it up to a wider audience at the end of September.

Advanced voice mode improves on the standard voice mode: it can handle interruptions and interpret emotion in a user’s voice. Earlier this month, the company also unveiled several new tools that can fast-track the development of voice assistants using a single set of instructions.

However, the company has run into problems with some of its speech recognition tech. Researchers told the Associated Press that OpenAI’s transcription model Whisper has reportedly had major issues with hallucinations, which is particularly concerning given the model’s use in healthcare settings.

“It’s an absolute nightmare to have a medical translation tool hallucinate,” said Bob Rogers, Ph.D., the co-founder of BeeKeeperAI and CEO of Oii.ai. “The last thing you want to do is try and push out mission-critical applications and technology that’s not ready for primetime.”

But the tech in this patent (one of the very few filed by OpenAI) could be a “first step” toward making speech recognition models more robust, said Rogers. Having a one-size-fits-all approach to AI may work for some models, but for those used in critical applications, context is often key, he said. “This idea of focusing and creating tokens that control context could be a good start,” Rogers said.

Plus, a major issue with “far-ranging” models is the domino effect that can occur as they learn and grow. “You change things in one place and you get impacts in others — it’s really hard to control,” Rogers said. “Maybe focusing helps with that as well.”