OpenAI Adds to its Small Pool of Patent Filings

Because of the massive head start that bigger tech firms have, OpenAI may “find themselves boxed out.”

OpenAI wants to streamline model training.

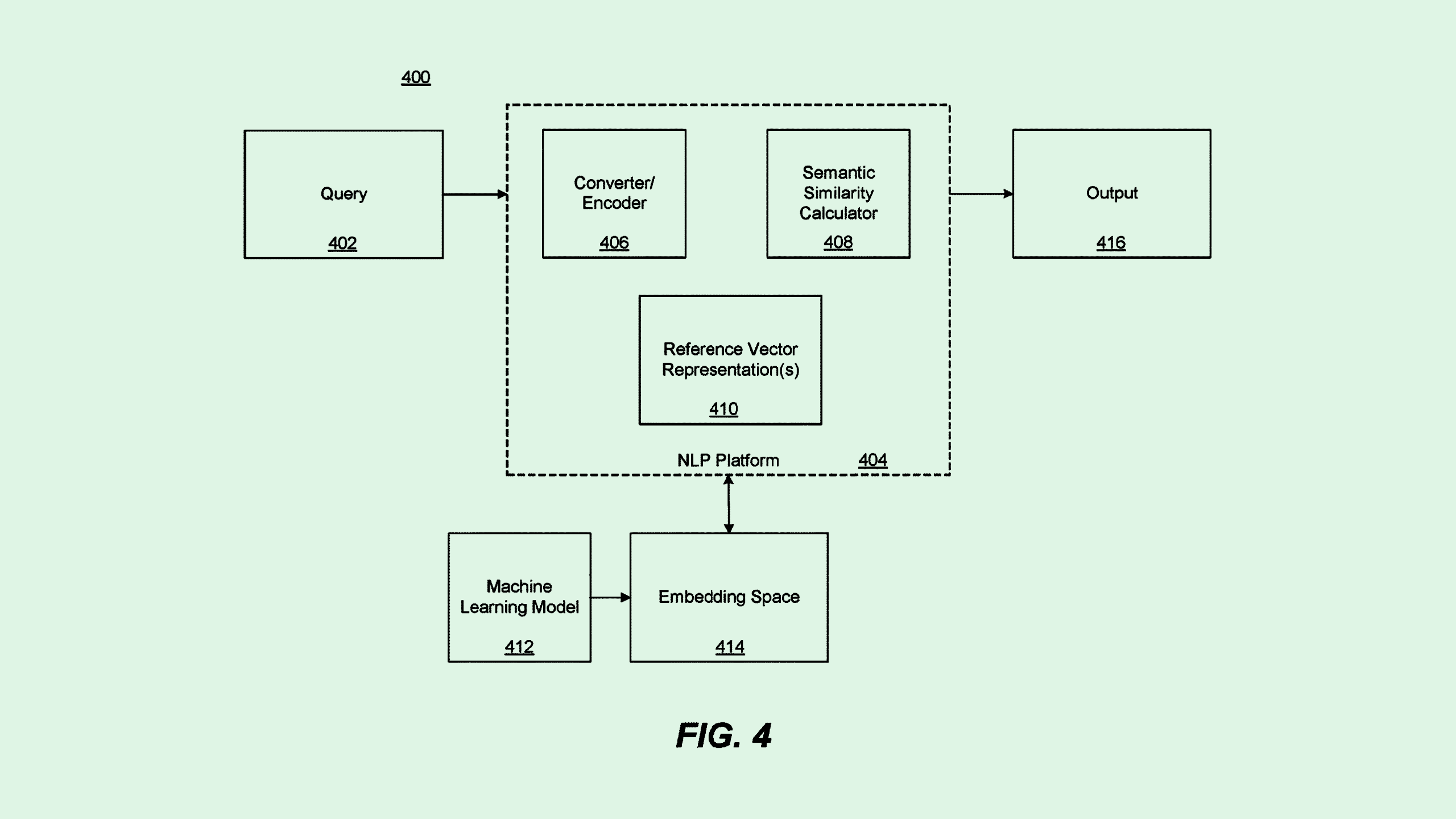

The company filed a patent application for a way to use “contrastive pre-training” to generate text and code embeddings. To put it simply, OpenAI’s filing details a way to train a model on similar and dissimilar pairs of data in order to improve its accuracy.

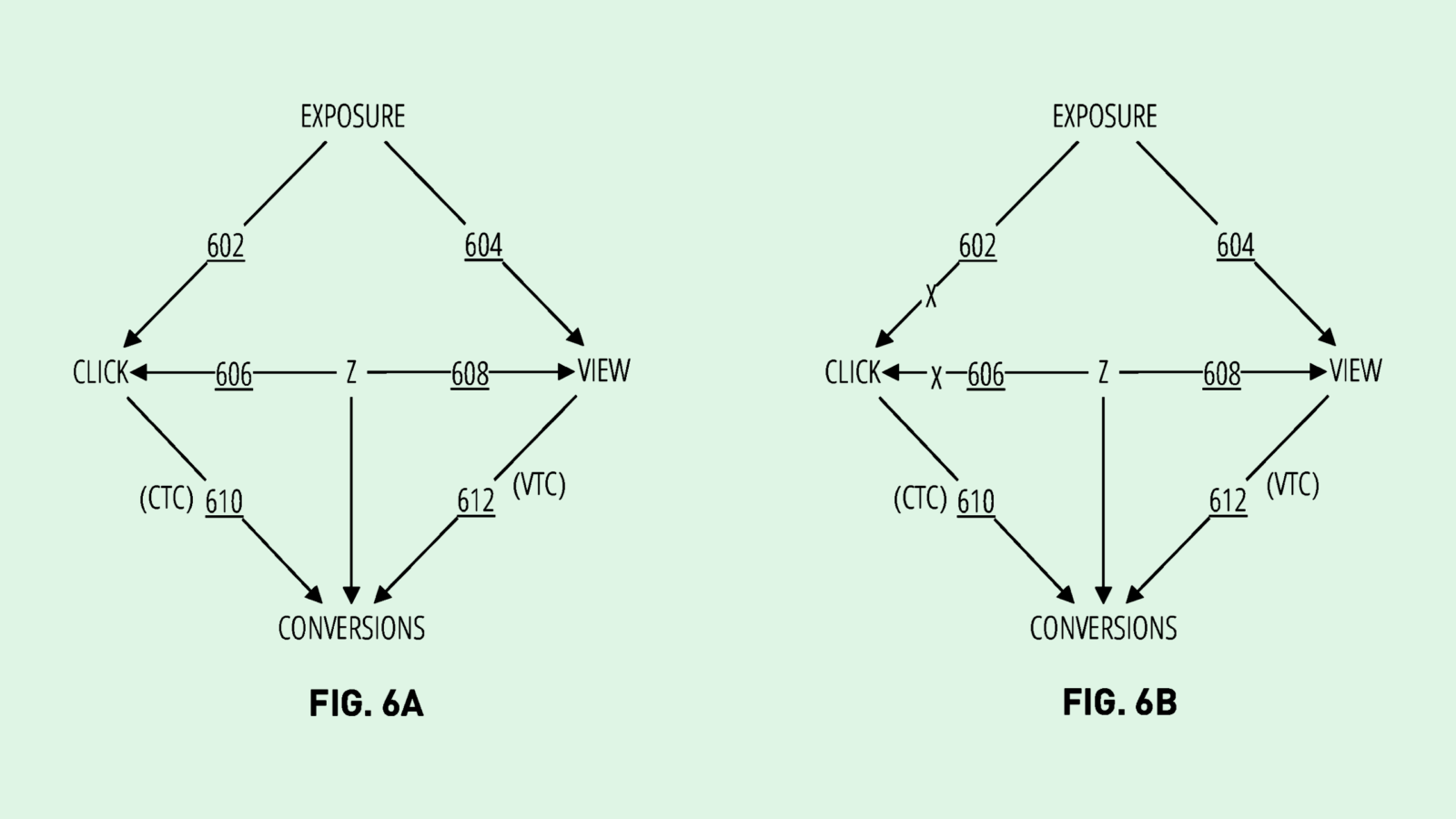

First, the system receives datasets made up of several “positive” data pairs (semantically similar to one another) and “negative” data pairs (different from one another). It then converts those pairs into “vector representations,” which capture the essential features of a data point in a way that’s easier for a machine learning model to understand.

These vector representations are used to train an AI model to generate more vector representations; it can thereby effectively learn the similarities and differences between data points. The model is also trained to generate a similarity score between the data points.
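As a rough illustration of the idea, here is a minimal Python sketch of a similarity score and a contrastive training objective. The article does not spell out which loss the filing uses, so the InfoNCE-style loss with in-batch negatives below is an assumption based on a common formulation. The function names and the toy two-dimensional vectors are hypothetical and stand in for the output of a real encoder.

```python
import math


def cosine_similarity(u, v):
    """Similarity score between two vectors, in the range [-1, 1]."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def contrastive_loss(anchors, candidates, temperature=0.1):
    """InfoNCE-style loss (an assumed formulation, not taken from the filing).

    anchors[i] and candidates[i] form a positive pair; every other
    candidate in the batch is treated as a negative for anchors[i].
    """
    total = 0.0
    for i, anchor in enumerate(anchors):
        logits = [cosine_similarity(anchor, c) / temperature for c in candidates]
        # Numerically stable log-sum-exp over all candidates.
        max_logit = max(logits)
        log_sum_exp = max_logit + math.log(
            sum(math.exp(x - max_logit) for x in logits)
        )
        # Cross-entropy with the positive candidate as the correct class.
        total += log_sum_exp - logits[i]
    return total / len(anchors)


# Toy vectors standing in for encoder outputs (hypothetical values).
anchors = [[1.0, 0.0], [0.0, 1.0]]
aligned = [[0.9, 0.1], [0.1, 0.9]]   # positives sit close to their anchors
swapped = [[0.1, 0.9], [0.9, 0.1]]   # positives sit far from their anchors

loss_aligned = contrastive_loss(anchors, aligned)
loss_swapped = contrastive_loss(anchors, swapped)
print(loss_aligned < loss_swapped)
```

The loss is low when each positive pair scores higher than the mismatched pairs in the batch, and high when it does not. In actual training, that signal would be used to update the encoder that produces the vectors.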

While this sounds somewhat esoteric, this method of training allows for a more robust generative model. Using both positive and negative samples allows a model to perform better on new and unseen data, and converting data into vector representations is more computationally efficient, OpenAI noted. It’s also meant to work on large datasets and a lot of data types, making this method versatile and scalable.

Unlike Google, Microsoft, and other large AI firms, OpenAI’s patent war chest remains relatively empty. The company has fewer than a dozen total patents, compared to the hundreds of patent applications other AI giants file on a regular basis. There are a few potential reasons for this, said Micah Drayton, partner and chair of the technology practice group at Caldwell Intellectual Property Law.

One reason could be resources, said Drayton. While OpenAI is now an AI industry kingpin worth $80 billion, that hasn’t always been the case. The company was founded in 2015, and didn’t start filing patent applications until 2023. Giants like Google and Microsoft have decades’ worth of resources.

Drayton noted that patent litigation costs can add up quickly, and building a patent portfolio may not have been a priority for the company in its early days. The other consideration is that patent applications can often take 18 months to become public, so it’s quite possible many simply haven’t become public yet, he said. However, because of the massive head start that bigger tech firms have, OpenAI may “find themselves boxed out,” said Drayton.

“There’s three parties in that race: There’s OpenAI, there’s a competitor that has a war chest of patents, and there’s the [US Patent and Trademark Office],” he said. “They could be at a disadvantage, but there’s always the question of how patentable AI is in general.”

And because development is moving so quickly, and patent guidance is changing too, Big Tech’s head start may not be as big an advantage. “It’s possible that [competitors] don’t have a much more mature portfolio in the relevant space than OpenAI does,” Drayton said.