Baidu Flaps Its Lips

Baidu wants its AI-generated videos to make it past the uncanny valley.


For an artist, lips are often the most difficult thing to draw. AI video programs, it seems, are facing the same problem.

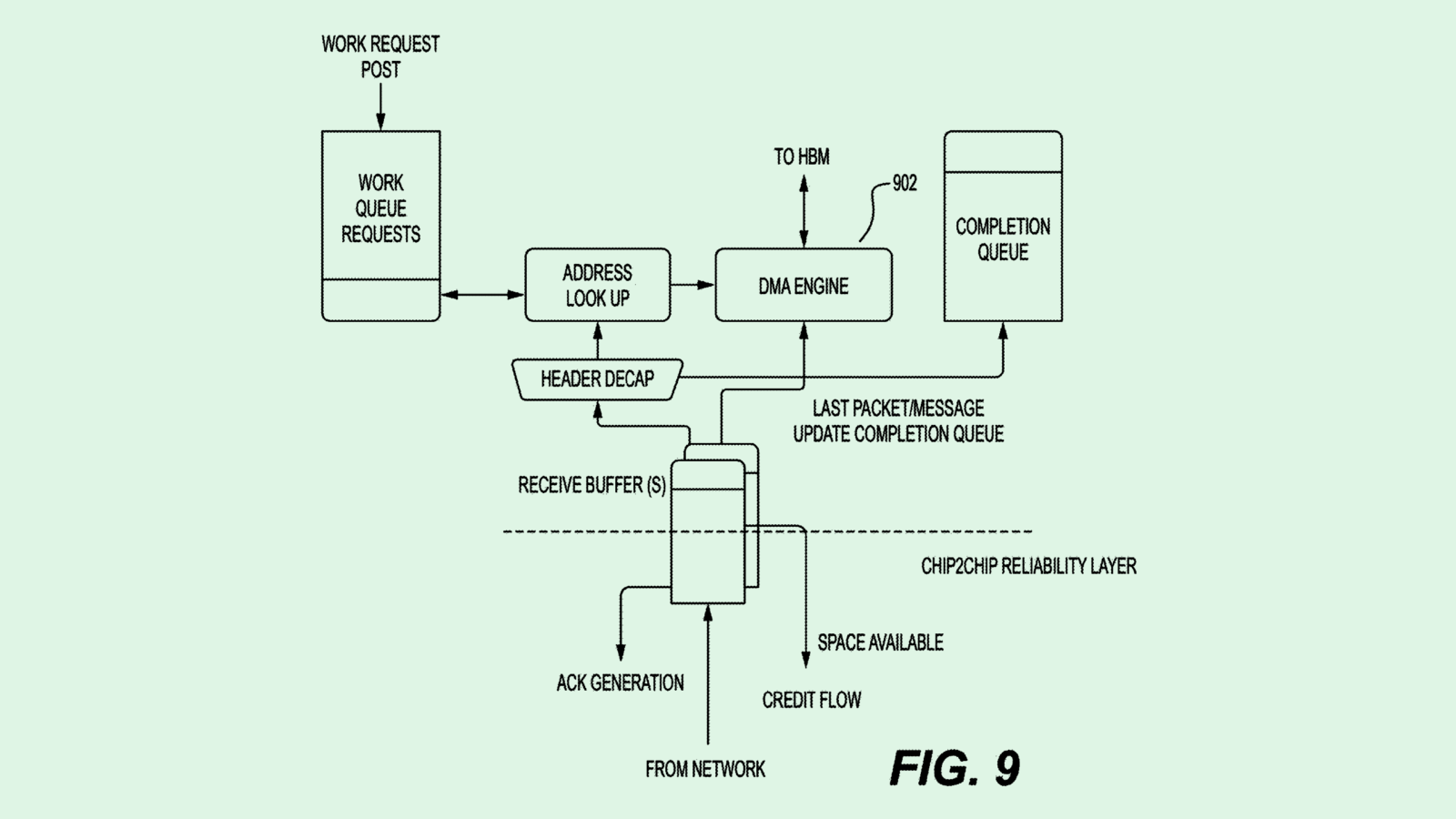

Baidu is working on tech to help digital mouth movements get past the uneasy, uncanny-valley feeling that AI-generated 3D videos often give off. Baidu’s system uses two neural networks: one to create a 3D video from audio data, and a second to correct and refine the lip movements produced by the first. The Chinese search giant noted that the technology draws on a host of different AI fields, such as “computer vision, augmented/virtual reality and deep learning.”

This tech relies on a technique widely used in machine learning called “principal component analysis” (PCA): the process of converting “high-dimensional data into low-dimensional data” to extract the main features of the data. Baidu’s system uses a neural network to perform this kind of analysis on audio data and create a 3D video from it.
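To make principal component analysis concrete, here is a minimal pure-Python sketch of generic PCA. It assumes each audio frame has already been turned into a numeric feature vector (the `frames` data below is hypothetical toy data with only three features, not anything from Baidu’s filing), and it finds the main directions of variation by power iteration on the covariance matrix. The filing’s actual features, dimensions and model are not described here.

```python
import math
import random
from typing import List, Tuple

Matrix = List[List[float]]

def mean_center(rows: Matrix) -> Tuple[Matrix, List[float]]:
    """Subtract each feature's mean so PCA measures variation around the average frame."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    return [[r[j] - means[j] for j in range(d)] for r in rows], means

def covariance(centered: Matrix) -> Matrix:
    """Sample covariance matrix (d x d) of mean-centred rows."""
    n, d = len(centered), len(centered[0])
    return [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
            for i in range(d)]

def top_components(cov: Matrix, k: int, iters: int = 500, seed: int = 0) -> Matrix:
    """Top-k eigenvectors of a symmetric matrix via power iteration plus deflation."""
    rng = random.Random(seed)
    d = len(cov)
    mat = [row[:] for row in cov]
    components = []
    for _ in range(k):
        v = [rng.random() + 0.1 for _ in range(d)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        for _ in range(iters):
            w = [sum(mat[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break  # no variance left; keep the current unit vector
            v = [x / norm for x in w]
        # Rayleigh quotient: the variance captured along direction v.
        eigval = sum(v[i] * sum(mat[i][j] * v[j] for j in range(d)) for i in range(d))
        components.append(v)
        # Deflate: remove this direction so the next pass finds the next one.
        mat = [[mat[i][j] - eigval * v[i] * v[j] for j in range(d)] for i in range(d)]
    return components

def project(centered: Matrix, components: Matrix) -> Matrix:
    """Map each high-dimensional frame to k low-dimensional coefficients."""
    return [[sum(x * c for x, c in zip(row, comp)) for comp in components]
            for row in centered]

# Hypothetical toy input: 20 frames x 3 audio features that rise and fall together.
frames = [[0.1 * i, 0.2 * i + 0.001 * (i % 2), -0.1 * i] for i in range(20)]
centered, means = mean_center(frames)
components = top_components(covariance(centered), k=1)
coeffs = project(centered, components)  # 20 frames, 1 coefficient each
```

Because the three toy features move almost in lockstep, a single coefficient per frame captures nearly all of their variation. Power iteration is used only to keep the sketch dependency-free; in practice one would typically call a linear-algebra library’s SVD or eigendecomposition.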

The output of that analysis then goes through a second neural network, which essentially checks the first network’s work and makes corrections. The corrected result is translated into a 3D video of lip movement specifically, which is applied to a “pre-constructed 3D basic avatar model to obtain a 3D video with a lip movement effect.”
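The generate, correct, then apply-to-avatar flow can be sketched as plain data plumbing. Everything model-related here is a hypothetical placeholder: `coarse_network` is a fixed linear map standing in for the first trained network, `refine_network` adds a simple neighbour-smoothing correction standing in for whatever the second network actually learns, and `apply_to_avatar` assumes the per-frame coefficients scale displacement fields added to the base mesh. None of these names or choices come from Baidu’s filing.

```python
from typing import List

Vector = List[float]
Mesh = List[Vector]

# Hypothetical stand-in for the first network: 2 lip coefficients from 2 audio features.
COARSE_WEIGHTS = [[0.5, 0.1], [0.0, 0.3]]

def coarse_network(audio_frame: Vector) -> Vector:
    """Placeholder 'network 1': audio features -> rough lip-shape coefficients."""
    return [sum(w * x for w, x in zip(row, audio_frame)) for row in COARSE_WEIGHTS]

def refine_network(seq: List[Vector], strength: float = 0.5) -> List[Vector]:
    """Placeholder 'network 2': adds a per-frame correction to the coarse output.

    Here the correction pulls each frame toward the average of its neighbours,
    a simple stand-in for a learned refinement.
    """
    refined = []
    for t, frame in enumerate(seq):
        prev = seq[max(t - 1, 0)]
        nxt = seq[min(t + 1, len(seq) - 1)]
        correction = [strength * ((p + n) / 2 - c) for p, n, c in zip(prev, nxt, frame)]
        refined.append([c + d for c, d in zip(frame, correction)])
    return refined

def apply_to_avatar(base: Mesh, basis: List[Mesh], coeffs: Vector) -> Mesh:
    """Offset a pre-built base mesh by coefficient-weighted displacement fields."""
    out = [v[:] for v in base]
    for k, c in enumerate(coeffs):
        for i, disp in enumerate(basis[k]):
            out[i] = [a + c * b for a, b in zip(out[i], disp)]
    return out

def generate_lip_video(audio: List[Vector], base: Mesh, basis: List[Mesh]) -> List[Mesh]:
    """Audio frames -> coarse coefficients -> refined coefficients -> one mesh per frame."""
    coarse = [coarse_network(frame) for frame in audio]
    refined = refine_network(coarse)
    return [apply_to_avatar(base, basis, c) for c in refined]

# Hypothetical avatar: one upper-lip point and one lower-lip point.
base = [[0.0, 1.0, 0.0], [0.0, -1.0, 0.0]]
basis = [
    [[0.0, 0.0, 0.0], [0.0, -1.0, 0.0]],  # coefficient 0: drop the lower lip
    [[1.0, 0.0, 0.0], [1.0, 0.0, 0.0]],   # coefficient 1: shift both points sideways
]
audio = [[0.0, 0.0], [1.0, 0.0], [0.0, 0.0]]  # three hypothetical audio frames
video = generate_lip_video(audio, base, basis)
```

In this toy run the middle frame’s coarse “mouth open” value of 0.5 is softened to 0.25 by the correction step, and its neighbours are nudged up to 0.125, so the lower lip moves more gradually across frames.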

Baidu said in its filing that this tech allows 3D facial renderings to be produced without “blendshape,” a common facial-animation technique that builds expressions by blending a set of predefined face shapes, which are typically sculpted by artists or captured from scans of a real person’s face.

“Lip movement is an important part of the 3D video generation process,” Baidu noted in its filing. “3D video generation is usually limited by Blendshape, which is mostly man-made, so the generated lip movement is lacking in expressiveness and details.”
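For contrast, the conventional blendshape approach that Baidu says its system avoids is usually written as a weighted sum of offsets: each target shape is stored as a full face mesh, and an expression is the neutral face plus each target’s difference from neutral, scaled by a weight. The minimal sketch below shows that standard “delta” formulation with two vertices and made-up shape names (`jaw_open`, `lip_wide`); it illustrates the general technique, not anything from the filing.

```python
from typing import Dict, List

Vertex = List[float]

def blend(neutral: List[Vertex],
          targets: Dict[str, List[Vertex]],
          weights: Dict[str, float]) -> List[Vertex]:
    """Delta blendshape: result = neutral + sum_k w_k * (target_k - neutral)."""
    result = [v[:] for v in neutral]  # copy so the neutral mesh is not modified
    for name, w in weights.items():
        target = targets[name]
        for i, (n, t) in enumerate(zip(neutral, target)):
            result[i] = [r + w * (tc - nc) for r, tc, nc in zip(result[i], t, n)]
    return result

# Hypothetical neutral lips (upper point, lower point) and two authored targets.
neutral = [[0.0, 1.0, 0.0], [0.0, -1.0, 0.0]]
targets = {
    "jaw_open": [[0.0, 1.0, 0.0], [0.0, -2.0, 0.0]],  # lower lip fully dropped
    "lip_wide": [[0.5, 1.0, 0.0], [0.5, -1.0, 0.0]],  # both points shifted sideways
}
out = blend(neutral, targets, {"jaw_open": 0.5, "lip_wide": 1.0})
```

Because every target has to be authored or scanned in advance, the mouth can only reach shapes that some combination of those targets produces, which fits the limitation Baidu’s filing describes.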

Mouth movements are incredibly tricky to render using AI. This is because AI has not yet been able to capture the “microexpressions” in human faces, or the small movements that convey a person’s nonverbal communication and emotion, Jake Maymar, VP of Innovation at The Glimpse Group, told me. It’s the reason that animators and VFX designers so often use human subjects as models, he said: “It’s part of the recipe for getting that right.”

“It’s a very, very hard thing to capture the soul of an individual,” Maymar said. “They’re called microexpressions because they’re almost subliminal. Your brain picks up on them. That light in the eyes, that life behind a person — to capture that and then recreate it digitally is very hard.”

But if developers can topple that barrier, it could open the door to more than just AI-generated VFX, said Maymar. An AI-based system that captures the subtleties of nonverbal communication and translates them to a VR or AR avatar in real time could mean the difference between a VR environment that people want to spend time in and one that they don’t.

“Once we unlock this capability, this will be the tipping point for remote communication,” Maymar said. “The reason I think Baidu is focused on this video technology is it’s sort of the first step … before getting into spatial or 3D representations of people.”

This isn’t the first time we’ve seen Baidu take an interest in AI-based video. The company is also seeking to patent AI-based tech that generates videos from a reference photo (though the example photos in the filing were mysteriously devoid of faces). Given the company’s previous patent activity, this latest filing likely won’t be the last seeking to perfect AI-generated facial movements and body gestures, Maymar said.

Baidu has been working hard to keep pace with competitors in the AI race overall. While the company’s initial launch of its AI chatbot Ernie was a flop, founder Robin Li announced in late May that Baidu would soon launch an upgrade to the large language model behind the chatbot. In early June, the company also announced a $145 million venture fund to back AI application startups.

But standing out among key players like Google, Microsoft and OpenAI is no easy feat. Making AI-based video that actually looks good may be part of Baidu’s plan to do so.

Have any comments, tips or suggestions? Drop us a line! Email at admin@patentdrop.xyz or shoot us a DM on Twitter @patentdrop. If you want to get Patent Drop in your inbox, click here to subscribe.