Facebook Takes a Closer Look (plus more from Intel & Apple)

Facebook auto-generating personalized ads, Intel’s imagination machines & Apple’s VR keyboard


1. Facebook – Understanding Image Concepts & Auto-Generating New Personalized Ads

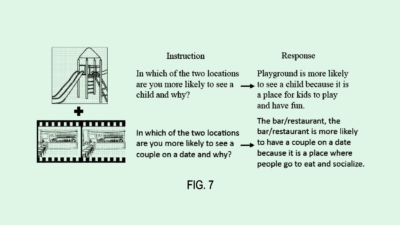

In this new filing, Facebook describes using computer vision to try to understand the concept of an image.

For example, imagine you interact with 10 pieces of content and 8 of them include images of cats. Suppose 2 of those cat images also show dogs. Using computer vision, Facebook can infer that you are more likely to engage with content that features cats, perhaps more so than content that features dogs.

By understanding the content we’re interacting with in a more “human” way – i.e. by extracting the concepts of images – Facebook can begin to model the probabilities that a given concept is what is generating the interest.
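To make the cat example concrete, here is a deliberately naive Python sketch that scores each concept by the share of your engaged items it appears in. The concept labels, the `concept_share` helper and the two non-cat items (tagged with a hypothetical "landscape" concept) are illustrative assumptions, not Facebook's method. A real model would likely also weigh how often you were *shown* each concept, not just how often you engaged with it.

```python
from collections import Counter

# Hypothetical engagement history: each item is the set of concepts a
# computer-vision model might extract from its image.
engaged_items = (
    [{"cat"}] * 6            # 6 items showing only cats
    + [{"cat", "dog"}] * 2   # 2 cat images that also show dogs
    + [{"landscape"}] * 2    # 2 items with neither (assumed for illustration)
)


def concept_share(items):
    """Return the fraction of engaged items in which each concept appears."""
    counts = Counter(concept for item in items for concept in item)
    total = len(items)
    return {concept: n / total for concept, n in counts.items()}


shares = concept_share(engaged_items)
print(shares["cat"], shares["dog"])  # 0.8 0.2
```

Cats appear in 8 of the 10 items and dogs in 2, so even this crude count points to cats as the more likely driver of engagement.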

So far, nothing shocking. Essentially, Facebook’s recommendation algorithm is trying to work out what makes us engage with a piece of content by understanding that content more conceptually. This is already happening one way or another.

What becomes interesting is when Facebook describes automatically generating images based on what is most likely to appeal to users. Using GANs (generative adversarial networks, a form of AI), Facebook could see that a user is interested in cats wearing jackets, and then generate new images of that concept to show to that user.

The next evolution of recommendation algorithms is not just showing you a piece of content that you’re likely to engage with, but generating that piece of content from scratch. In that world, platforms lift the supply constraints on niche content specific to your taste, and are no longer reliant on someone in the world creating it.

On the practical side, AI that generates content personalised to people’s tastes is extremely useful for the ads business.

One big point of friction for the ads business is actually producing the creatives for ads. A second is that ad personalisation currently hits a ceiling. In an ideal world, we’d be able to handcraft the perfect ad for each individual person in the world. Instead, we segment the world into groups (e.g. by interest, language, country, gender, race etc.) and then create ads for those specific groups.

With AI generated images, Facebook could take a company’s product and generate ads that include the product, personalised in a way to maximise the likelihood of capturing a person’s interest. Scary? Or inevitable?

2. Intel – Artificial Imagination Engine

Intel is looking into how data from sensors can be turned into 5-dimensional scenes: a 3-dimensional visual representation, a 1-dimensional temporal representation, and a branching dimension.
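One way to picture those five dimensions is as a coordinate type. The Python sketch below is purely illustrative: the `SceneCoordinate` and `Scene` names are hypothetical, and reading the branching dimension as alternative "imagined" versions of the same moment is an assumption made for this sketch, since the filing’s exact definition isn’t spelled out here.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class SceneCoordinate:
    """A hypothetical address in a '5D' scene: three spatial axes (x, y, z),
    one temporal axis (t), and a branch id. Treating a branch as an
    alternative imagined version of the same moment is an assumption."""
    x: float
    y: float
    z: float
    t: float
    branch: int


@dataclass
class Scene:
    # Maps each 5D coordinate to a description of the generated content there.
    frames: dict = field(default_factory=dict)

    def add(self, coord: SceneCoordinate, content: str) -> None:
        self.frames[coord] = content

    def branches_at(self, x: float, y: float, z: float, t: float) -> dict:
        """Collect the content of every branch at one point in space and time."""
        return {
            c.branch: content
            for c, content in self.frames.items()
            if (c.x, c.y, c.z, c.t) == (x, y, z, t)
        }


# Usage: two hypothetical branches for the same spot in a building lobby.
scene = Scene()
scene.add(SceneCoordinate(1.0, 2.0, 0.0, 10.0, branch=0), "lobby occupied")
scene.add(SceneCoordinate(1.0, 2.0, 0.0, 10.0, branch=1), "lobby empty")
print(scene.branches_at(1.0, 2.0, 0.0, 10.0))
```

Freezing the dataclass makes each coordinate hashable, so it can key a dictionary, and a query like `branches_at` hints at why a structured scene might be easier to interrogate than raw video.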

The goal is to make the huge volumes of data generated by sensors easier for humans to interpret.

Intel wants to do this by feeding sensor data into a generative adversarial network (a form of AI), which then generates a ‘scene’ with video, text and audio.

Just as we use our imagination to fill in the blanks when remembering events, Intel wants its ‘Imagination Engine’ to fill in the blanks of a scene that sensor data only partially describes.

What are the potential use cases for this?

Intel describes taking the sensor data from smart buildings and generating 5D scenes to help people better understand how the building infrastructure is used. The advantages of the 5D scene over real video footage are that the imagined scene guarantees privacy (there are no real humans in it), it could take up less memory, and it should be more intuitive to query.

We’re building machines that dream.

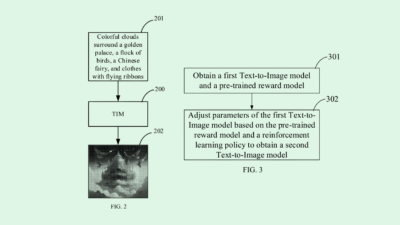

3. Apple – Keyboards for VR Headsets

Apple updated a patent application that was originally filed last year.

In this filing, Apple reveals that it is thinking about keyboards that people can use while wearing VR headsets or AR glasses.

The goal is to let users work with familiar, intuitive input devices while still enjoying an immersive experience.

The image on the right-hand side illustrates how the typing experience could work. The user can see their hands typing over a keyboard, while the text they’re typing appears in the same view.

It’s fascinating to see how companies like Apple and Microsoft are thinking about seemingly mundane interactions in a VR world, such as typing.

If people can keep using the input devices they’re already used to, we might soon see workplace laptops replaced by VR headsets connected to keyboards.

Check out this previous issue to see how else Apple is reimagining input devices with Apple Gloves.