Ford Gets Brainy (plus more from Amazon & Netflix)

Brain-machine interfaces for controlling cars, in-store pickup for orders, and more.

1. Ford – controlling vehicles with brain-machine interfaces

Brain-machine interfaces (BMIs) hit the mainstream when Elon Musk announced Neuralink – the company building a neural implant so that we can control computers with our minds.

The last place I was expecting to see a filing around this technology was with Ford, but here we are!

BMIs work by translating neuron firing patterns into a set of controls for a computer interface. They can be non-invasive, where sensors sit around the scalp and measure electrical activity in the brain (e.g. see Kernel). Or they can be invasive, as with Neuralink, which plans to have a robot implant thousands of probes in the brain.

In this patent application, Ford reveals that it is looking at how users could control a car’s infotainment system with their thoughts. For example, if the AC is too strong and you want to turn it down, you won’t need to fiddle around with the car’s controls. With a BMI, you would simply think about turning down the AC and it would happen.

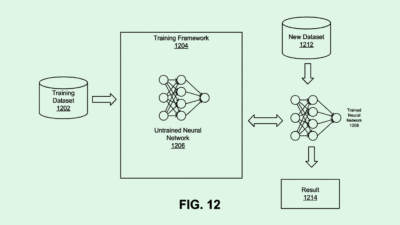

To get the BMI working with a user, the system would need to be trained to associate specific neural activity patterns with specific actions. This would be done by getting users to perform hundreds of repetitive actions and gestures using a manual input device while the system processes a feed of their neural data.
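As a rough illustration of that training idea, here is a minimal sketch that learns one average "neural pattern" per action and then picks the closest one for a new reading. The filing does not say which model Ford would use; the nearest-centroid approach, the two-number feature vectors, and the action names (`ac_down`, `volume_up`) are all assumptions made purely for illustration.

```python
import math
from collections import defaultdict


def train_centroids(samples):
    """Average the feature vectors recorded for each action.

    `samples` is a list of (feature_vector, action_label) pairs, standing in
    for neural readings captured while the user performs a manual action.
    """
    sums = {}
    counts = defaultdict(int)
    for features, action in samples:
        if action not in sums:
            sums[action] = [0.0] * len(features)
        sums[action] = [s + f for s, f in zip(sums[action], features)]
        counts[action] += 1
    return {a: [s / counts[a] for s in total] for a, total in sums.items()}


def predict_action(centroids, features):
    """Return the action whose learned centroid is closest to the new reading."""
    return min(centroids, key=lambda a: math.dist(centroids[a], features))


# Hypothetical training data: made-up readings paired with made-up actions.
samples = [
    ([1.0, 0.0], "ac_down"),
    ([0.8, 0.2], "ac_down"),
    ([0.0, 1.0], "volume_up"),
    ([0.2, 0.8], "volume_up"),
]
centroids = train_centroids(samples)
print(predict_action(centroids, [0.9, 0.3]))
```

A real system would need far richer signals and many more repetitions per action, which is presumably why the filing talks about hundreds of them.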

This filing is an interesting example of where BMIs can helpfully reduce the friction between thought and action – for both safety and human efficiency. Fortunately, Ford isn’t considering having us steer our cars with our minds!

One other takeaway from this filing is how much Ford is thinking about future human interactions with smart cars.

2. Amazon – determining pick-up times for customer orders

Here’s an interesting patent application from Amazon – mainly because it’s a little unclear why Amazon is thinking about this.

Amazon is creating a system for predicting accurate pick-up times for customers who want to collect online orders from a store. The objective is to minimise wait times both for customers coming to pick up their order and for store associates who may be waiting for those customers to arrive. Moreover, for certain items (such as perishable or frozen foods), a long wait can result in the product being ruined.

Amazon would do this by creating a number of geofences around the store. When a customer enters a geofence, let’s say 10km from the store, a notification is triggered that lets the store know the customer is on their way. Using GPS data and traffic data, the customer’s ETA can then be predicted. This in turn triggers actions in the store, such as the store associate starting to collect the ordered items. If the customer then enters another geofence that is 1km from the store, more actions may be triggered, such as the store associate beginning to collect frozen and perishable food items.
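The geofence logic described above can be sketched in a few lines. This is only an illustration under stated assumptions: the circular fences, the haversine distance check, the action strings, and the constant-speed ETA are my own simplifications, not details taken from Amazon's filing (which mentions traffic data that this sketch ignores).

```python
import math

EARTH_RADIUS_KM = 6371.0

# Hypothetical geofences, outermost first: (radius in km, action for the store).
GEOFENCES = [
    (10.0, "start collecting ordered items"),
    (1.0, "collect frozen and perishable items"),
]


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def triggered_actions(store, customer, already_triggered):
    """Return actions for geofences the customer has newly entered.

    `already_triggered` is a set that is updated in place so that each
    action fires only once per order.
    """
    distance = haversine_km(*store, *customer)
    actions = []
    for radius_km, action in GEOFENCES:
        if distance <= radius_km and action not in already_triggered:
            actions.append(action)
            already_triggered.add(action)
    return actions


def eta_minutes(distance_km, avg_speed_kmh):
    """Naive ETA assuming a constant average speed (no live traffic)."""
    return 60.0 * distance_km / avg_speed_kmh
```

Calling `triggered_actions` on each GPS update, with one `already_triggered` set per order, would fire the 10km action first and the 1km action later as the customer approaches.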

When a customer arrives at the store, there’ll be delivery bays or drive-thru collection points for users to pick up their order.

Judging from the filing, my guess is that this could be Amazon bringing together its online ordering platform and its new physical retail presence with Amazon Fresh / Amazon Go. Amazon may be looking to enable people to order their groceries and vegetables on Amazon, and then collect them very quickly from their closest Amazon Fresh store. This filing would then be Amazon’s attempt at making the process of picking up items as efficient as possible, both for the customer and for the store.

If this is the case, Amazon’s physical presence makes for an interesting flywheel. Online orders on Amazon could bring people into an Amazon physical space. Moreover, orders made in Amazon’s physical space create data for making suggestions and recommendations to users when shopping online.

3. Netflix – automatically mixing the acoustics of dubbed audio

Just a quick but interesting patent application that highlights the small things that Netflix considers when delivering a high-quality user experience.

One of the most interesting aspects of Netflix’s content strategy is how it produces original localised content and then makes it relevant for global markets. For example, Lupin is filmed in France and shot in French. Yet it also became a global hit in markets outside of France. By making locally produced content that resonates globally, Netflix is able to make the most out of its library of content. It can simultaneously build a library that resonates deeply in local markets and expand the library of relevant content for engaged users in global markets.

One important aspect of growing the market for locally produced content is audio dubbing. For example, in non-French-speaking countries, people could watch Lupin with an English dub.

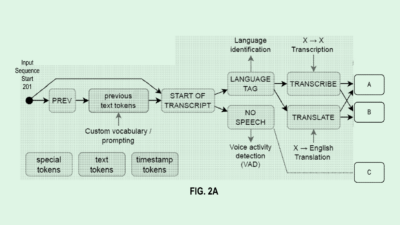

Right now, the process of dubbing dialog uses one of two different methods. The first is recording the vocals in a studio where the audio is compressed to the point where it has no echoes / reverb. The second is where an audio engineer manipulates the vocal recordings so that they reflect the acoustic atmosphere of the relevant scene. On top of this, additional sounds may then be added to the audio track, such as music or sound effects.

Netflix’s patent application looks to make the process of creating audio dubbing more efficient and more realistic.

Essentially, Netflix wants to use machine learning to detect the acoustic characteristics of a scene. For instance, a scene could be recorded in an auditorium or a bedroom. Once the acoustic characteristics are identified, they will then be applied to the dubbed audio. This processed audio will then be overlaid on the original audio.

The outcome is then dubbed audio that sounds like it was spoken in the same environment as the original dialog in the scene.
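To make the "apply the room's acoustics, then overlay" step a little more concrete, here is a toy sketch. It assumes the scene's acoustics can be summarised as an impulse response and applied by convolution, which is a standard audio technique but not something the filing confirms Netflix uses. The sample values, the `room_ir` response, and the function names are hypothetical.

```python
from itertools import zip_longest


def convolve(dry, impulse_response):
    """Apply a room's impulse response to a dry (echo-free) recording."""
    out = [0.0] * (len(dry) + len(impulse_response) - 1)
    for i, sample in enumerate(dry):
        for j, tap in enumerate(impulse_response):
            out[i + j] += sample * tap
    return out


def overlay(track_a, track_b):
    """Mix two tracks by summing samples, padding the shorter with silence."""
    return [a + b for a, b in zip_longest(track_a, track_b, fillvalue=0.0)]


# Hypothetical data: a short dry dub, a room with one echo at half volume,
# and a background track (music, effects) taken from the original audio.
dry_dub = [1.0, 2.0]
room_ir = [1.0, 0.5]
background = [0.25, 0.25, 0.25]

wet_dub = convolve(dry_dub, room_ir)
print(overlay(wet_dub, background))
```

In this framing, the machine learning part of the filing would be whatever estimates something like `room_ir` from the original scene; the sketch simply takes it as given.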

This seemingly small process of making dubbed audio sound like it was recorded in the original environment has a huge impact on how immersed a user feels in a story. If it sounded purely like a studio recording, the audio would lose a lot of character and realism.

Given that creating original local content is a big part of the Netflix content strategy, automating the acoustic matching of dubbed audio to keep a sense of realism is likely to reduce the cost and time required to take content international.