Intel May Save Power on AI Training

Intel may have found a way to train AI without running up the power bill.


Training AI can run up a company’s power bill. Intel may have found a way to save a few bucks.

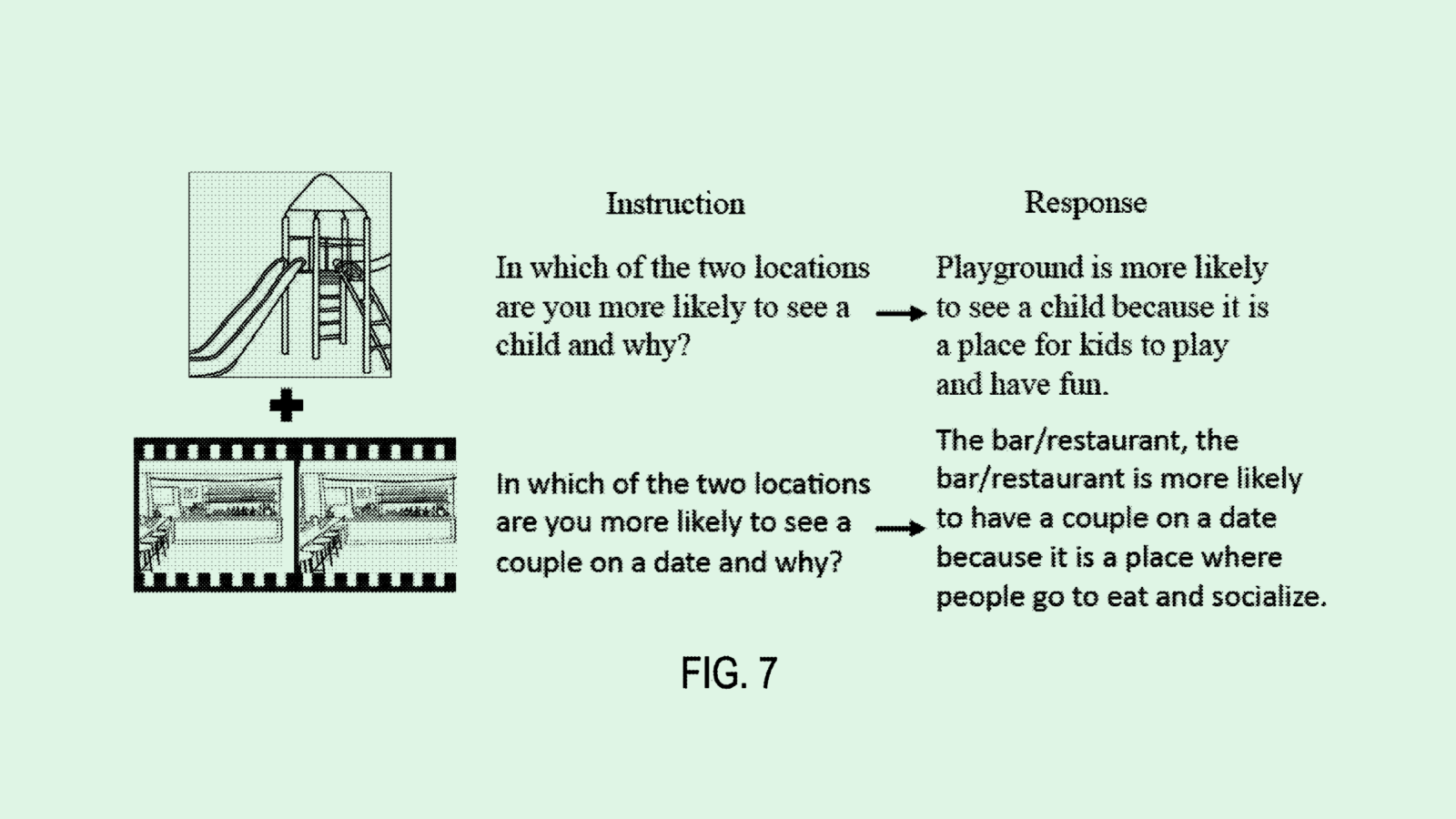

The company is seeking to patent a system for conducting “power-efficient machine learning” for images and video. The system deals specifically with decoding images and video during machine learning training, i.e., decompressing stored files so a model can analyze their content for tasks such as writing a caption.

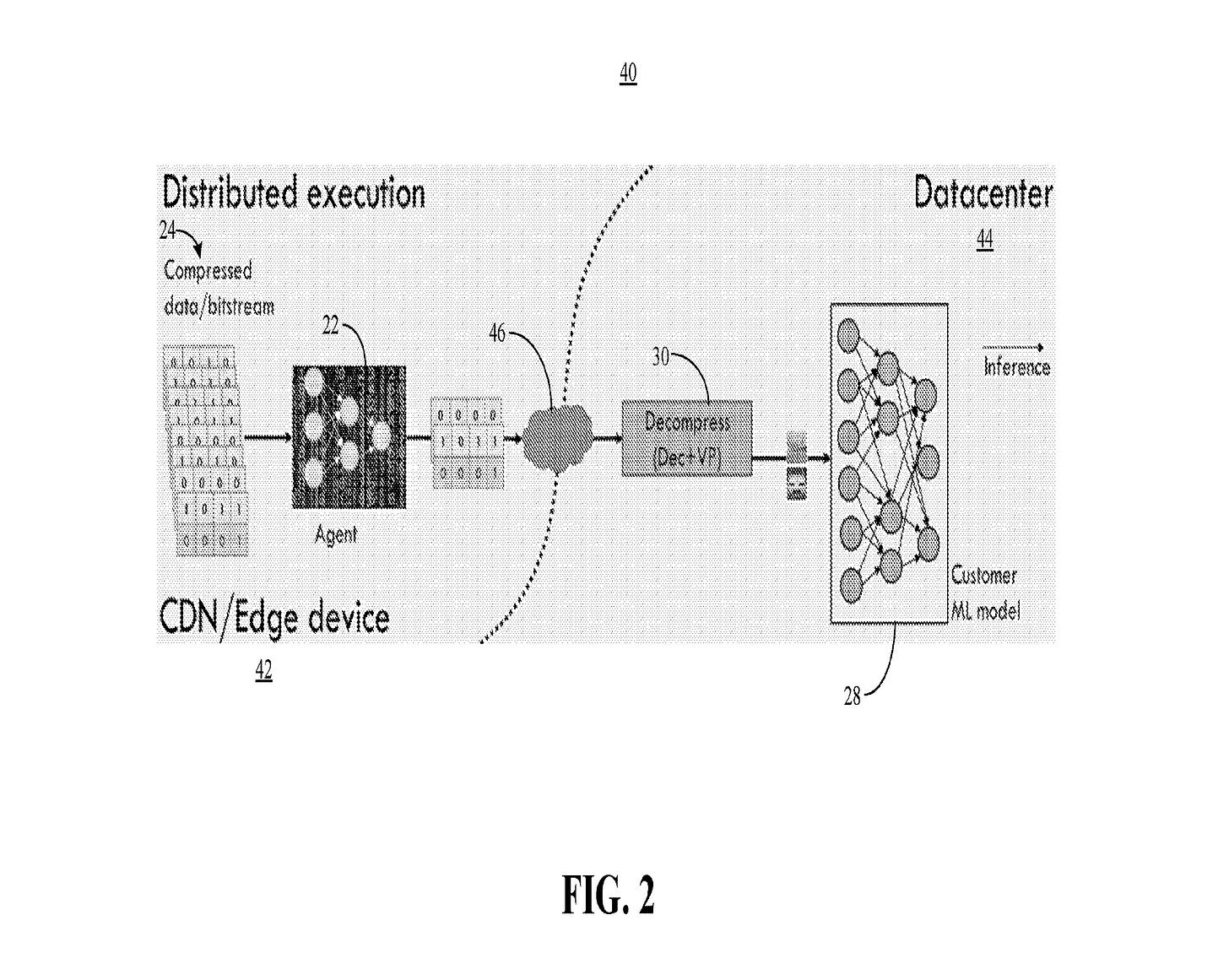

Intel’s system works by weeding out “content that would otherwise result in unnecessary decoding.” As a result, Intel noted, “only a few images are decompressed and analyzed” by the system, thereby saving power.

To break it down: Intel’s system weeds out “irrelevant” images or videos from training datasets while they are still compressed (meaning, while their file size is reduced), sparing the time and energy it would take to decompress them. Once those files are filtered out, the system tests whether the machine learning model can still do its job accurately. If withholding that training data hurts accuracy, the system’s machine learning “software agent” takes note and tries again.
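For the curious, here is a rough Python sketch of that filter-then-check loop. It is an illustration of the idea as described above, not Intel’s patented method. Everything named here is an assumption: the `relevance` score (a stand-in for whatever signal can be read without decoding), the threshold and step values, the `evaluate` callback, and the use of `zlib` in place of a real image or video codec.

```python
import zlib
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class CompressedItem:
    payload: bytes    # compressed bytes (zlib stands in for JPEG/H.264 here)
    relevance: float  # hypothetical score readable without decoding


def select_and_decode(items: List[CompressedItem], threshold: float) -> List[bytes]:
    """Decompress only the items whose relevance meets the threshold."""
    return [zlib.decompress(it.payload) for it in items if it.relevance >= threshold]


def filter_with_feedback(
    items: List[CompressedItem],
    evaluate: Callable[[List[bytes]], float],  # hypothetical: returns model accuracy
    baseline: float,
    tolerance: float = 0.01,
    threshold: float = 0.8,
    step: float = 0.2,
) -> Tuple[List[bytes], float]:
    """Relax the filter until accuracy stays within `tolerance` of `baseline`.

    Returns the decoded items and the threshold that was finally used.
    """
    while True:
        decoded = select_and_decode(items, threshold)
        accuracy_ok = baseline - evaluate(decoded) <= tolerance
        if accuracy_ok or threshold <= 0.0:
            return decoded, threshold
        # Accuracy suffered from the withheld data: admit more items and retry.
        threshold = max(0.0, round(threshold - step, 6))


if __name__ == "__main__":
    data = [
        CompressedItem(zlib.compress(b"cat photo"), 0.9),
        CompressedItem(zlib.compress(b"dog photo"), 0.5),
        CompressedItem(zlib.compress(b"blank frame"), 0.1),
    ]
    # Toy evaluator: pretend the model needs at least two images to stay accurate.
    toy_eval = lambda decoded: 0.9 if len(decoded) >= 2 else 0.7
    kept, used = filter_with_feedback(data, toy_eval, baseline=0.9)
    print(len(kept), "of", len(data), "items decoded at threshold", used)
```

In this toy run the low-relevance item is never decompressed, which is where the savings would come from. How a real system scores relevance on compressed data is the hard part, and the sketch leaves it out.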

By only feeding the machine learning model the relevant data, the system saves tons of time and energy, as image decoding is “sequential and tend(s) to be the slowest operations in the machine learning pipeline,” Intel noted. Plus, the time it takes for conventional systems to decode content that isn’t relevant “may translate into a significant amount of wasted power usage in data centers.”

According to a Bloomberg report from March, training just one model can consume more electricity than 100 US homes use in a year, and Google researchers found that AI makes up 10% to 15% of the company’s energy usage each year (roughly as much electricity as the city of Atlanta uses annually). Because the industry is so nascent, there’s little transparency into exactly how AI is impacting carbon emissions.

Since March, the industry has grown rapidly, and it’s showing no sign of stopping. Among big tech companies, Google, Microsoft, Meta and others are practically racing to outdo each other in the sector with AI integrations for both consumers and enterprises. AI startups are receiving outsized attention from a wave of VC investors looking to jump on the bandwagon. Finding ways to create AI models without burning through power could be a critical piece of the puzzle as the exponential growth gets, well … more exponential.

Intel has a lot of catching up to do if it wants to compete with the major players in AI. The company has sought to patent plenty of AI-related inventions, and has a good handful of AI offerings already on the market. But when it comes to AI chips, the company holds a tiny share of the market: less than 1%, compared to AMD’s 20% and NVIDIA’s roughly 80%.

Every patent the company can secure, however, could help it better compete. Finding a way to train AI without burning up the earth could give Intel a leg up.

Have any comments, tips or suggestions? Drop us a line! Email at admin@patentdrop.xyz or shoot us a DM on Twitter @patentdrop.