Meta Patent Uses AI, AR to Guide Users with Sensory Impairments

Meta’s recent patent aims to guide people with sensory impairments using an AI agent — but viable and long-lasting smart glasses may be difficult to achieve.

Meta wants its smart glasses to be for more than entertainment.

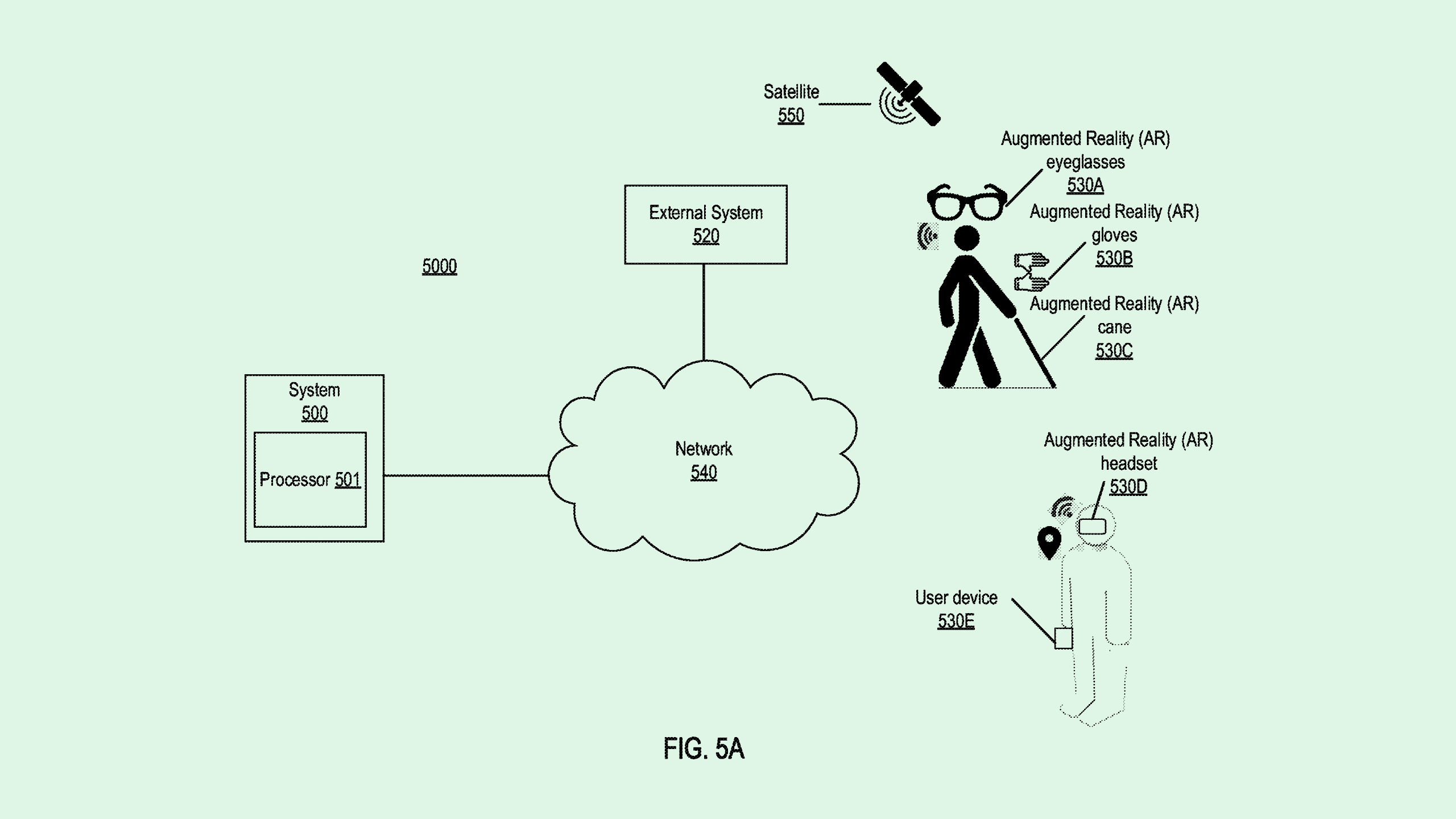

The company is seeking to patent a system for “supplementing user perception” using machine learning, augmented reality and an “artificial intelligence agent.” Meta’s tech aims to provide visual, auditory and “tactile” aids in a smart glasses display for people with “sensory impairments.”

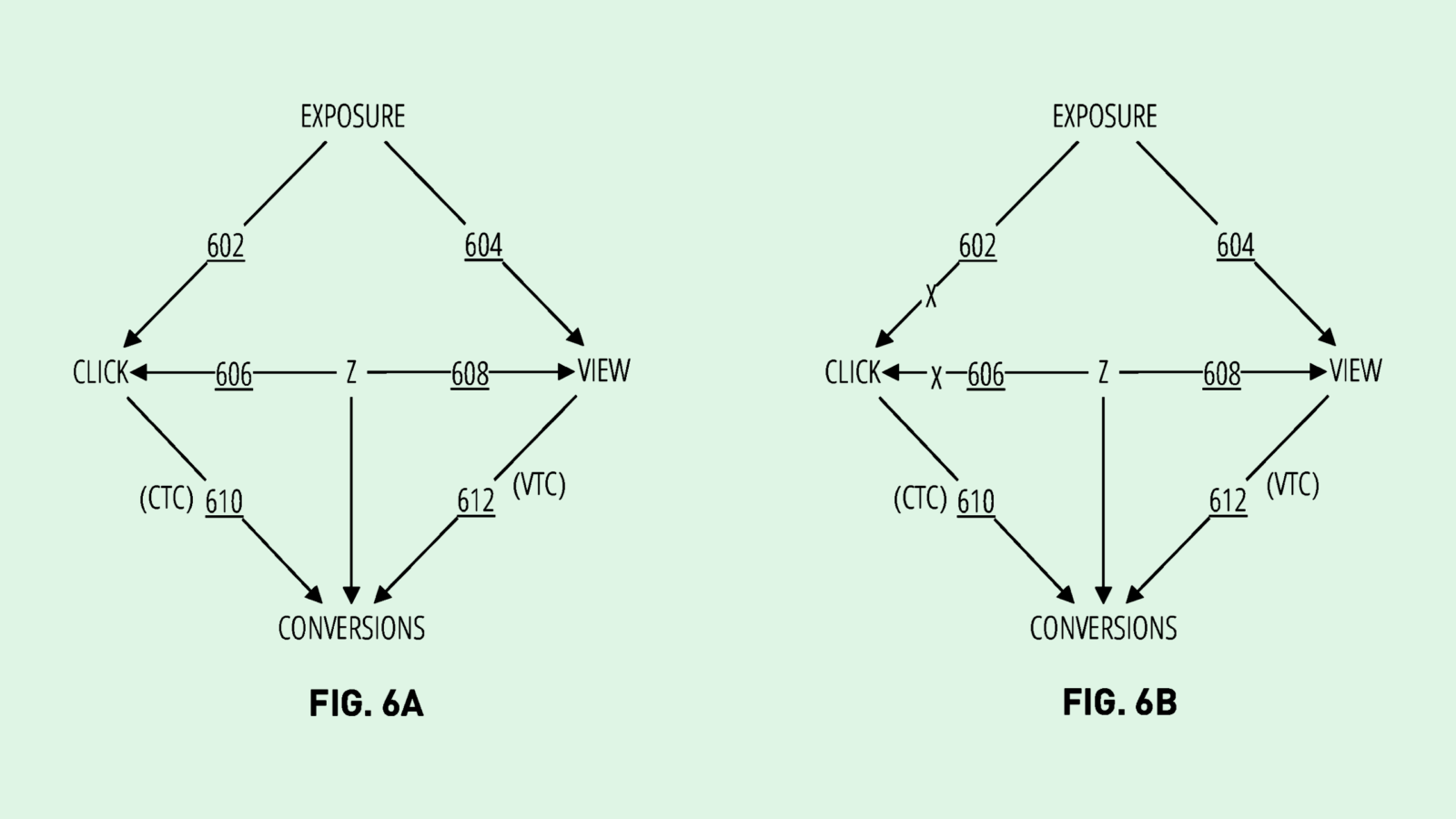

First, the system takes in data on a user’s location, context and setting to determine the relationships between objects in the environment. Along with cameras and microphones affixed to the glasses, the system may collect data from a built-in accelerometer, and potentially gesture and haptic information from sensors “located on a walking cane or prosthesis,” or in a pair of gloves.

That data is then synthesized and analyzed by AI and machine learning algorithms, which perform localization, mapping and image analysis to determine the risks associated with different locations or objects.

If the system detects a risk, such as a speeding vehicle or an alarm sounding nearby, the glasses would translate it into a signal the user can perceive. For example, if the user is visually impaired, the system may output audio or tactile signals. If a user is hard of hearing, it may provide visual signals to warn of a sound or of something outside their field of view.
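The routing step described above can be illustrated with a minimal Python sketch. It is not taken from Meta’s filing: the names `Risk`, `Impairment`, `Modality`, `FALLBACK` and `route_alerts`, the 0-to-1 risk score and the 0.5 threshold are all hypothetical, chosen only to show how a detected risk might be mapped to an output channel the user can still perceive.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum, auto


class Impairment(Enum):
    VISUAL = auto()
    HEARING = auto()


class Modality(Enum):
    VISUAL = auto()
    AUDIO = auto()
    TACTILE = auto()


@dataclass(frozen=True)
class Risk:
    label: str    # e.g. "speeding vehicle" or "alarm"
    score: float  # hypothetical 0..1 estimate from the analysis step


# Assumed mapping, following the article's examples: visually impaired
# users get audio or tactile signals, hard-of-hearing users get visual ones.
FALLBACK = {
    Impairment.VISUAL: (Modality.AUDIO, Modality.TACTILE),
    Impairment.HEARING: (Modality.VISUAL,),
}


def route_alerts(risks: list[Risk], impairment: Impairment,
                 threshold: float = 0.5):
    """Pair each sufficiently risky item with the channels to alert on."""
    channels = FALLBACK[impairment]
    return [(r.label, channels) for r in risks if r.score >= threshold]


risks = [Risk("speeding vehicle", 0.9), Risk("parked bicycle", 0.1)]
for label, channels in route_alerts(risks, Impairment.VISUAL):
    print(label, [c.name for c in channels])
```

In this sketch only the speeding vehicle clears the assumed threshold, so a visually impaired user would be alerted to it by audio and tactile signals, while the parked bicycle is ignored.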

“As a result, these techniques may provide various outputs, such as enabling users to read signs, appreciate depth and perspective, and identify objects associated with a context or setting,” Meta said in the filing.

With this patent in particular, focusing on helping those with sensory impairments may be a means to an end, said DJ Smith, co-founder and chief creative officer at The Glimpse Group.

Similar to Neuralink’s playbook (targeting its tech toward people with disabilities as a means of eventually reaching a broader audience), the wide scope of Meta’s patent may be angled toward a “bionic” future, he said. “This patent is trying to capture all of that — using technology to sense their surroundings and then inform the user of that information.”

However, another use case for context-aware smart glasses could be native advertising, said Jake Maymar, AI strategist at Glimpse. As it stands, Meta makes the bulk of its money from digital advertising, so extending that to its hardware work seems like a natural step, he said. Plus, given the sheer amount of cash that Meta’s Reality Labs is bleeding — $4.5 billion in the most recent quarter alone — the company may be interested in ways to recoup some losses.

“It’s very important to be context-aware when you’re advertising a product,” said Maymar. “You want to know where the person is, what they’re looking at, and what they’re interacting with.”

This tech seems like an extension of Meta’s current smart glasses offering in partnership with Ray-Ban, which includes an embedded AI model that lets people ask questions about their surroundings and get auditory responses. But actually getting the hardware to a point that allows for visuals isn’t going to be an easy feat, said Smith.

Because adding visuals to these devices requires so much processing power and drains the battery, doing so with the current iterations of the technology isn’t yet feasible, said Smith. “They’re going to slowly incorporate the technology while maintaining a form factor that’s viable. It’ll slowly inch its way up.”

“All of the data centers, all of the processing power, all the infrastructure — that also needs time to develop,” said Smith. “It will strategically grow as the technology grows.”