Nvidia AI Watermarking Patent Could Help Separate Real from Fake

The benefits of watermarking AI are clear: “It’s preventing fraud, preventing misinformation and disinformation.”


Amid an ever-growing pile of AI-generated content, Nvidia wants to help discern what’s authentic.

The company filed a patent application for “watermarking for speech in conversational AI,” which creates an “audio watermark” for synthetically generated speech that isn’t perceptible to the human ear.

AI-generated content has reached a point where it’s difficult to distinguish authentic speech from synthetic speech, Nvidia said in the filing. “This can be used for various malicious purposes, such as to convey untrue information or to gain access to something protected using voice recognition technology.”

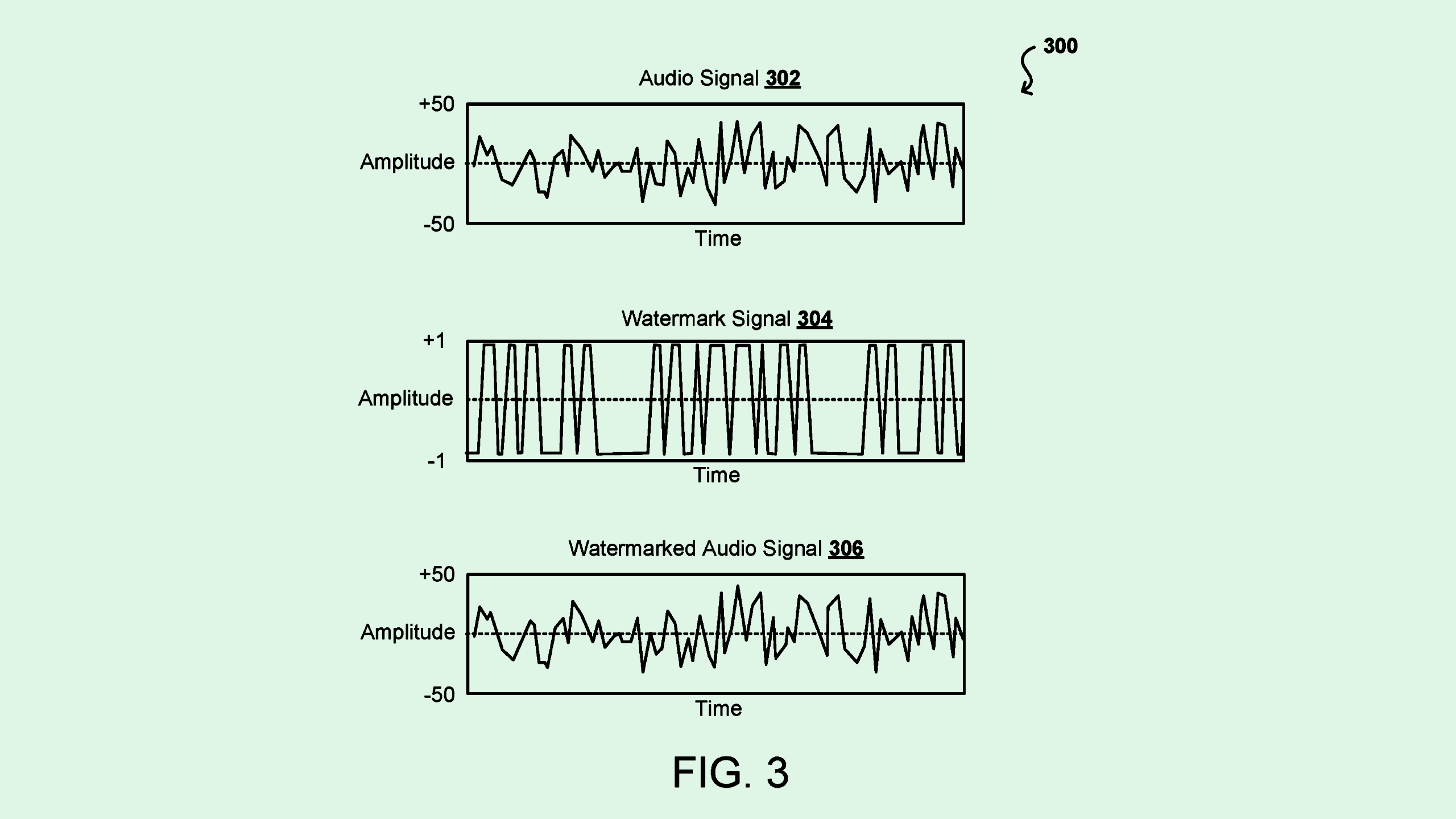

When a neural network-based text-to-speech generator creates synthetic speech, Nvidia’s tech inserts a watermark into the audio data through a process called “spread-spectrum watermarking,” which encodes digital identifiers that aren’t detectable in the sound itself.

The average listener wouldn’t notice the watermark upon playback. Instead, it’s detectable via a “key to verify presence of the expected watermark,” Nvidia said. “An entity without the key would have difficulty completely and accurately detecting, modifying, or removing watermark.”
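To make the idea concrete, here is a minimal Python sketch of spread-spectrum watermarking in general. It is not Nvidia’s implementation, and the function names, the integer key, and the `strength` value are all assumptions made for illustration. The key seeds a pseudo-random sequence of +1/-1 values that is added to the audio at low amplitude; a detector holding the same key regenerates the sequence and checks how strongly the audio correlates with it.

```python
import math
import random
from typing import List

def pn_sequence(key: int, n: int) -> List[float]:
    """Pseudo-random +1/-1 sequence derived from the secret key (hypothetical scheme)."""
    rng = random.Random(key)
    return [1.0 if rng.random() < 0.5 else -1.0 for _ in range(n)]

def embed(samples: List[float], key: int, strength: float = 0.02) -> List[float]:
    """Add the keyed sequence to the audio at low amplitude."""
    chips = pn_sequence(key, len(samples))
    return [s + strength * c for s, c in zip(samples, chips)]

def detect(samples: List[float], key: int, strength: float = 0.02) -> bool:
    """Correlate the audio with the keyed sequence; a high score means the mark is present."""
    chips = pn_sequence(key, len(samples))
    score = sum(s * c for s, c in zip(samples, chips)) / len(samples)
    return score > strength / 2

# Toy "speech": three seconds of a 440 Hz tone sampled at 16 kHz.
host = [0.5 * math.sin(2 * math.pi * 440 * t / 16000) for t in range(48000)]
marked = embed(host, key=1234)

found_with_key = detect(marked, key=1234)        # correct key
found_with_wrong_key = detect(marked, key=9999)  # someone guessing a key
found_in_clean_audio = detect(host, key=1234)    # audio that was never marked
```

With the correct key the correlation score sits near `strength`, while a wrong key or unmarked audio scores near zero, which is the gist of why an entity without the key would struggle to find the mark. A production system would also shape the added signal so it stays below the threshold of hearing and survives compression; this toy version does neither.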

The watermark may also be updated over time to determine whether or not the audio has been edited. Nvidia noted that this system may also be embedded within an audio player, such as Zoom or Skype, which would notify meeting participants that synthetic audio is being played (or prevent that audio from being played at all).

The sea of AI-generated content is constantly growing. And while sometimes an extra finger or uncanny voice crack can give away the true source of a photo or audio clip, it is often difficult to tell real from synthetic.

Watermarking, as Nvidia is proposing, may be part of the solution, said Theresa Payton, CEO and chief advisor at cybersecurity consultancy Fortalice Solutions. The benefits are clear: “It’s preventing fraud, preventing misinformation and disinformation. It’s showing you authorship and authenticity.”

And embedding watermarking tech like this into remote communication tools, as Nvidia suggests, could help build trust in remote interactions, she noted, whether it’s interviewing job candidates, discussing confidential information, or warding off cybercriminals.

That factor’s bound to attract customer interest, both in Nvidia’s product offerings and in the platforms that implement them. “Any platform that says, ‘We are implementing this technology to make sure that who you’re on the line with is not a deepfake,’ is going to have a competitive advantage,” said Payton.

So why do deepfakes and synthetically generated content matter to Nvidia? Given that the company is the darling of the AI market, with its chips powering the development and growth of multibillion-parameter models, researching this tech could be a way to demonstrate a commitment to safety and ethics, said Payton.

“They offer the chip that is fueling predictive AI and generative AI, and they’re hearing the downsides of the misuse of that technology,” Payton said.

However, with every clever solution generally comes an equally clever workaround, Payton noted. “What we have to keep in mind is, every time we implement technology, cybercriminals don’t go away,” she said. “This could be one of many things that we’ll have to implement.”