Amazon Explainability Patent Could Bring Responsible AI to AWS

Amazon wants to keep its AI models on a leash.


Amazon wants to make sure AI models don’t fall out of line after they’re shipped out.

The company filed a patent application for “global explanations of machine learning model predictions.” The service would explain how deployed machine learning models come to their conclusions and monitor for behavioral shifts after the models are deployed.

Amazon noted its tech aims to cut down on bias, save on computing and memory resources, and increase “the transparency and explainability of decisions taken with the help of machine learning models … which can in turn lead to increasing confidence in machine learning algorithms in general.”

This service, which is incorporated “as part of a larger pipeline of machine learning tasks” within a cloud computing environment (a.k.a. AWS), relies on a model trained specifically to generate predictions for individual chunks of text by breaking them down into their different attributes.

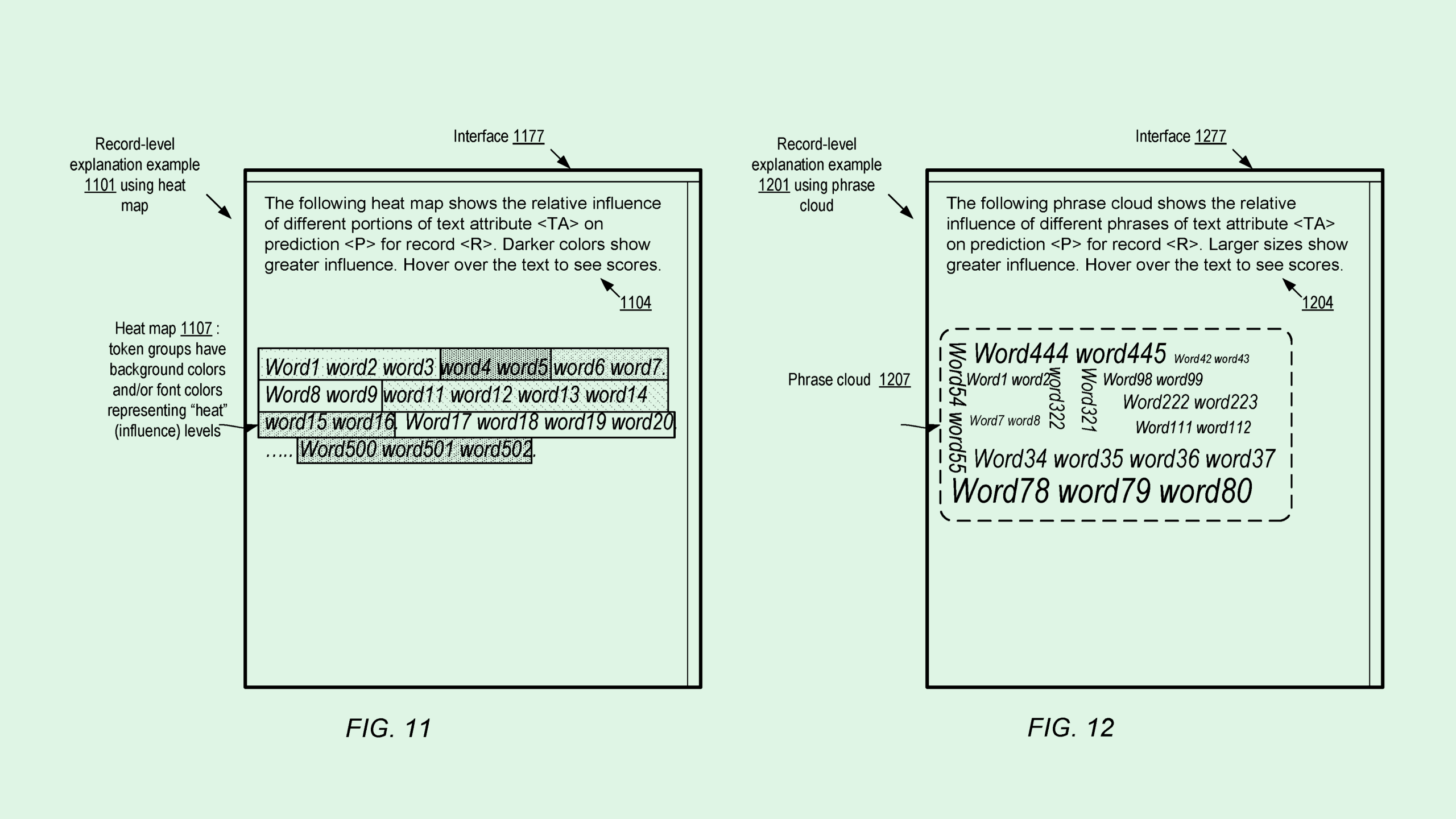

For example, this system can provide analysis based on different phrases, sentences, paragraphs, or “tokens” — words or punctuation — of a body of AI-generated text. This analysis can help a user understand how an AI model came to its conclusions.
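The filing, as described here, does not say exactly how each chunk's contribution is measured. As a minimal sketch, assuming a simple occlusion (leave-one-out) approach, which is a standard attribution technique and not necessarily Amazon's, the snippet below scores a segment by how much the prediction changes when that segment is removed. The `WEIGHTS` dictionary and `score` function are a hypothetical toy linear model standing in for a real deployed model, and `global_importance` shows one simple way per-token explanations could be averaged into a "global" view.

```python
from collections import defaultdict

# Hypothetical toy model: a linear scorer over word weights (not Amazon's model).
WEIGHTS = {"great": 2.0, "good": 1.0, "bad": -1.5, "terrible": -2.5, "not": -0.5}


def score(tokens: list[str]) -> float:
    """Toy prediction: sum of per-word weights (unknown words contribute 0)."""
    return sum(WEIGHTS.get(t.lower(), 0.0) for t in tokens)


def segment_attributions(segments: list[list[str]]) -> list[tuple[str, float]]:
    """Leave-one-out attribution per segment (a token, phrase, or sentence).

    Each segment's attribution is the full score minus the score with that
    segment removed, so positive values pushed the prediction up.
    """
    full = score([t for seg in segments for t in seg])
    out = []
    for i, seg in enumerate(segments):
        rest = [t for j, s in enumerate(segments) if j != i for t in s]
        out.append((" ".join(seg), full - score(rest)))
    return out


def global_importance(docs: list[list[str]]) -> dict[str, float]:
    """Aggregate token-level explanations: mean |attribution| per token."""
    totals: dict[str, float] = defaultdict(float)
    counts: dict[str, int] = defaultdict(int)
    for doc in docs:
        for tok, attr in segment_attributions([[t] for t in doc]):
            key = tok.lower()
            totals[key] += abs(attr)
            counts[key] += 1
    return {tok: totals[tok] / counts[tok] for tok in totals}


# Token-level vs. sentence-level explanations of the same kind of input.
print(segment_attributions([["The"], ["food"], ["was"], ["great"]]))
print(segment_attributions([["service", "was", "bad"], ["food", "was", "great"]]))
```

For this toy linear model, token-level occlusion simply recovers each word's weight; with a real model, removing a whole phrase or sentence can also capture interactions between words, which is why the choice of segment size matters.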

Additionally, these capabilities can monitor more than just individual outputs; they can also inspect how a model is performing after it’s put into practice. To do so, the system collects several explanatory predictions made during a certain time frame and determines whether or not they’ve deviated too far from certain baselines for fairness and accuracy. If they have, the system may recommend re-training the model to more accurately represent certain data or attributes.
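The monitoring step can be sketched in the same hedged way. The example below assumes a hypothetical log schema (`Record`), a deployment-time `Baseline`, and arbitrary tolerance values; none of these metrics or thresholds come from the filing. It compares a window of logged predictions against the baseline on three signals (drift in mean attribution per feature, accuracy, and a simple demographic-parity gap) and returns the reasons, if any, to recommend retraining.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Record:
    """One logged prediction from the monitoring window (hypothetical schema)."""
    attributions: dict[str, float]  # per-feature attribution for this prediction
    correct: bool                   # did the prediction match the ground truth?
    group: str                      # attribute used for a simple fairness check
    positive: bool                  # did the model predict the positive class?


@dataclass
class Baseline:
    """Reference values captured at deployment time (hypothetical)."""
    importance: dict[str, float]  # mean |attribution| per feature
    accuracy: float
    parity_gap: float             # max gap in positive-prediction rate across groups


def window_stats(records: list[Record]) -> tuple[dict[str, float], float, float]:
    """Summarize a window: global importance, accuracy, and demographic-parity gap."""
    if not records:
        raise ValueError("monitoring window is empty")
    features = {f for r in records for f in r.attributions}
    importance = {
        f: mean(abs(r.attributions.get(f, 0.0)) for r in records) for f in features
    }
    accuracy = mean(1.0 if r.correct else 0.0 for r in records)
    # Positive-prediction rate for each group seen in the window.
    rates = [
        mean(1.0 if r.positive else 0.0 for r in records if r.group == g)
        for g in {r.group for r in records}
    ]
    return importance, accuracy, max(rates) - min(rates)


def check_window(
    records: list[Record],
    baseline: Baseline,
    tol_importance: float = 0.5,  # arbitrary illustrative tolerances
    tol_accuracy: float = 0.05,
    tol_parity: float = 0.1,
) -> list[str]:
    """Return reasons to recommend retraining; an empty list means no deviation."""
    importance, accuracy, gap = window_stats(records)
    features = set(importance) | set(baseline.importance)
    drift = max(
        abs(importance.get(f, 0.0) - baseline.importance.get(f, 0.0)) for f in features
    )
    reasons = []
    if drift > tol_importance:
        reasons.append(f"attribution drift {drift:.2f} exceeds {tol_importance}")
    if accuracy < baseline.accuracy - tol_accuracy:
        reasons.append(f"accuracy {accuracy:.2f} below baseline {baseline.accuracy:.2f}")
    if gap > baseline.parity_gap + tol_parity:
        reasons.append(f"fairness: parity gap {gap:.2f} above baseline {baseline.parity_gap:.2f}")
    return reasons
```

In this sketch a non-empty result only produces a recommendation, mirroring the article's description that the system "may recommend" retraining rather than retraining automatically.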

Lots of patents in recent months have aimed to make AI more ethical and responsible, often by tackling issues such as bias and accuracy in the training process. By contrast, this tech aims to monitor the operation of an AI model after it’s out in the wild, ensuring any bad habits not caught in training aren’t amplified.

But despite an influx of patents like these, the breakneck pace of development and innovation may outstrip any guardrails put in place, said Thomas Randall, advisory director at Info-Tech Research Group, especially after companies like Meta and OpenAI cut teams focused on risk and responsible AI. The pressure to keep up with demand from competitors, investors, and customers alike could lead to safety precautions being sidelined, he added.

“I think the way in which organizations are adopting these kinds of models is actually not conducive to having a strategic overview of these responsible AI principles,” said Randall. “But if an organization doesn’t have responsible AI peace of mind, especially around fairness and bias, you’re going to end up with a bad outcome.”

However, by embedding observability and explainability tools into its vast array of service offerings, Amazon could help developers using AWS build more and worry less. AWS already leads the cloud market in share, and the AI boom has only boosted its sales. Staking a claim in responsible AI IP certainly couldn’t hurt.

“Further research into explainability and observability technologies could enhance AWS’ lead, allowing Amazon to host a diverse array of third-party models while also providing users with predictable outcomes across different models — an offering unique among big tech players,” said Ido Caspi, research analyst at Global X ETFs.