Google Wants to Use Context to Drop Wakewords

Without a wakeword, a digital assistant has to monitor its users more closely.

Google’s taking screen-sharing to a new level.

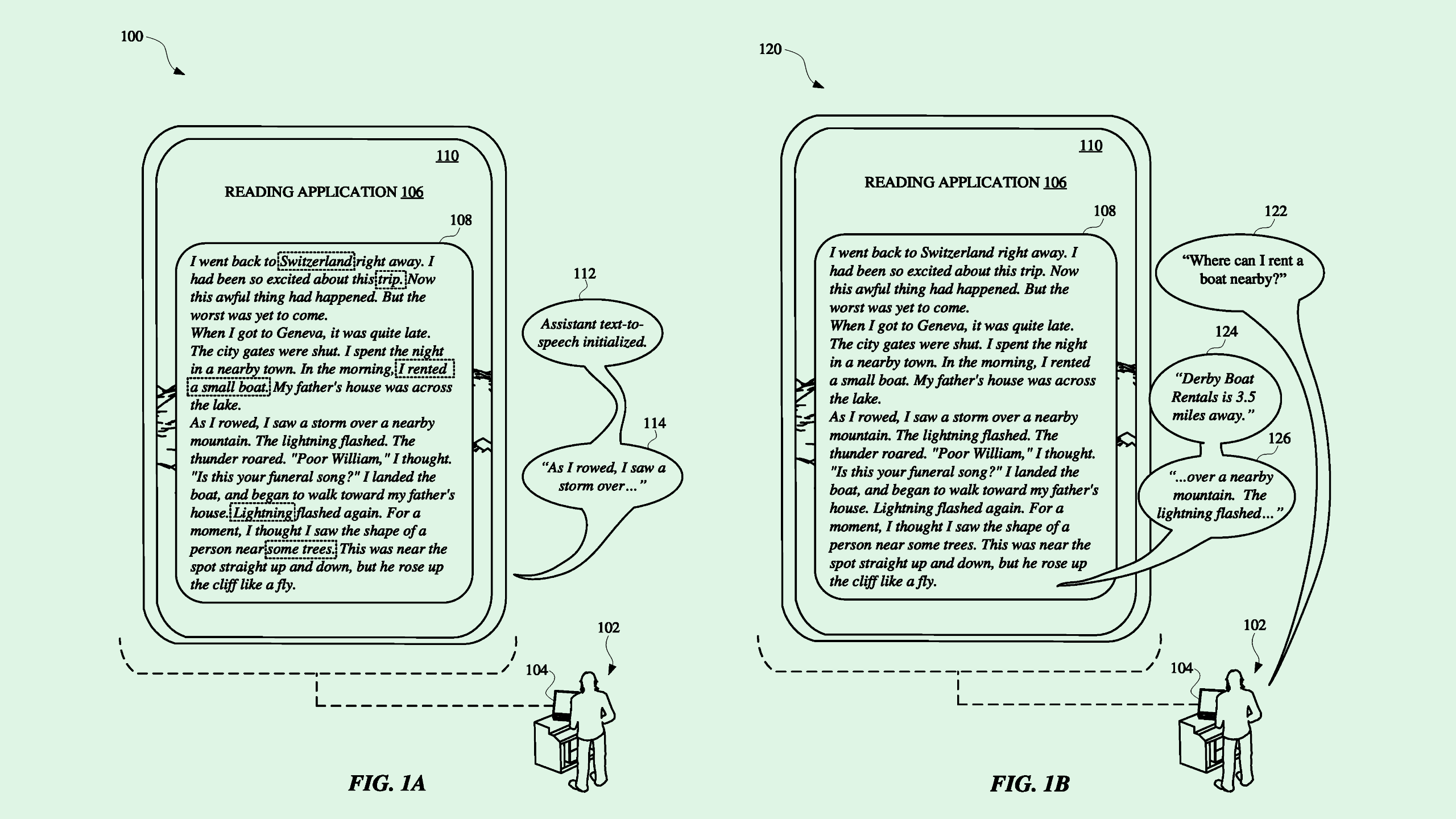

The company wants to patent “non-wake word invocation” of an automated assistant related to words or phrases a user says in response to display content. Google’s tech basically watches your screen and listens for reactions so its digital assistant can jump in without hearing “Hey, Google.”

“In various circumstances, necessitating that the user provides an invocation input can prolong the interaction between the user and the automated assistant and/or can result in the automated assistant continuing to wastefully render ongoing content, such as audio content,” Google noted in the filing.

Google’s system takes context into account by monitoring display content to identify vocal inputs and user intent that may be relevant to the content, as well as whether or not the voice request is actionable for a digital assistant. This helps it determine whether or not a user is actually talking to the digital assistant.

For example, if a user is reading an article about traveling to Ireland and says out loud, “How much are flights to Dublin?” Google’s voice assistant may kick in with an answer.

A user’s voice input has to meet a few thresholds to be picked up by this system. For one, the command has to come within a certain period after the user accesses the display content, and follow-up commands have to be asked within a certain time frame after the initial activation. Additionally, the system will only pay attention to content that a user lingers on for a certain period, rather than what they’re scrolling through quickly. This allows the system to preserve computational resources and not completely drain a user’s battery.
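The timing rules described above can be sketched roughly in code. This is a minimal illustration in Python, not Google’s implementation: the filing gives no specific numbers, so the three threshold values and every name used here (`GateState`, `should_consider`, and the constants) are assumptions invented for the example.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds in seconds; the filing does not state actual values.
DWELL_MIN_S = 3.0         # content must be on screen at least this long
INVOKE_WINDOW_S = 30.0    # first command must come within this long of viewing
FOLLOW_UP_WINDOW_S = 8.0  # follow-ups must come this soon after an activation


@dataclass
class GateState:
    """Timestamps the gate needs; both are assumptions for this sketch."""
    content_shown_at: Optional[float] = None    # when the current content appeared
    last_activation_at: Optional[float] = None  # when the assistant last responded


def should_consider(state: GateState, utterance_at: float) -> bool:
    """Return True if an utterance falls inside one of the timing windows."""
    # Follow-up command: close enough to a previous activation.
    if state.last_activation_at is not None:
        since_activation = utterance_at - state.last_activation_at
        if 0 <= since_activation <= FOLLOW_UP_WINDOW_S:
            return True

    # No content on screen means there is nothing to react to.
    if state.content_shown_at is None:
        return False

    # Initial command: the user has lingered on the content, but not so
    # long that the utterance is unlikely to be a reaction to it.
    viewed_for = utterance_at - state.content_shown_at
    return DWELL_MIN_S <= viewed_for <= INVOKE_WINDOW_S
```

With these made-up values, a remark made one second after content appears would be ignored as quick scrolling, one made 10 seconds in would be passed along for further checks, and one made a full minute later would only count if the assistant had responded within the previous eight seconds. A gate like this only decides whether an utterance is worth examining; judging whether it actually relates to the on-screen content would be a separate step.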

Taking wakewords out of the equation is something that Google has attempted to crack in several patents, including “hot-word free” digital assistant tech that watches you move and listens to you speak, “on-device machine learning models” that learn from false positives and false negatives in speech interactions, and “soft endpointing” for more natural conversations.

But moving past wakewords isn’t easy. Without a wakeword, a digital assistant has little to grab onto to know that a user is speaking to it. This forces it to pay closer attention to its users in several ways, whether that means watching what they scroll past or tracking their movements. Depending on where the user data processing actually takes place, that kind of attention could present privacy concerns.

By plugging away at this capability, Google may signal that it wants to create more seamless interactions between digital assistants and users. Adding more intuitive features could help it fight its long-standing battle with Amazon for dominance of the smart speaker market.

Google isn’t the only company aiming to make its voice assistants more intuitive. Apple moved in that direction with iOS 17, released in September, which introduced the ability to drop the “hey” from “Hey, Siri.” It also recently filed a patent for “directional voice sensing” using “optical detection,” which uses AI to pick up vibrations using synchronized light emitters.

Amazon, meanwhile, has long offered the one-word “Alexa” or “Echo” activation trigger for its voice assistants, and has attempted to make interaction with its speakers less clunky with things like emotion recognition and listening for “environmental noises.”