Google’s Multimodal Assistant Patent Watches and Learns

The tech highlights that AI needs multiple streams of data to really get to know its users.

Google’s digital assistants may be doing more than just listening.

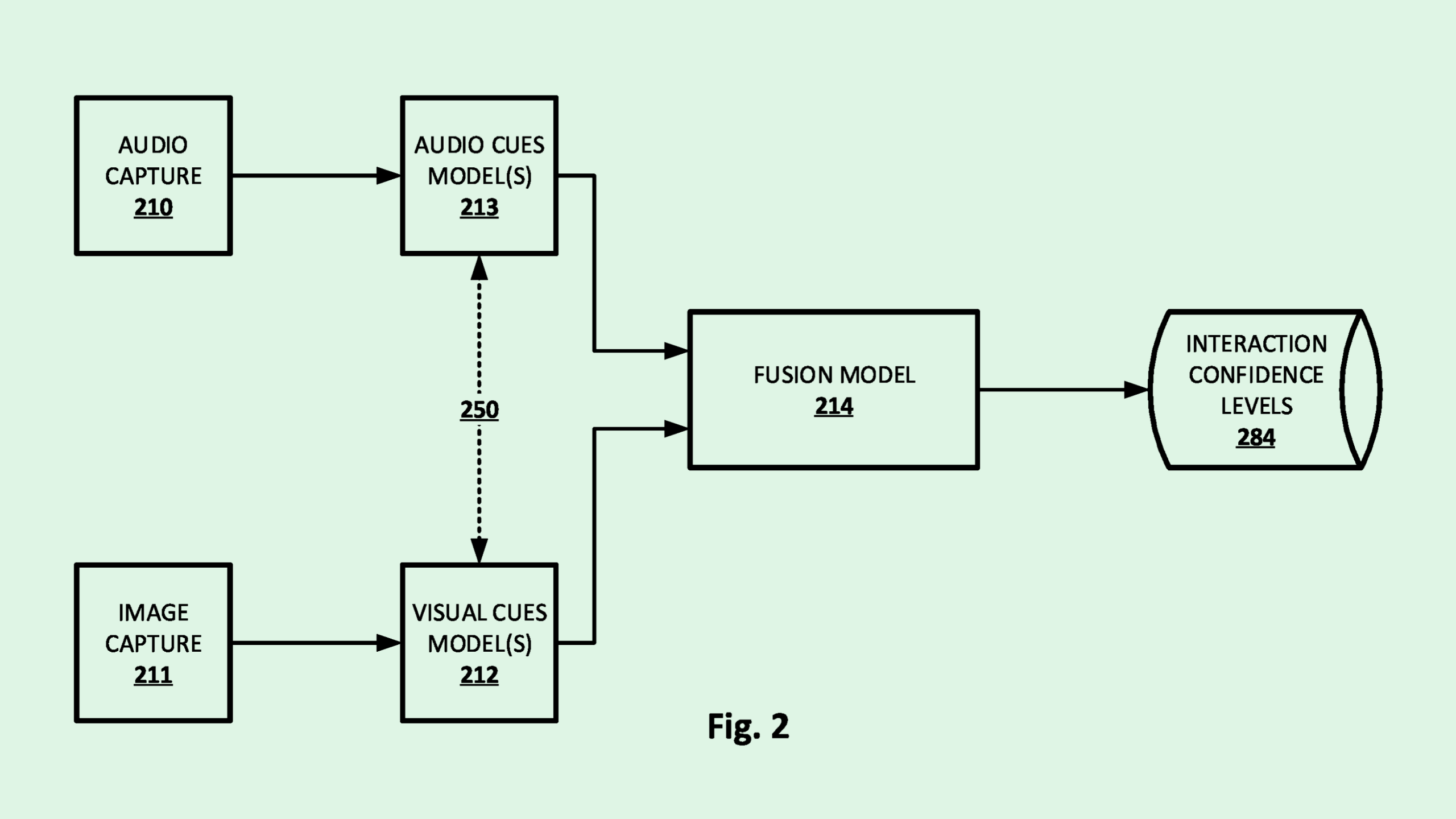

The company is seeking to patent a way to predict automated assistant interactions using “fusion of visual and audio input.” Google’s tech aims for its digital assistants to detect and act upon specific commands without needing to be explicitly invoked.

“There are various scenarios in which requiring users to utter long tail hot words before invoking an automated assistant to perform some action can be cumbersome,” Google said in the filing.

In place of needing to say specific hot words or wake words like “hey Google,” this system would allow the user to invoke a digital assistant via “hot commands,” which are physical movements or voice activity that “represent an intent to interact with an automated assistant.”

The system may catch these commands by listening passively to perform speech recognition “at time(s) other than immediately after the automated assistant is invoked,” Google noted. Textual representations of the user’s speech are then analyzed to determine whether a phrase is significant enough, or said frequently enough, to be considered a hot command.

The process is similar for visual data: Google’s system would collect image data through vision sensors (such as digital cameras, passive infrared sensors and stereoscopic cameras, among others) and analyze movements such as gestures, facial expressions or gaze direction, in some cases with a “visual features module,” to determine whether the movements indicate a desired interaction.

Finally, if the movements or phrases meet a certain threshold, they’re enrolled into Google’s system as a hot command, and used to perform specific actions when they’re said or done by the user.
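To make the enrollment step concrete, here is a minimal sketch of a frequency-based threshold. It is not taken from the filing: the class name `HotCommandEnroller`, the `min_count` parameter and the simple counting rule are all assumptions made for illustration, and the patent describes the threshold only in general terms.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class HotCommandEnroller:
    """Toy tracker for candidate hot commands (hypothetical, not Google's design)."""

    min_count: int = 3  # assumed threshold: observations needed before enrollment
    counts: Counter = field(default_factory=Counter)
    enrolled: set = field(default_factory=set)

    @staticmethod
    def _normalize(signal: str) -> str:
        # Treat phrases or gesture labels as equal regardless of case and padding.
        return signal.strip().lower()

    def observe(self, signal: str) -> bool:
        """Record one observed phrase or gesture label; return True once it is enrolled."""
        key = self._normalize(signal)
        self.counts[key] += 1
        if self.counts[key] >= self.min_count:
            self.enrolled.add(key)
        return key in self.enrolled

    def is_hot_command(self, signal: str) -> bool:
        """Check whether a signal has already been enrolled as a hot command."""
        return self._normalize(signal) in self.enrolled


enroller = HotCommandEnroller(min_count=3)
for heard in ["turn off the lights", "Turn off the lights",
              "what time is it", "turn off the lights "]:
    enroller.observe(heard)

print(enroller.is_hot_command("turn off the lights"))
print(enroller.is_hot_command("what time is it"))
```

In this sketch a phrase repeated three times is enrolled, while one heard only once is not. A real system would presumably weigh significance and visual cues as well, not just raw counts.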

While this feature could show up in smart home or speaker devices, it could also be a part of Google’s plans for its smartphones. For one thing, its phones already come equipped with a camera to pick up the visual data that the patent mentions. And while smartphone makers like Apple and Samsung are hard at work adding AI to their offerings, the latest Google Pixel already offers features using the company’s AI model Gemini.

And Google has been plugging away at getting rid of hot words and wake phrases for a while. Its patent history includes inventions like non-wake word invocation of digital assistants using the context of your screen, digital assistants that collect physical data, and methods to train AI voice assistants via a global pot of training data.

This patent hints at a common theme in the world of AI development: Context is key, said Bob Rogers, PhD, co-founder of BeeKeeperAI and CEO of Oii.ai. To get as much context as possible, AI models need to be able to handle multiple streams of data, whether text, audio or images. Getting AI to understand users on a deeper level, whether that means anticipating their requests or reading their emotions, is going to take more than one stream of data.

“The combination of video and audio definitely … creates a much better sense of context,” said Rogers. “In the last year, with the growth of multimodal foundational models, I think it’s starting to be kind of a no-brainer.”

Enriching AI models’ data streams is crucial for voice assistant tech to move past wake words. But one major issue these systems face is false positives, said Rogers. In trying to catch as many signals as they can to build up a bevy of invocation-free commands, these systems are bound to act on requests that a user didn’t actually make.

Another issue is privacy. While Google notes in this application that a lot of the data processing and storage is performed locally, it also says that cloud-based modules may be used for several of its components, including text-to-speech, speech-to-text, visual and audio feature modules and an “intent matcher.” Whether via your phone or your smart speaker, that means this system would be constantly siphoning up a lot of your data.

“Just saying that they’re keeping some of the stream of data on the device is probably not sufficient to guarantee privacy,” Rogers said. “They’re probably giving themselves an out there, but they’re probably streaming quite a lot of data to the cloud.”