Microsoft Coding AI Patent Highlights Ethics Concerns with Productivity Tools

A Microsoft patent for a machine learning-based coding tool underscores the potential pitfalls of relying too much on AI for productivity.


Microsoft wants to reward its AI models for avoiding hallucinations.

The tech firm is seeking to patent a deep learning-based code generation platform trained through “reinforcement learning using code-quality rewards.” Microsoft’s system fine-tunes a generative AI model to produce more relevant and useful source code for developers.

Conventional code generation systems built on deep learning, which uses neural networks to learn from large amounts of data, are often prone to bias and quality issues, Microsoft noted, saying “this may result in the predicted output being less useful for its intended task.”

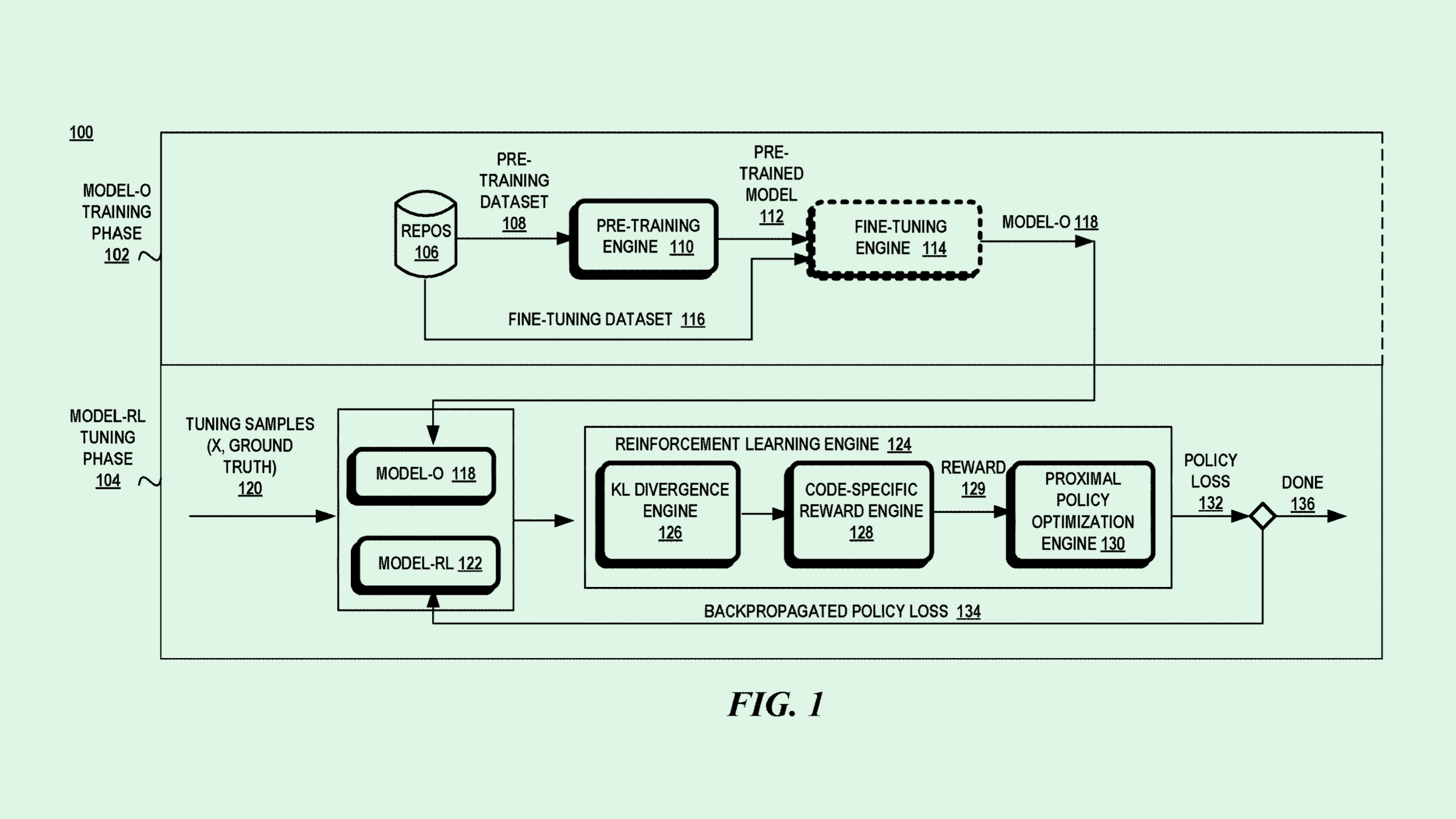

In Microsoft’s method, an initial deep learning model is first trained to generate and predict source code, learning from large datasets packed with source code examples. The model is then fine-tuned to create code for a specific task using reinforcement learning, a type of training that rewards or penalizes the model based on its performance.
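The article does not describe Microsoft’s training algorithm in detail. As a rough illustration of what “rewards or penalizes the model” means, the sketch below uses REINFORCE, a textbook policy-gradient method, on a toy “model” that simply chooses among three hard-coded candidate snippets. The candidates, the pass/fail reward rule, and names such as `reinforce` are hypothetical; a real system would update the weights of a large neural network rather than three logits.

```python
import math
import random

# Hypothetical candidate outputs a toy "model" can choose between for one prompt.
CANDIDATES = [
    "def add(a, b):\n    return a + b\n",  # correct
    "def add(a, b)\n    return a + b\n",   # missing colon: syntax error
    "def add(a, b):\n    return a - b\n",  # parses, but behaves incorrectly
]

def reward(code: str) -> float:
    """Toy reward: +1 if the code defines a working add(), otherwise -1.

    WARNING: exec() runs the candidate code; only use on trusted toy inputs.
    """
    namespace: dict = {}
    try:
        exec(compile(code, "<candidate>", "exec"), namespace)
        return 1.0 if namespace["add"](2, 3) == 5 else -1.0
    except Exception:
        return -1.0  # syntax or runtime errors are penalized

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce(steps: int = 500, lr: float = 0.1, seed: int = 0):
    rng = random.Random(seed)
    logits = [0.0] * len(CANDIDATES)  # the toy "policy parameters"
    for _ in range(steps):
        probs = softmax(logits)
        i = rng.choices(range(len(CANDIDATES)), weights=probs)[0]  # sample an output
        r = reward(CANDIDATES[i])
        # REINFORCE update: d log pi(i) / d logit_j = 1[j == i] - probs[j],
        # scaled by the reward, so rewarded choices become more likely.
        for j in range(len(logits)):
            logits[j] += lr * r * ((1.0 if j == i else 0.0) - probs[j])
    return softmax(logits)

probs = reinforce()
print([round(p, 3) for p in probs])
```

Because only the correct snippet earns a positive reward, probability mass shifts toward it as training proceeds, while the broken and incorrect snippets are pushed down.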

Microsoft’s system would reward or penalize a model based on how it scores on several “code-quality factors.” These include proper syntax; minimal errors in compilation, functionality, and execution; readability; and comprehensiveness.
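One way to picture a multi-factor reward is as a weighted sum of automated checks. The sketch below is a hypothetical Python illustration, not Microsoft’s implementation: it covers only some of the listed factors (syntax, execution, functional correctness via unit tests, and a line-length proxy for readability), and the weights, the `quality_reward` name, and the [-1, 1] scaling are all assumptions.

```python
import ast

# Hypothetical weights; the patent summary does not say how factors are weighted.
WEIGHTS = {"syntax": 0.2, "execution": 0.2, "functionality": 0.4, "readability": 0.2}

def quality_reward(code: str, func_name: str, tests: list) -> float:
    """Combine crude code-quality checks into a reward in [-1, 1].

    `tests` is a list of (args_tuple, expected_result) pairs.
    WARNING: exec() runs the candidate code; only use on trusted toy inputs.
    """
    scores = {}
    # Syntax: unparseable code gets the maximum penalty outright.
    try:
        ast.parse(code)
    except SyntaxError:
        return -1.0
    scores["syntax"] = 1.0

    # Compilation/execution: does running the module-level code raise?
    namespace: dict = {}
    try:
        exec(compile(code, "<candidate>", "exec"), namespace)
        scores["execution"] = 1.0
    except Exception:
        scores["execution"] = 0.0

    # Functionality: fraction of unit tests the target function passes.
    func = namespace.get(func_name)
    passed = 0
    for args, expected in tests:
        try:
            if func is not None and func(*args) == expected:
                passed += 1
        except Exception:
            pass  # a crashing test counts as a failure
    scores["functionality"] = passed / len(tests) if tests else 0.0

    # Readability proxy: share of non-blank lines within 79 characters.
    lines = [line for line in code.splitlines() if line.strip()]
    scores["readability"] = (
        sum(len(line) <= 79 for line in lines) / len(lines) if lines else 0.0
    )

    weighted = sum(WEIGHTS[k] * scores[k] for k in WEIGHTS)  # in [0, 1]
    return 2.0 * weighted - 1.0  # positive values reward, negative values penalize

TESTS = [((2, 3), 5), ((1, 1), 2)]
good = "def add(a, b):\n    return a + b\n"
wrong = "def add(a, b):\n    return a - b\n"
broken = "def add(a, b)\n    return a + b\n"

for name, snippet in [("good", good), ("wrong", wrong), ("broken", broken)]:
    print(name, quality_reward(snippet, "add", TESTS))
```

In this toy setup, code that parses and runs but fails its tests still earns a small positive reward, which is one reason the weighting of functional correctness matters; the right balance is a design choice the article does not specify.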

The system computes a metric score based on how closely the predicted source code matches “ground truth code,” or code that’s known to be correct. That score is then used to further tune the model’s parameters so that it learns to “take actions that account for the quality aspects of the predicted source code,” Microsoft said.
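The article does not name the metric Microsoft uses to compare predicted code with ground truth. As a stand-in, this sketch tokenizes both snippets and scores them with Python’s `difflib.SequenceMatcher.ratio()`, so pure formatting differences are ignored while missing or changed tokens lower the score. The tokenizer regex and the `match_score` helper are hypothetical; published code-generation research often uses richer metrics such as CodeBLEU.

```python
import difflib
import re

# Words (identifiers, keywords, digits) or single punctuation characters.
TOKEN_RE = re.compile(r"\w+|[^\w\s]")

def tokenize(code: str) -> list:
    return TOKEN_RE.findall(code)

def match_score(predicted: str, ground_truth: str) -> float:
    """Token-level similarity in [0, 1]; 1.0 means identical token sequences.

    Stand-in metric: difflib's ratio() is 2*M/T, where M is the number of
    matched tokens and T is the total token count of both sequences.
    """
    a, b = tokenize(predicted), tokenize(ground_truth)
    if not a and not b:
        return 1.0
    return difflib.SequenceMatcher(None, a, b, autojunk=False).ratio()

ground_truth = "def area(r):\n    return 3.14159 * r * r\n"
reformatted = "def area( r ):\n  return 3.14159*r*r\n"  # same tokens, different spacing
wrong = "def area(r):\n    return r * r\n"              # drops the constant

print(match_score(reformatted, ground_truth))
print(match_score(wrong, ground_truth))
```

A score like this could then serve as part of the reward signal during fine-tuning, with higher similarity to the ground truth translating into a larger reward.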

Productivity has been a mainstay of Microsoft’s AI plans. The company has gone all-in on Copilot, its AI companion, since launching it last March, and debuted PCs embedded with its AI technology in May. Microsoft-owned GitHub also offers its own AI coding tool, called GitHub Copilot.

While some fear that AI may make their jobs obsolete, these tools can be incredibly helpful if implemented properly, said Thomas Randall, advisory director at Info-Tech Research Group. In ideation, monitoring, and business analytics, many are already finding use cases for this tech, he said. And in software engineering environments, a tool like Microsoft’s could massively speed up project development.

Businesses are still figuring out the limits of these systems, said Randall. But lack of proper education on AI may be blurring the lines. “A big downside that we potentially will see, especially with users who have not been trained by the organization on how to use these tools effectively, is over-reliance,” he noted.

This is something Microsoft seems to recognize too: In an interview with TechRadar earlier this week, Colette Stallbaumer, general manager for Microsoft 365 and Future of Work, said that the company’s goal is to democratize AI, not for it to take over. “We want these tools to benefit society broadly,” Stallbaumer said. “There’s no skill today that AI can do better than us … you can either choose fear, or choose to lean in and learn.”

Learning where to draw the line is especially important when considering where AI may fail, said Randall. These models still often struggle with things like hallucination, accuracy, and bias.

While Microsoft’s patent attempts to cover these issues in code-generation contexts, one issue is seemingly missing: explainability, said Randall. Generative AI’s outputs are often created within a black box. But as AI becomes more ingrained in modern-day workplaces, Randall said, it’s important to understand how the tech comes up with its answers. With that piece missing, relying on these systems may result in inaccurate information, security issues, or accidental plagiarism.

This, too, is where training is vital, said Randall. “There is that core question of how you can train your users to interact with this tool in the right way,” he said. “That you are skeptical, that you maintain a critical thought over it, and that you’re not just accepting what it tells you.”