Microsoft’s Model Screener Could Create More Responsible AI Tools

Microsoft may want its language models to prove their worth.


Microsoft wants to test its AI models’ mettle.

The company is seeking to patent a system for “model capability extraction.” The filing describes a system that probes what a large language model can do using specific queries, with the aim of helping models be “fully utilized to their potential by providing a systematic way to discover capabilities possessed by the model.”

“Due to such complexity and extensive training of large models, it is often not completely clear what capabilities the model actually possesses,” Microsoft noted in the filing.

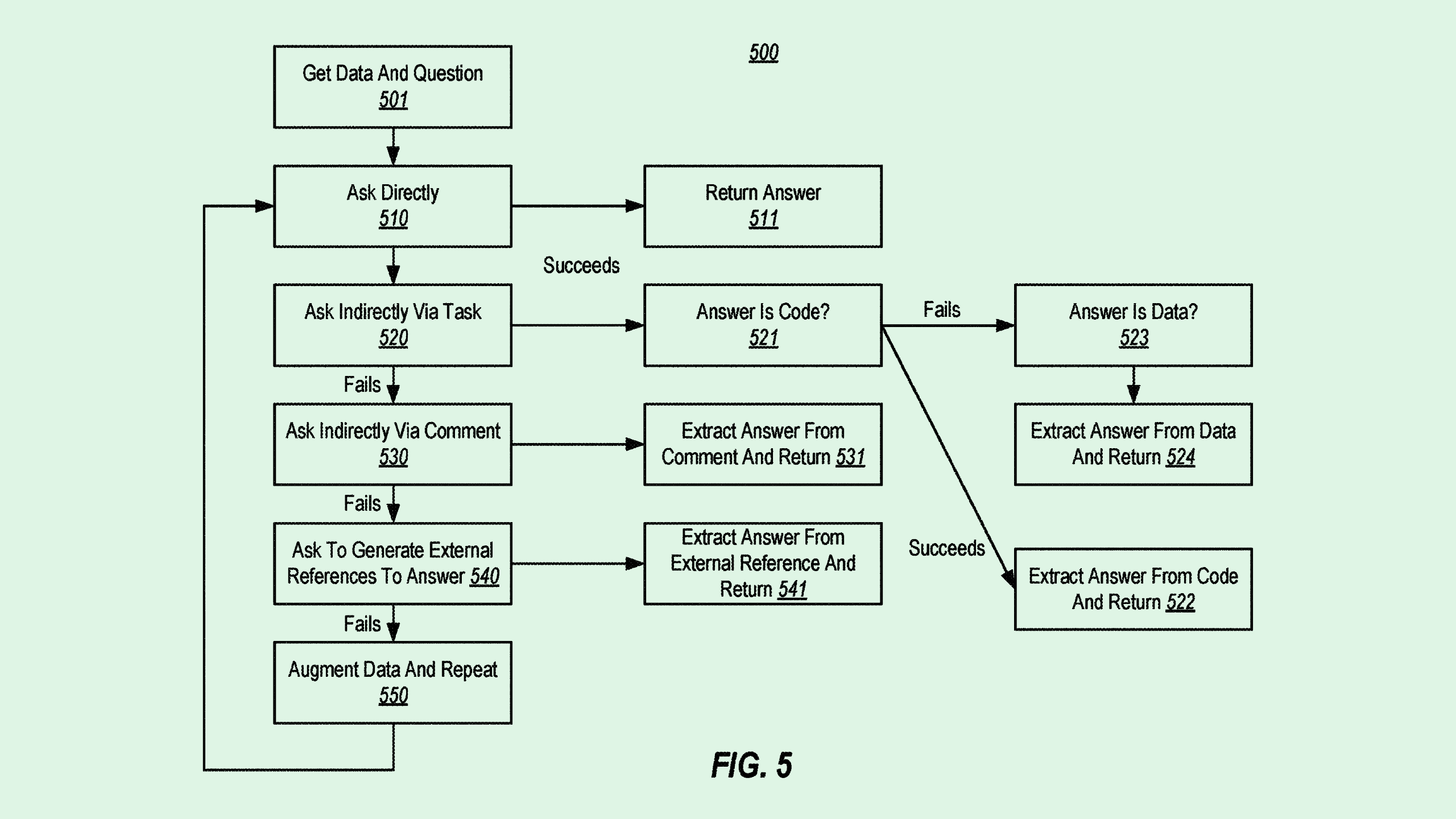

Microsoft’s tech is fairly simple: Feed an AI model certain inputs and analyze how it responds to figure out its capabilities. The system tests models using “indirect queries” and interactions, meaning inputs designed to elicit outputs that either aren’t natural language at all or aren’t “semantically responsive” to the question that was asked.

For example, if you asked an AI model to perform a task, such as generating data, code, images or other media, Microsoft’s system would evaluate the result to determine whether the model actually performs that task well.

What the filing calls a “capability extraction system” may request these tasks from the AI model multiple times to ensure that the model can perform them repeatedly with good results.
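The filing doesn't publish code, but the basic loop it describes (request a task repeatedly, score each output, and decide whether the model reliably has the capability) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Microsoft's method: the names `extract_capability`, `parses_as_python` and `has_capability`, the `attempts` count and the 0.8 pass-rate `threshold` are all hypothetical, and a toy function stands in for a real language model. The evaluator checks whether generated code parses, an example of judging an output that isn't natural language.

```python
import ast
from dataclasses import dataclass
from itertools import cycle
from typing import Callable


@dataclass
class CapabilityResult:
    """Tally of repeated attempts at one task (hypothetical structure)."""
    task: str
    attempts: int
    successes: int

    @property
    def success_rate(self) -> float:
        return self.successes / self.attempts if self.attempts else 0.0


def extract_capability(
    model: Callable[[str], str],
    task_prompt: str,
    evaluate: Callable[[str], bool],
    attempts: int = 5,
) -> CapabilityResult:
    """Ask the model to perform the same task several times and score each output."""
    successes = 0
    for _ in range(attempts):
        output = model(task_prompt)
        if evaluate(output):
            successes += 1
    return CapabilityResult(task_prompt, attempts, successes)


def parses_as_python(output: str) -> bool:
    """Hypothetical evaluator: the output counts as a success if it is valid Python."""
    try:
        ast.parse(output)
        return True
    except (SyntaxError, ValueError):
        return False


def has_capability(result: CapabilityResult, threshold: float = 0.8) -> bool:
    """Treat the capability as present only if the pass rate meets the threshold."""
    return result.success_rate >= threshold


if __name__ == "__main__":
    # Toy stand-in for a model: alternates between valid and broken code.
    outputs = cycle(["def f():\n    return 1", "def f( return"])
    result = extract_capability(
        lambda prompt: next(outputs),
        "Write a Python function that returns 1",
        parses_as_python,
        attempts=5,
    )
    print(result.successes, result.success_rate, has_capability(result))
```

Running the task several times, rather than once, is what separates a capability the model reliably has from one it only demonstrates occasionally; the threshold is where that line would be drawn.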

Microsoft’s patent could give the company a claim over an AI stress-tester with a wide range of potential applications, said Bob Rogers, PhD, co-founder of BeeKeeperAI and CEO of Oii.ai. Microsoft outlined several scenarios in the filing, but testing an AI model’s capabilities by having it show rather than tell could be applied to practically anything.

For example, this could test how well a model can diagnose a medical patient based on clinical notes. Applied to a niche customer service chatbot, it could test how capably the bot responds to specific questions. With a computer vision model, it could test how reliably the model picks up on certain features or objects.

This could also help create AI that’s more ethical and responsible, Rogers noted. Microsoft’s tech could test how well an AI model handles private data without revealing it, or whether a model’s output contains bias. While this doesn’t solve the “black box” problem that AI models present, it could offer some assurance that an AI model is working as intended.

“We’re always trying to figure out how to get AI or machine learning to explain itself, which isn’t always the right approach,” said Rogers. “Here, they’re taking the approach of not explaining how (a model) does it, but demonstrating clearly that it can do the thing.”

This could also help deal with hallucination, in which an AI model presents incorrect or fabricated information as fact, often because of gaps in its training data. If Microsoft is testing a system’s ability to understand facts, it could help detect when an AI model starts getting things wrong, Rogers noted.

Plus, given that Microsoft is one of the frontrunners in the AI race, securing this patent could give the company a “unique way of protecting their own services from liability” arising from privacy problems, bias, or incorrect outputs.