Meta’s Machine Learning AR Patent Fuses Its AI and Metaverse Bets

Despite investors’ reaction to the company’s big spending, Meta’s potential to monetize AI and its access to data may give it a leg up in the long run.


Meta wants to broaden the picture.

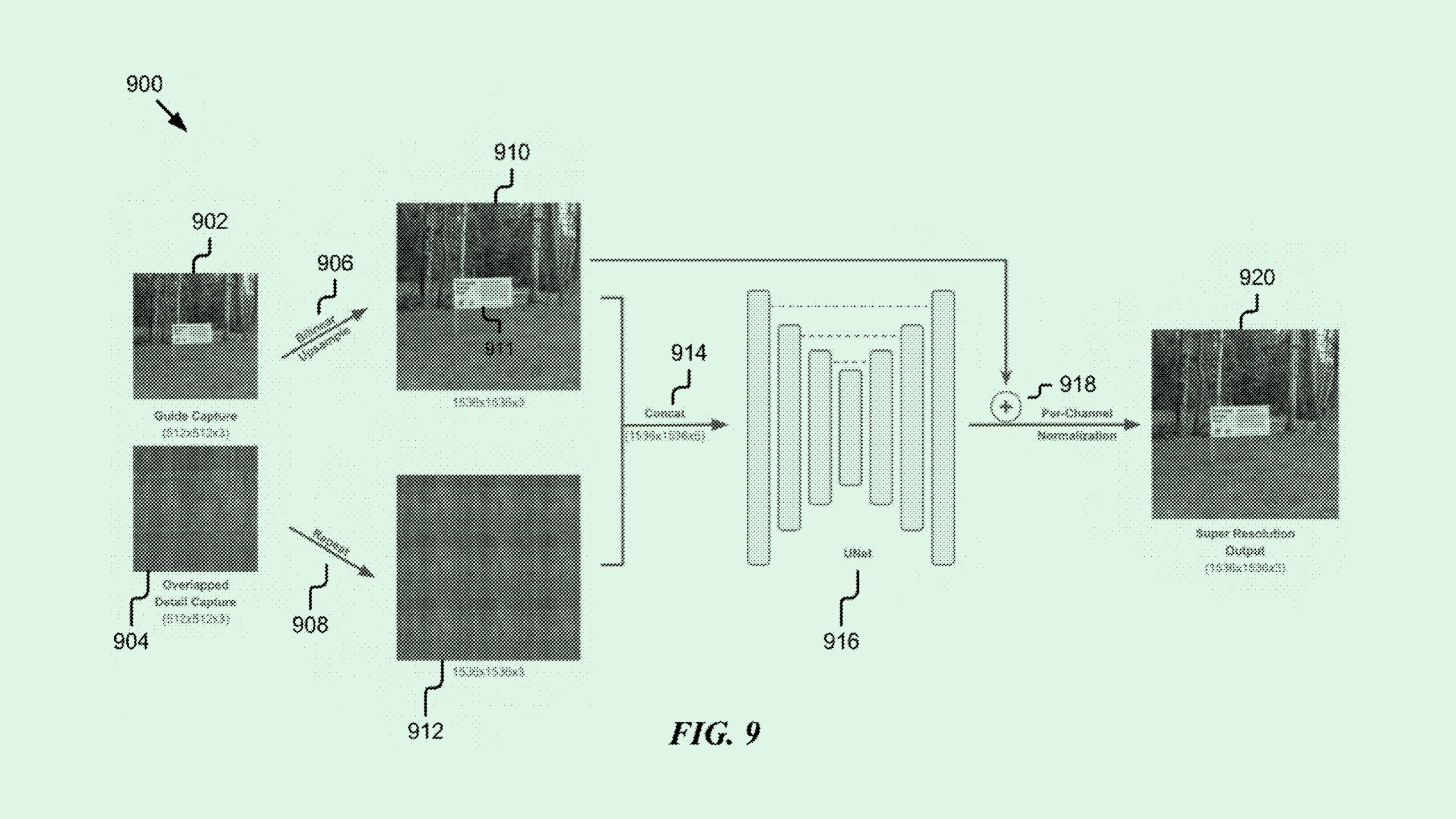

The company is seeking to patent a system for generating “super resolution images” using a “duo-camera artificial reality device.” The technology uses machine learning to reconstruct and generate images for artificial reality, fusing Meta’s two main focuses: AI and the metaverse.

Artificial reality glasses have two main requirements: high-resolution imagery and a small form factor, which Meta noted are “in general contrary to each other.” “Single-image super resolution,” which uses machine learning to make low-resolution images clearer, can help satisfy both. Meta’s patent aims to improve on such methods specifically for a pair of smart glasses.

Meta’s process first captures images from multiple cameras mounted on a pair of glasses: a primary “guide camera” with a wider field of view but lower resolution, and one or more “single detail cameras” with a narrower field of view but higher resolution. The filing noted that there may be up to nine single-detail cameras positioned around the frames.
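One way to see why the two camera types complement each other is angular resolution: how many pixels cover each degree of the scene. The Python sketch below models such a rig under stated assumptions. Only the layout (one guide camera plus up to nine detail cameras) comes from the filing as described here; the class names `Camera` and `GlassesCameraRig`, the pixel counts, and the fields of view are hypothetical illustrations.

```python
from dataclasses import dataclass, field
from typing import List

# Per the filing as reported: up to nine single-detail cameras around the frames.
MAX_DETAIL_CAMERAS = 9


@dataclass(frozen=True)
class Camera:
    name: str
    width_px: int    # horizontal resolution in pixels (hypothetical value)
    hfov_deg: float  # horizontal field of view in degrees (hypothetical value)

    @property
    def pixels_per_degree(self) -> float:
        # Angular resolution: how many pixels span one degree of the scene.
        return self.width_px / self.hfov_deg


@dataclass
class GlassesCameraRig:
    """Hypothetical model of one wide guide camera plus narrow detail cameras."""
    guide: Camera
    details: List[Camera] = field(default_factory=list)

    def __post_init__(self) -> None:
        n = len(self.details)
        if not 1 <= n <= MAX_DETAIL_CAMERAS:
            raise ValueError(f"expected 1 to {MAX_DETAIL_CAMERAS} detail cameras, got {n}")
        for cam in self.details:
            # Each detail camera trades field of view for angular resolution.
            if cam.hfov_deg >= self.guide.hfov_deg:
                raise ValueError(f"{cam.name}: field of view must be narrower than the guide's")
            if cam.pixels_per_degree <= self.guide.pixels_per_degree:
                raise ValueError(f"{cam.name}: must resolve more pixels per degree than the guide")


guide = Camera("guide", width_px=640, hfov_deg=100.0)
detail = Camera("detail-0", width_px=640, hfov_deg=32.0)
rig = GlassesCameraRig(guide, [detail])
print(guide.pixels_per_degree, detail.pixels_per_degree)  # 6.4 20.0
```

With the same sensor width in this illustration, narrowing the field of view roughly triples the pixels available per degree, which is the detail the guide camera lacks.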

The images taken by the guide camera are “upsampled,” or scaled up to a higher resolution. Meanwhile, the images from the single-detail cameras are “tiled,” combining multiple perspectives of the scene into one image. According to the filing, the “resulting overlapped detail image can capture the scene in high quality from all different views.”
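The two preparation steps can be sketched in a few lines of plain Python. This is a minimal illustration, not Meta’s implementation: it assumes grayscale images stored as lists of rows, uses simple nearest-neighbour upsampling (which adds pixels but no new detail), and assumes each detail image’s position on the shared canvas is already known, for example from calibration. The function names `upsample_nearest` and `tile_details` are hypothetical.

```python
from typing import List, Tuple

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def upsample_nearest(img: Image, factor: int) -> Image:
    """Nearest-neighbour upsampling: each pixel becomes a factor x factor block.
    The image gets more pixels, but no new detail is created."""
    out: Image = []
    for row in img:
        wide = [px for px in row for _ in range(factor)]
        for _ in range(factor):
            out.append(list(wide))
    return out


def tile_details(tiles: List[Tuple[Image, int, int]],
                 height: int, width: int) -> Tuple[Image, List[List[int]]]:
    """Place each (image, top, left) detail tile on one canvas, averaging overlaps.
    Returns the overlapped detail image and a per-pixel count of covering tiles."""
    acc = [[0.0] * width for _ in range(height)]
    count = [[0] * width for _ in range(height)]
    for tile, top, left in tiles:
        for r, row in enumerate(tile):
            for c, px in enumerate(row):
                y, x = top + r, left + c
                if 0 <= y < height and 0 <= x < width:  # ignore out-of-canvas pixels
                    acc[y][x] += px
                    count[y][x] += 1
    canvas = [[acc[y][x] / count[y][x] if count[y][x] else 0.0 for x in range(width)]
              for y in range(height)]
    return canvas, count


guide_up = upsample_nearest([[0.0, 1.0], [1.0, 0.0]], 2)  # 2x2 -> 4x4
tiles = [([[1.0, 1.0], [1.0, 1.0]], 0, 0),  # detail tile at row 0, column 0
         ([[3.0, 3.0], [3.0, 3.0]], 0, 1)]  # overlaps the first tile at column 1
detail_img, coverage = tile_details(tiles, height=4, width=4)
print(detail_img[0], coverage[0])  # [1.0, 2.0, 3.0, 0.0] [1, 2, 1, 0]
```

The coverage counts record which parts of the wide guide view actually have high-resolution data, which a later fusion step would need to know.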

This image data is then fed to a machine learning model, such as a neural network, which reconstructs a smooth, high-quality final view across the full field of view of the scene. The aim is a smoother, low-latency viewing experience.
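A trained neural network is beyond a short example, so the Python sketch below swaps in a deliberately tiny substitute: a one-parameter model whose blend weight is fitted by least squares from a single hypothetical training pair. It then uses that weight to fold the overlapped detail image into the upsampled guide image, keeping the guide wherever no detail camera has coverage so the output still spans the full field of view. The function names, data, and blending rule are assumptions for illustration, not the model described in the patent.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def fit_blend_weight(guide_up: Image, detail: Image,
                     coverage: List[List[int]], target: Image) -> float:
    """Least-squares fit of alpha in: output = guide + alpha * (detail - guide),
    using only pixels covered by at least one detail camera."""
    num = 0.0
    den = 0.0
    for g_row, d_row, c_row, t_row in zip(guide_up, detail, coverage, target):
        for g, d, c, t in zip(g_row, d_row, c_row, t_row):
            if c:
                diff = d - g
                num += diff * (t - g)
                den += diff * diff
    return num / den if den else 0.0  # no covered pixels: keep the guide unchanged


def fuse(guide_up: Image, detail: Image,
         coverage: List[List[int]], alpha: float) -> Image:
    """Blend detail into the upsampled guide where detail exists; elsewhere keep the guide."""
    return [[g + alpha * (d - g) if c else g for g, d, c in zip(g_row, d_row, c_row)]
            for g_row, d_row, c_row in zip(guide_up, detail, coverage)]


guide_up = [[0.0, 0.0, 1.0, 1.0]]
detail = [[0.5, 0.2, 0.0, 0.0]]
coverage = [[1, 1, 0, 0]]
target = [[0.4, 0.16, 1.0, 1.0]]  # hypothetical ground-truth training image
alpha = fit_blend_weight(guide_up, detail, coverage, target)
output = fuse(guide_up, detail, coverage, alpha)
print(round(alpha, 6))  # 0.8
```

A real network would learn a spatially varying, nonlinear mapping from many images rather than one global weight; the sketch only shows what goes into the fusion step and what comes out.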

Meta has been working hard to keep up with the rest of Big Tech’s AI drive. The company released an early version of Llama 3, the latest version of its open-source large language model, in mid-April and has started embedding AI throughout its social media experiences.

The company’s push is far from over. In its recent earnings call, Meta noted that capital expenditures would “increase next year as we invest aggressively to support our ambitious AI research and product development efforts.” The company also raised this year’s capital expenditure guidance from $30 billion to $37 billion to a range of $35 billion to $40 billion. Investors were none too pleased with the lavish spending plans: shares tumbled 15% on the news, wiping billions of dollars off the company’s market cap.

As it stands, Meta’s monetization strategy and timeline for its AI are “somewhat vague, particularly in comparison to major tech competitors who are already monetizing generative AI services via cloud computing,” said Ido Caspi, research analyst at Global X ETFs.

But the company’s broad user base across its family of apps gives it plenty of opportunities to make money from these models, Caspi noted. Plus, he said, its access to robust data sets and its open-source approach can only benefit it in the long run. “We anticipate that Meta will emerge as one of the frontrunners in the AI race,” Caspi said.

This patent also signals that Meta’s mixed-reality technology could be a use case for its AI research, said Jake Maymar, AI strategist at The Glimpse Group.

As with its AI work, the company has invested billions in its metaverse technology with little revenue to show for it. But using machine learning to create a clearer picture and add new augmented reality capabilities, while slimming the form factor down from headsets to glasses, could be the company’s plan to make the metaverse experience more “frictionless,” Maymar said.

“The biggest hurdle for AR is the form factor,” said Maymar. “Ultimately, the first company that takes machine learning, computer vision, and neural networks and starts applying it to vision in a way that’s fashionable – it’s going to be a no-brainer.”