Snap Might Use AR to Make Reading Fun

The company’s patent for “enhanced reading” using AR glasses could create visualizations of what you’re reading.

Snap wants to help you get lost in a book.

The social media firm is seeking to patent “enhanced reading with AR glasses.” Snap’s filing describes a system that essentially creates real-time AR visuals while a person is reading a book.

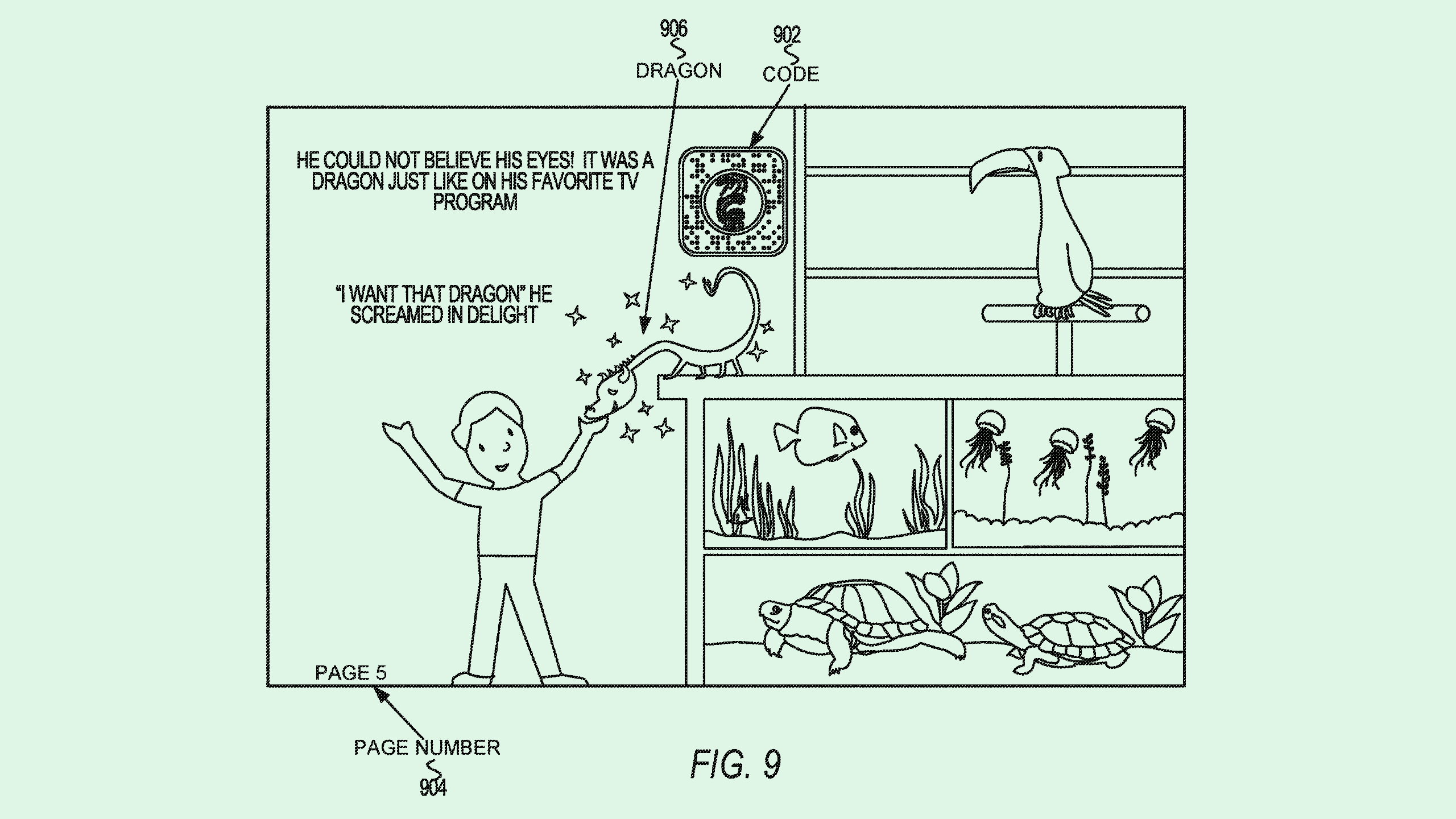

“The reader uses a wearable electronic device such as AR glasses that identify the printed codes included in the text and seamlessly provides virtual objects within a mixed reality environment for the reader to interact with while reading,” Snap said in its filing.

To bring pages to life, Snap’s system captures images of whatever page you’re on and detects any QR codes containing “code modules” that correspond to different interactive AR or VR objects or experiences.

One example Snap lays out is an AR experience in a science textbook that teaches about gases, allowing users to “interact with the supersized gas molecules to understand the relationship between volume and pressure.”

When the system comes across a code, the corresponding mixed reality objects activate and appear in the user’s display for the “estimated reading time duration” of the page. The system may estimate this reading time based on the user’s reading habits. Users can also shut off these experiences with explicit input, such as a hand wave.

After activating the virtual object, the system will enter sleep mode as a way to conserve energy, and reactivate when it needs to capture another QR code and activate another object. Users can also manually wake up this system with an “external user interface,” which in this case may refer to the Snapchat app.

One major problem that AR device developers face is that these devices lose power quite fast. This patent aims to address that issue in two ways: by using sleep modes so the system is active only when it needs to be, and by focusing on a single task rather than attempting to multitask.

“In my opinion, we don’t need multitasking in this particular (device) just yet,” said Mithru Vigneshwara, product director at AR firm QReal.io. “It doesn’t need to have everything loaded at the same time. Let’s get some really good singular use cases before we move on to multitasking.”

AR headsets and glasses face a number of obstacles in scaling and adoption, said Vigneshwara, short battery life being one of them. However, these devices also face issues with a limited field of view and a somewhat bulky user experience, he noted. “Some of these glasses aren’t necessarily seamless,” he said. “Field of view is still relatively small. That’s an extremely hard problem to solve.”

Because of these limitations – as well as the sheer cost of these devices – adoption of AR experiences will likely remain tied to smartphones for the foreseeable future, he said. “I’m a firm believer that (AR) will have to kind of be accessible via phone, because not everybody is going to have these devices,” he said.

That said, Snap has been working on smart glasses for years now, releasing its first pair of Spectacles in 2016. The company has sought patents for AR technology ranging from gaze tracking to prescription spectacles to AR contact lenses. Plus, it may be looking to ramp up production of its Spectacles project: In December, the company was awarded a $20 million grant from the State of California that will go towards expanding manufacturing of its AR devices.