Adobe Looks to AI to Fight Fake News

Adobe wants to fight disinformation one PDF at a time with its latest patent filing.


Adobe is working on its own way to fight disinformation.

The tech firm filed a patent application for “fact correction of natural language sentences” using data tables. Adobe’s method uses multiple AI models to break sentences down into pieces to analyze their accuracy.

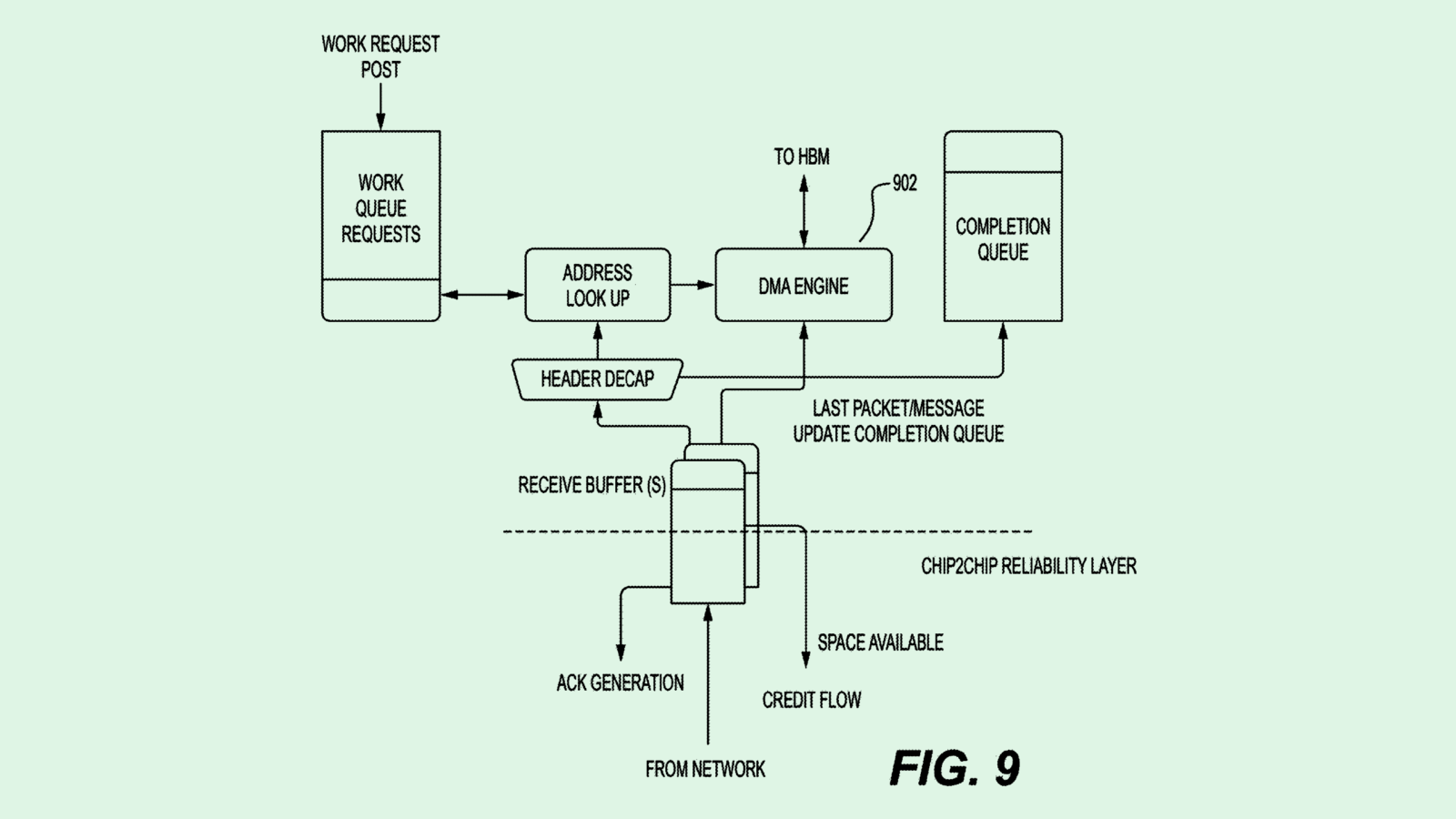

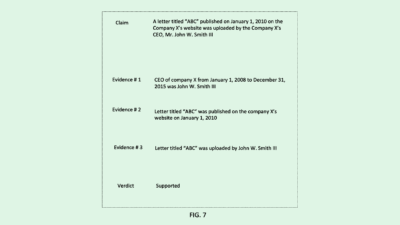

First, the system would “tokenize” a sentence it is fed, breaking it down into individual words or short phrases to analyze separately. Using the first of several machine learning models, it then identifies a “data table” containing information relevant to the sentence. These data tables may be “pre-processed” by the fact correction system, meaning the system can be tailored to specific topics.
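For readers curious how the first two steps might fit together, here is a toy Python sketch. It is not Adobe's method. The article does not describe the models, so simple keyword overlap stands in for the first machine learning model. The table names and contents (`TABLES`) and the helper names (`tokenize`, `table_tokens`, `retrieve_table`) are all hypothetical.

```python
import re

# Hypothetical "pre-processed" data tables: table name -> row key -> {column: value}.
# The patent's actual table format is not described in the article.
TABLES = {
    "mountains": {"Everest": {"height_m": "8849", "country": "Nepal"}},
    "rivers": {"Nile": {"length_km": "6650", "continent": "Africa"}},
}

def tokenize(sentence):
    """Split text into lowercase word tokens (a stand-in for a real tokenizer)."""
    return re.findall(r"[a-z0-9]+", sentence.lower())

def table_tokens(table):
    """Collect every row key, column name and cell value of a table as tokens."""
    tokens = set()
    for row_key, row in table.items():
        tokens.update(tokenize(row_key))
        for column, value in row.items():
            tokens.update(tokenize(column))
            tokens.update(tokenize(value))
    return tokens

def retrieve_table(sentence, tables):
    """Pick the table sharing the most tokens with the sentence.
    This keyword overlap is a stand-in for the first machine learning model."""
    sentence_tokens = set(tokenize(sentence))
    return max(tables, key=lambda name: len(sentence_tokens & table_tokens(tables[name])))
```

With these toy tables, `retrieve_table("Everest is in Nepal", TABLES)` selects `"mountains"`, because “everest” and “nepal” both appear in that table. A learned retriever would match on meaning rather than exact words, which is presumably why the filing calls for a model here.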

Using a second machine learning model, the system then determines whether any of the tokenized elements make the sentence false. Finally, the words or phrases identified as false are “masked,” and a third AI model predicts a replacement word or phrase that would make the sentence more accurate.
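The detect, mask and fill steps can be illustrated with another toy Python sketch, again under loud assumptions. The table `TABLE`, the `[MASK]` placeholder and all function names are hypothetical. Simple table lookups stand in for the second model (flagging false tokens) and the third model (predicting a replacement), so this shows only the shape of the pipeline, not how Adobe's models work.

```python
import re

MASK = "[MASK]"

# Hypothetical toy table: row key -> {column name: cell value}.
TABLE = {
    "Everest": {"country": "Nepal"},
    "K2": {"country": "Pakistan"},
    "Denali": {"country": "USA"},
}

def tokenize(sentence):
    """Split a sentence into word tokens, keeping the original case."""
    return re.findall(r"\w+", sentence)

def find_row(tokens, table):
    """Return the first row key that appears in the sentence, or None."""
    lowered = {t.lower() for t in tokens}
    for row_key in table:
        if row_key.lower() in lowered:
            return row_key
    return None

def detect_false_tokens(tokens, row_key, table):
    """Stand-in for the second model: flag (index, column) for every token that is
    a known value of some column but differs from this row's value in that column."""
    flagged = []
    for column, true_value in table[row_key].items():
        known_values = {row[column].lower() for row in table.values() if column in row}
        for i, token in enumerate(tokens):
            if token.lower() in known_values and token.lower() != true_value.lower():
                flagged.append((i, column))
    return flagged

def mask(tokens, flagged):
    """Replace each flagged token with the mask placeholder."""
    masked = list(tokens)
    for i, _ in flagged:
        masked[i] = MASK
    return masked

def fill(masked, flagged, row):
    """Stand-in for the third model: fill each mask with the table's value."""
    filled = list(masked)
    for i, column in flagged:
        filled[i] = row[column]
    return filled

def correct(sentence, table):
    """Run detect -> mask -> fill. Returns the sentence unchanged if no row matches;
    otherwise rebuilds it from tokens, so punctuation is dropped."""
    tokens = tokenize(sentence)
    row_key = find_row(tokens, table)
    if row_key is None:
        return sentence
    flagged = detect_false_tokens(tokens, row_key, table)
    return " ".join(fill(mask(tokens, flagged), flagged, table[row_key]))
```

Here `correct("Everest is located in Pakistan", TABLE)` masks “Pakistan” and fills in “Nepal”. The point of the sketch is the point Adobe makes about existing tools: a verifier alone would stop at flagging the sentence as false, while the masking step lets the system propose a fix.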

Adobe noted that determining the accuracy of news and identifying disinformation in environments like social media can be difficult, and the rapid-fire way content spreads on these platforms makes stopping disinformation even more arduous. “Existing solutions have limitations and drawbacks, as some act only as verification systems that can only indicate whether a statement is true or false,” the company said.

Big tech firms like Meta, Google and TikTok each have their own policies for fighting misinformation. But the AI algorithms behind those platforms have also fueled the misinformation problem, said Brian P. Green, director of technology ethics at the Markkula Center for Applied Ethics at Santa Clara University.

These firms’ drive to keep users engaged has created “an objective function which says maximize user engagement with no consideration of what is factual or non-factual, and has done a lot in promoting misinformation and extremist content,” he said.

But this patent is from Adobe, a software firm, rather than a platform that provides the tinder to make misinformation spread like wildfire. At first glance, the company’s interest in this tech doesn’t necessarily make sense. However, Green said, “It might be the actual distance between them and the problem that has given them the desire and motivation to do something like this.”

Tech like this could also be quite lucrative for the company: Adobe could license such a system to other platforms or integrate it into a PDF app like Acrobat, creating a powerful fact-checking tool that could quickly find errors in everything from academic papers to legal filings.

Plus, this isn’t the first time we’ve seen Adobe explore tools that seem altruistic: the company has also filed a patent for a computer vision-based “diversity auditing” tool. But both patents share the potential for unintended consequences.

Like all AI-based systems, these tools are only as good as the data they’re built on, and they can be leveraged for the opposite of their intended purpose, Green said. “The main vulnerabilities of course would be making sure the data is correct in the first place, and then making sure that data doesn’t get hijacked or corrupted at some point,” he said.