Facebook Wants to Know What You Love (plus more from Amazon & Ford)

Taste profiles of users, sentiment analysis from Alexa, & autonomous vehicles that message pedestrians.



1. Facebook – determining user affinities for brands

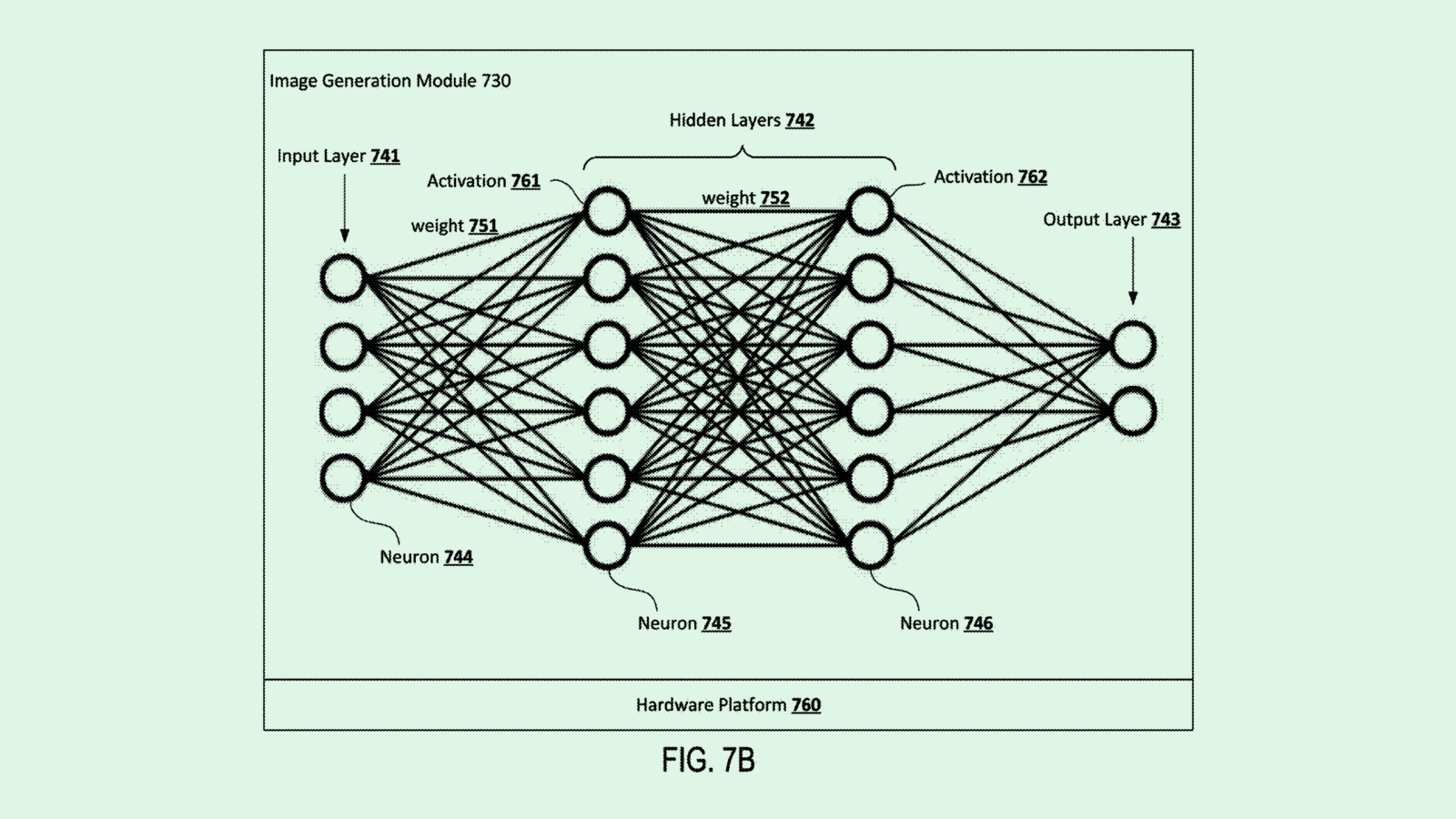

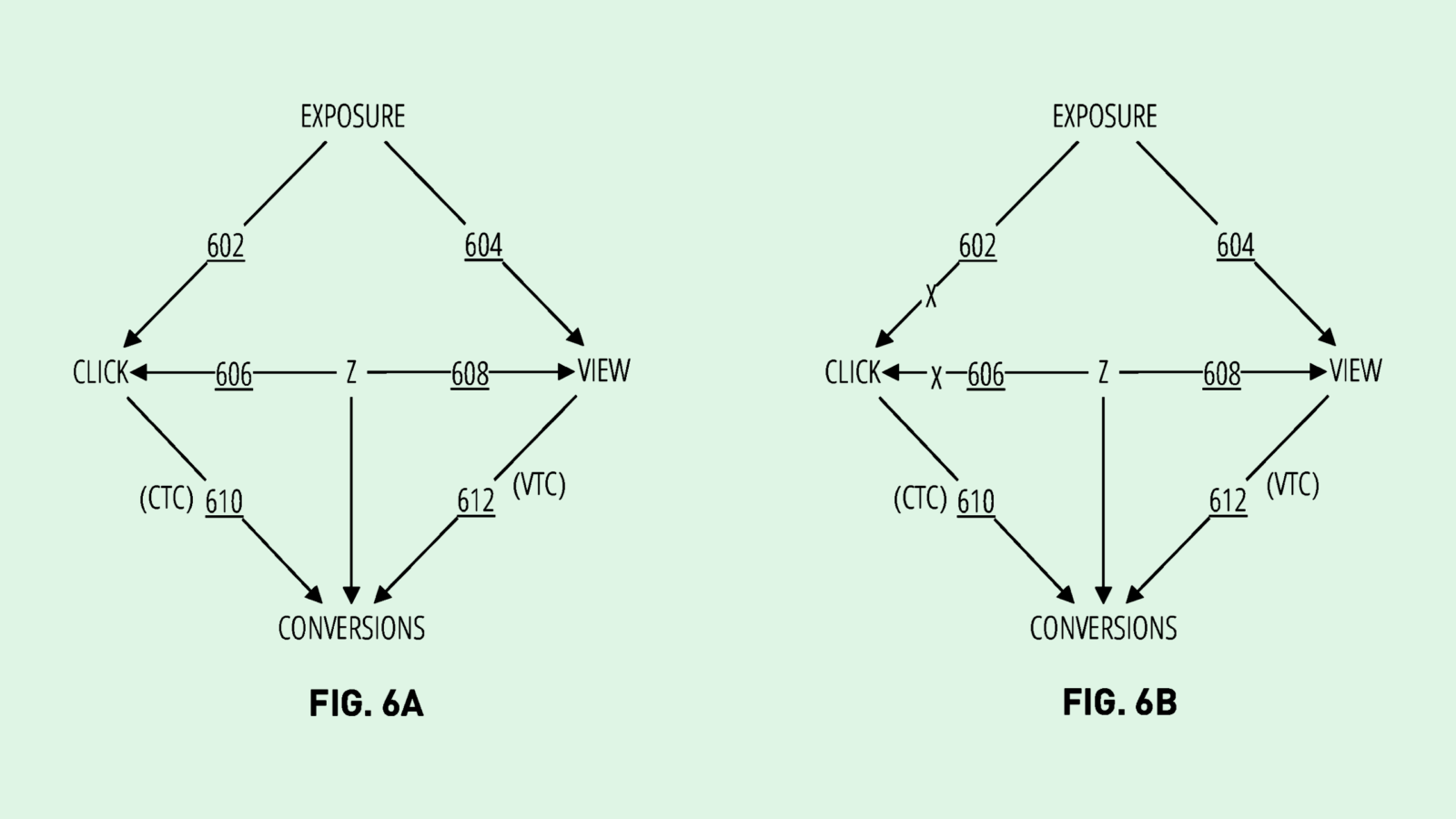

Facebook (‘Meta’) wants to analyse the images we interact with in order to understand the brands / products we love, and in turn update taste profiles of each user.

As outlined in the filing, up until recently, Facebook determined the things we like in two ways: 1) what we explicitly highlight as our interests (e.g. following hashtags on Instagram); and 2) the information attached to a piece of content we interact with (e.g. the accounts tagged or the caption).

Facebook’s next step is to understand our affinities from the images we interact with, even if there is no information associated with them.

To do this, Facebook will identify items within images we interact with, and then use machine learning models to estimate the probability that the item is associated with a particular entity (e.g. is this t-shirt from SUPREME?).
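To make the "estimate the probability" step concrete, here is a minimal sketch. It assumes a vision model has already produced a raw score per candidate brand for one detected item; the scores, the `threshold` value and the function names are all hypothetical and not taken from the filing. A softmax turns the scores into probabilities, and the taste profile is only updated when the top brand is confident enough.

```python
import math
from collections import defaultdict

def softmax(scores):
    """Turn raw per-brand scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {brand: math.exp(s - peak) for brand, s in scores.items()}
    total = sum(exps.values())
    return {brand: e / total for brand, e in exps.items()}

def update_taste_profile(profile, brand_scores, threshold=0.6):
    """Add weight to the most likely brand, but only above a confidence threshold."""
    probs = softmax(brand_scores)
    brand, p = max(probs.items(), key=lambda kv: kv[1])
    if p >= threshold:
        profile[brand] += p
    return probs

profile = defaultdict(float)
# Hypothetical scores a model might emit for a t-shirt found in a photo.
probs = update_taste_profile(profile, {"Supreme": 3.0, "Nike": 1.0, "unknown": 0.5})
```

The threshold is the interesting design choice here: set it too low and the profile fills up with guesses, set it too high and most images contribute nothing.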

While this isn’t particularly surprising, the implications are pretty interesting. For instance, when we post a selfie on Instagram Stories, Facebook could analyse the photo to work out the brands we’re wearing and the items we own in our rooms, and then build a taste profile of us. These items could help place us into relevant sub-niches for more targeted advertising or help inform the feed algo on what content to show us.

This filing is another example of a maxim I’ve come to believe in while doing Patent Drop – if a piece of data can be extracted and analysed, it eventually will be. The marginal cost of understanding a person more deeply is getting lower through technological advances (in this instance, computer vision), while the rewards of that understanding are high – more targeted adverts or higher user engagement.

2. Amazon – sentiment aware voice interface

Most voice recognition interfaces currently work by converting your speech into text, and then processing the words to try to understand a user’s command.

Amazon want to take their voice interface to the next level by understanding a user’s sentiment when they speak to Alexa.

The use-case outlined in the patent application is pretty relatable to anyone who’s interacted with an Alexa. Let’s say you tell Alexa to “open YouTube”, but it hears “open U2” and starts playing a U2 album.

You’d probably get frustrated and repeat the command to Alexa, maybe slightly altering the intonation.

By detecting the sentiment in a user’s voice, Alexa could recognize that it made a mistake and ask a clarifying question – “Did you want to open U2?” – instead of just repeating the action again. If the user responds no, Alexa could ask the user to rephrase the request, or even suggest an alternative that phonetically sounds like the initial request – “Oh, did you mean YouTube?”
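The clarification flow above can be sketched as a small decision function. This is an illustration only: it assumes the speech recogniser returns a ranked list of guesses (`n_best`, most likely first) and that a separate step has already flagged the user as frustrated. Both inputs, and the `clarify` function itself, are hypothetical rather than anything described in the application.

```python
def clarify(n_best, frustrated, confirmed=None):
    """Pick Alexa's next reply.

    n_best:     recogniser guesses, most likely first (assumed input)
    frustrated: whether sentiment detection flagged the user as frustrated
    confirmed:  the user's answer to "Did you want to open X?", if asked
    """
    top = n_best[0]
    if not frustrated:
        return f"Opening {top}"
    if confirmed is None:
        # Frustration detected: check before repeating the same action.
        return f"Did you want to open {top}?"
    if confirmed:
        return f"Opening {top}"
    if len(n_best) > 1:
        # Offer the next-best guess as an alternative.
        return f"Oh, did you mean {n_best[1]}?"
    return "Sorry, could you rephrase that?"

print(clarify(["U2", "YouTube"], frustrated=True))
print(clarify(["U2", "YouTube"], frustrated=True, confirmed=False))
```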

In order to detect sentiment, Amazon will look at a number of different data points. Firstly, it will look at exactly what a user is saying. For instance, if a user tells Alexa to “shut up”, Alexa would treat insults like this as indicators of frustration or anger. Secondly, it will study the acoustic characteristics of what’s being said. If a user starts speaking in an elevated tone, or makes sounds such as “ugh”, this may indicate anger. Lastly, Amazon could study image data captured by a camera to look at a user’s facial expression.
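As a rough illustration of how those three signals might be combined, the sketch below scores each one between 0 and 1 and takes a weighted average, skipping the camera signal when no camera is available. Everything in it is an assumption made for the example: the phrase list, the `pitch_ratio` and `frown_score` inputs, and the weights are hypothetical, and the application does not say Amazon combines the signals this way.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Illustrative phrases only; matched as whole words to avoid hits inside "laugh".
FRUSTRATION_PATTERN = re.compile(r"\b(shut up|ugh)\b")

@dataclass
class Turn:
    transcript: str
    pitch_ratio: float                    # pitch vs. the speaker's baseline, 1.0 = normal
    frown_score: Optional[float] = None   # 0..1 from a camera, None if unavailable

def frustration_score(turn: Turn) -> float:
    """Weighted average of lexical, acoustic and (optional) visual signals."""
    lexical = 1.0 if FRUSTRATION_PATTERN.search(turn.transcript.lower()) else 0.0
    acoustic = min(max(turn.pitch_ratio - 1.0, 0.0), 1.0)  # only raised pitch counts
    signals = [(0.5, lexical), (0.3, acoustic)]
    if turn.frown_score is not None:
        signals.append((0.2, turn.frown_score))
    total_weight = sum(w for w, _ in signals)
    return sum(w * s for w, s in signals) / total_weight

calm = Turn("open YouTube", pitch_ratio=1.0)
angry = Turn("Ugh, shut up", pitch_ratio=1.6, frown_score=0.9)
```

Dividing by the total weight keeps the score on the same 0 to 1 scale whether or not a camera is present, so a single cut-off can be used for both kinds of device.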

While studying a user’s sentiment might make the UX of interacting with Alexa less frustrating, we’re also giving Amazon a peek into our emotional worlds. For instance, Amazon could understand where we’re at psychologically each time we make a request. Let’s say you’re having a bad day and you tell Alexa to order some wine. When hearing your voice, Alexa might detect that you’re feeling unhappy. So when it takes your wine order, it might suggest other products that could lift your mood – “Would you like me to order an ice cream with that?”.

Combining sentiment analysis with e-commerce could make a powerful recommendation engine.

3. Ford – autonomous vehicles communicating with road users

Ford is thinking about how autonomous vehicles could communicate with road users that are impacted by the movements of a car – for instance, pedestrians, cyclists, bikers etc.

In the patent application, Ford outlines that it’s looking to use augmented reality to communicate the movements of an autonomous vehicle to road users who are on their mobile. For instance, if you’re about to cross a road, your smartphone could tell you that the vehicle is not going to stop. Ford is looking to do this by integrating with third-party map providers such as Apple Maps & Google Maps.

This might seem unnecessary at first reading. For instance, it’s generally considered unsafe to be on your phone while crossing a road etc lol.

But this is pretty interesting for a number of reasons. Firstly, the integration with map providers is a smart way for autonomous vehicles to be able to communicate with nearby pedestrians. Ignoring the augmented reality component, our maps could in theory take a much more proactive role in keeping us safe if they start to communicate with vehicles.

Secondly, when we have AR glasses, augmented reality warnings from autonomous vehicles will be extremely useful. If a vehicle is about to turn in your direction, you’ll be notified before you see the vehicle turning. Having this kind of communication system is smart because it also takes a preventative approach to reducing the number of accidents that could occur with autonomous vehicles. We become less reliant on the vehicles being able to detect and quickly respond to potential accidents, because a communication layer is opened up between the vehicles and the people at risk of being in an accident.

It’s fascinating thinking about the UX of these future interactions – this patent application gives a small peek into a wider system of thought we’ll have to consider as we build out these technologies.