Humane Creates Wearable Lasers (plus more from Nvidia & Ford)

laser projecting wearables, synthetic audiences & autonomous delivery vehicles

1. Humane – Laser Projecting Wearable

If you haven’t heard about Humane, check out this teaser video released a few weeks ago.

Humane is a new computing company started by Apple OGs Imran Chaudhri and Bethany Bongiorno. The company has been shrouded in mystery for a while, but hype is building around what they're working on.

To peel back the mystery a little, I dug into an old patent filing that was made public in April 2021. I’ll also be including Humane in the list of companies I’m tracking.

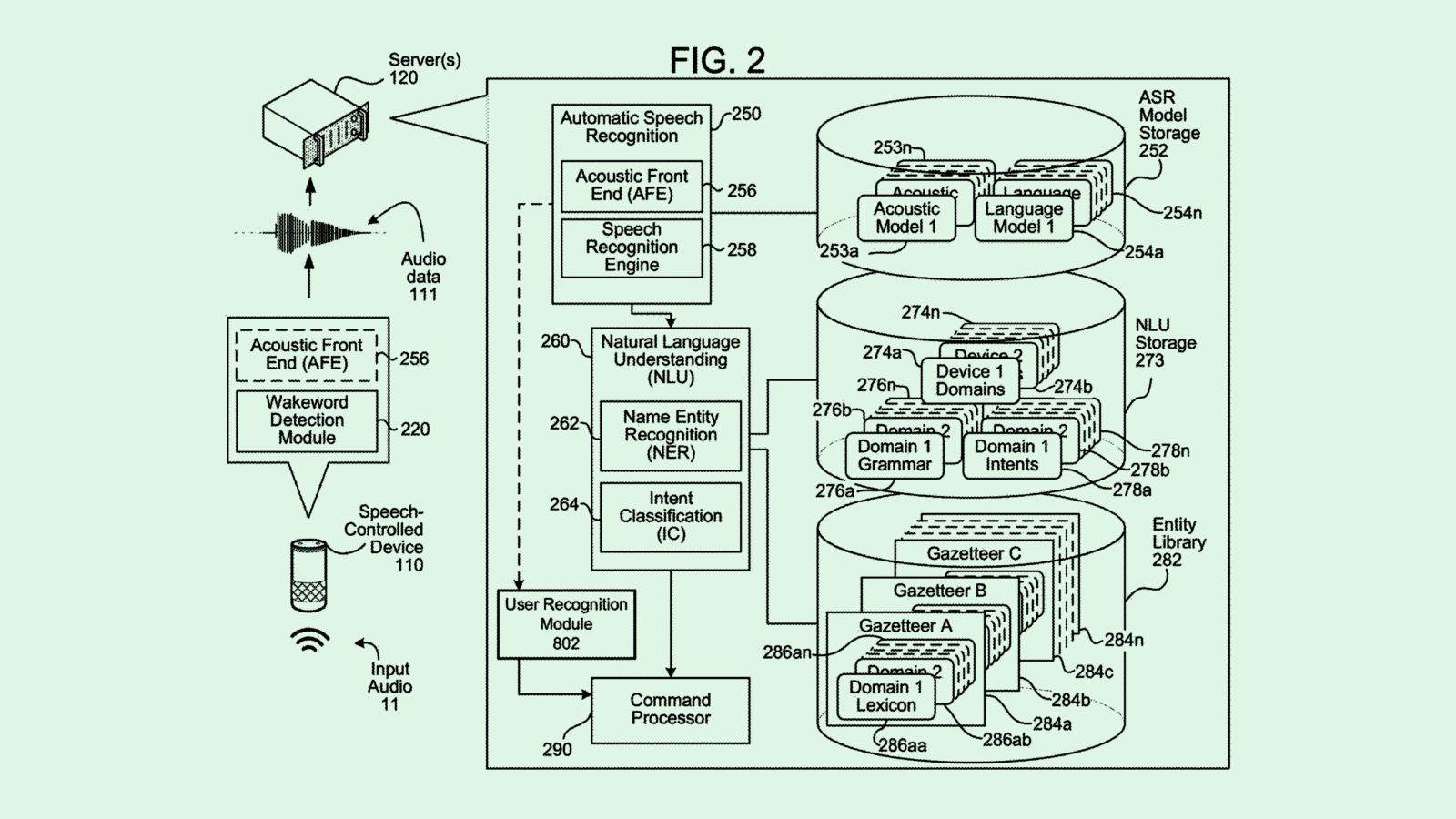

So Humane looks to be building a computing device that is worn on clothing (e.g. attached to a shirt with a magnet), projects an interface onto surfaces, uses cameras / microphones to capture context, and uses a depth sensor to track hand gestures.

Reading about some of the imagined use cases might make this come to life a bit.

Firstly, the device can quickly start capturing photos and videos from the user's perspective. Humane plans for people to use touch gestures or audio commands to start capturing an event. Imagine you're at a concert watching Kendrick Lamar perform. You tap the device attached to your shirt and it starts recording the performance, leaving your hands free. Because it's a clothing wearable rather than a pair of 'glasses', the device feels like it could embed itself more deeply into everyday life.

One curious use-case that Humane mention in the filing is using the camera to capture deeper transaction data. So if you were making a purchase, the Humane device could capture audio and visual context around the transaction – the people involved, the items, the location. Not entirely sure why this would be useful to the user, but I can imagine it being valuable data that payment companies and ad platforms would love to mine.

Other interesting use-cases for the Humane device revolve around the laser projector.

For example, imagine you're cooking. The camera on the device could identify the objects on the kitchen counter, and the projector could then overlay instructions for each ingredient, such as showing exactly how thinly to slice a piece of meat.

Or let’s say you’re walking. The device could project turn-by-turn instructions onto your palm, rather than you needing to stare at your phone.

So, is this a big deal?

There's been some criticism of the concept.

My take: it is important. Providing an AR experience via laser projectors instead of glasses feels more 'humane'. We generally retain eye contact with other people, we have more control over when it's on, and the form-factor acts as a constraint against visual overload.

More broadly, I think we need competing ideas for what future computing interactions should look like, especially ones that aren't coming from FAANG companies. Between the Stem Player and the Humane device, it feels like we're at the start of a renaissance for consumer hardware. And at this moment, we ought to be rethinking our relationship to screens, how we choose when to be 'present' in the natural world and when not to be, and how we move with our devices.

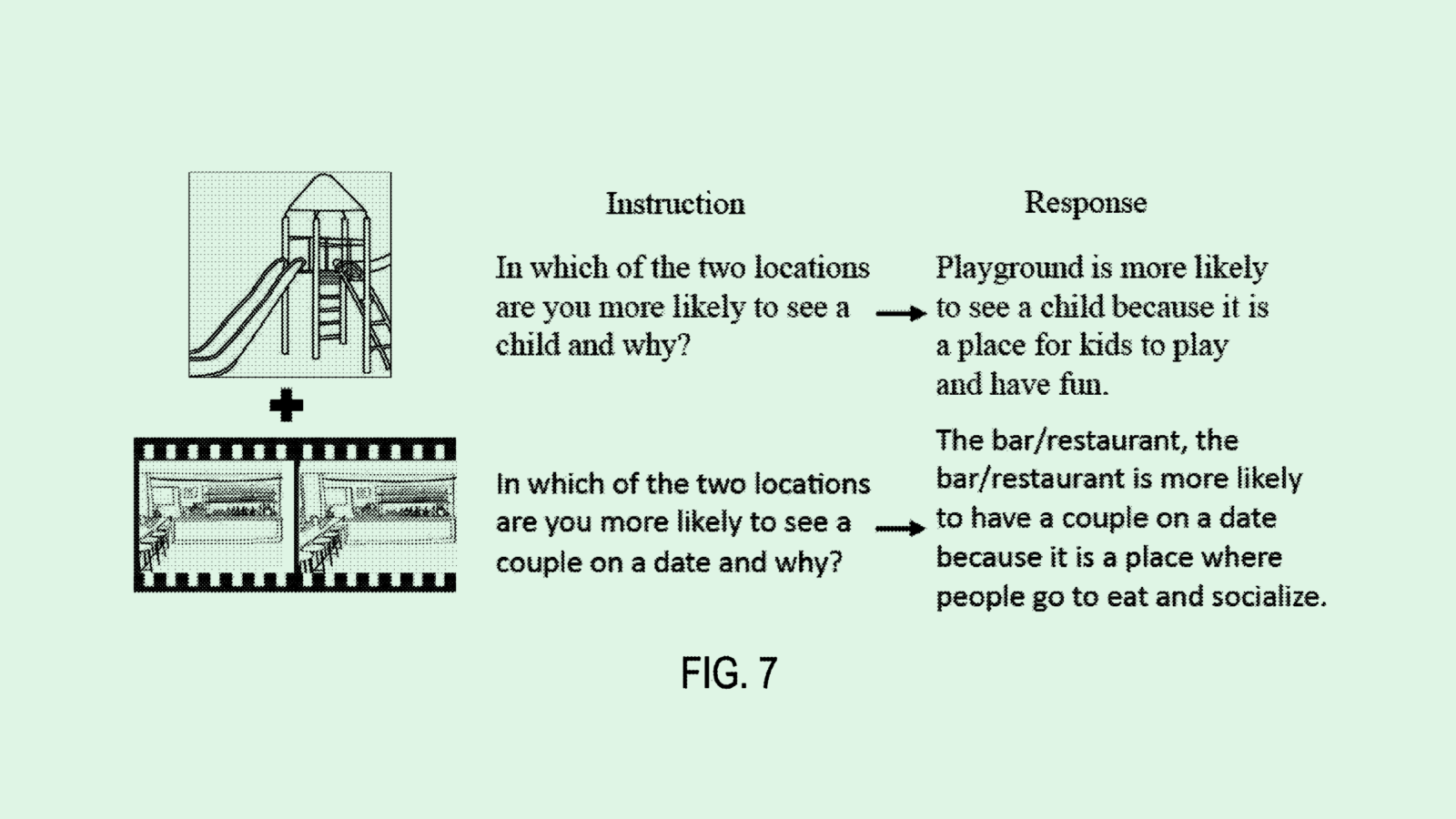

2. Nvidia – synthetic crowd responses

This is a pretty fun application.

Nvidia is looking to generate real-time, synthetic crowd responses to heighten the experience of events (e.g. a sports game), using sensors and event properties. The output is an audio signal that attempts to emulate how a real crowd would react.

The audio could be generated at an event itself, or for remote viewers of an event.

This application looks to be inspired by the pandemic, when sporting events barred fans from attending and the games arguably lacked some of the 'oomph' that real audiences provide.

Nvidia’s machine learning model would use inputs such as: ball position, player positions, specific in-game events (e.g. penalties), the current score, and more.
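
The filing describes a trained machine learning model; the sketch below is not that. It is a hypothetical, rule-based stand-in, assuming made-up names (`GameState`, `crowd_intensity`, `crowd_audio`) and hand-picked weights in `EVENT_BOOST`, just to show how inputs like ball position, score and in-game events could be mapped to a crowd "intensity" that then scales an audio signal.

```python
import random
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical weights: how much each in-game event excites the crowd.
# A real system would learn these from data rather than hard-code them.
EVENT_BOOST = {"goal": 0.6, "penalty": 0.4, "near_miss": 0.3, "foul": 0.15}


@dataclass
class GameState:
    ball_x: float                # 0.0 = home goal line, 1.0 = away goal line
    home_score: int
    away_score: int
    event: Optional[str] = None  # e.g. "goal" or "penalty"; None if nothing notable


def crowd_intensity(state: GameState) -> float:
    """Map game-state features to a crowd excitement level in [0, 1]."""
    # Baseline murmur of 0.2 at midfield, rising to 0.5 as the ball nears either goal.
    distance_to_nearest_goal = min(state.ball_x, 1.0 - state.ball_x)
    tension = 0.2 + 0.3 * (1.0 - 2.0 * distance_to_nearest_goal)
    # A close score keeps the crowd on edge.
    if abs(state.home_score - state.away_score) <= 1:
        tension += 0.1
    # Specific in-game events add a spike on top.
    tension += EVENT_BOOST.get(state.event, 0.0)
    return max(0.0, min(1.0, tension))


def crowd_audio(intensity: float, n_samples: int = 8, seed: int = 0) -> List[float]:
    """Toy audio signal: uniform noise whose amplitude scales with intensity."""
    rng = random.Random(seed)
    return [intensity * rng.uniform(-1.0, 1.0) for _ in range(n_samples)]


quiet = GameState(ball_x=0.5, home_score=0, away_score=3)
roar = GameState(ball_x=0.98, home_score=1, away_score=1, event="goal")
print(crowd_intensity(quiet))  # 0.2
print(crowd_intensity(roar))   # 1.0 (clamped)
```

In a learned version, the hand-tuned weights would be replaced by a model trained on recordings of real crowds, and the noise generator by something that produces realistic cheers, groans and chants.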

Besides prepping for the next pandemic, Nvidia's technology could be extremely useful in video games (e.g. FIFA crowd reactions) or for enhancing remote gaming competitions (e.g. an esports championship).

3. Ford – smart containers for moving goods

Ford is re-imagining how autonomous vehicles could be used to deliver goods to people.

The vehicles will carry containers that have heating / cooling capabilities, security features to minimise the chance of theft, assistance for loading / unloading, and GPS tracking for positional awareness.

When someone puts an item in a container, the container will be programmed to include data such as the customer name and what the item actually is. When the container reaches the customer’s address, the customer will be presented with a code to access the container, and a map to be able to identify the correct container. If a container is improperly tampered with, an alarm will sound and a notification will be sent to a third party.
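
The container flow above (record the customer and item, hand the recipient an access code, raise an alarm on tampering) can be sketched as a small state machine. This is a hypothetical illustration, not Ford's design: the `SmartContainer` class, its methods, the six-digit single-use code and the `notify_third_party` callback are all assumptions made for the example.

```python
import secrets
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class SmartContainer:
    container_id: str
    notify_third_party: Callable[[str], None]  # hypothetical hook, e.g. a security service
    customer_name: Optional[str] = None
    item: Optional[str] = None
    _access_code: Optional[str] = field(default=None, init=False, repr=False)
    locked: bool = True
    alarm_on: bool = False

    def load(self, customer_name: str, item: str) -> str:
        """Record who the item is for and what it is; return a one-time access code."""
        self.customer_name = customer_name
        self.item = item
        self._access_code = f"{secrets.randbelow(10**6):06d}"
        self.locked = True
        return self._access_code

    def unlock(self, code: str) -> bool:
        """Open the container only if the recipient presents the right code."""
        if self._access_code is not None and secrets.compare_digest(code, self._access_code):
            self.locked = False
            self._access_code = None  # codes are single use in this sketch
            return True
        return False

    def report_forced_entry(self) -> None:
        """Called by a (hypothetical) sensor when the container is opened without a valid code."""
        self.alarm_on = True
        self.notify_third_party(f"Tamper alert on container {self.container_id}")


alerts: List[str] = []
box = SmartContainer("C-07", notify_third_party=alerts.append)
code = box.load("Alex", "frozen groceries")
print(box.unlock("wrong"))  # False: code doesn't match
print(box.unlock(code))     # True: correct code opens the container
print(box.unlock(code))     # False: the code was single use

other = SmartContainer("C-08", notify_third_party=alerts.append)
other.report_forced_entry()
print(alerts)               # ['Tamper alert on container C-08']
```

`secrets.compare_digest` is used so that checking a guessed code takes the same time whether or not the first digits match, which makes codes harder to brute-force by timing.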

With programmable climate control, secure item delivery and autonomous shipping, the vehicles could be an efficient way to deliver items for both consumers and businesses. Without the need for a driver, delivery costs could also come down significantly. In fact, it's entirely possible that the whole delivery journey (from loading to unloading) wouldn't require any staff. Senders could load their item, enter the delivery instructions, and the vehicle would then notify the recipient when their package is ready for retrieval.