Intel Patent Could Help Build Security Trust in Enterprise AI

Intel’s recent patent could help track down when AI slips up after it’s shipped out.


Intel wants to give its AI a thorough inspection.

The company filed a patent application for a method to “verify the integrity of a model.” Intel’s system aims to ensure that machine learning models are secure after they’ve been deployed.

“After the model is deployed to the cloud service provider, an attacker can tamper with the model so that the model that is distributed to the implementing devices is not the same as the trained model,” Intel said in the filing.

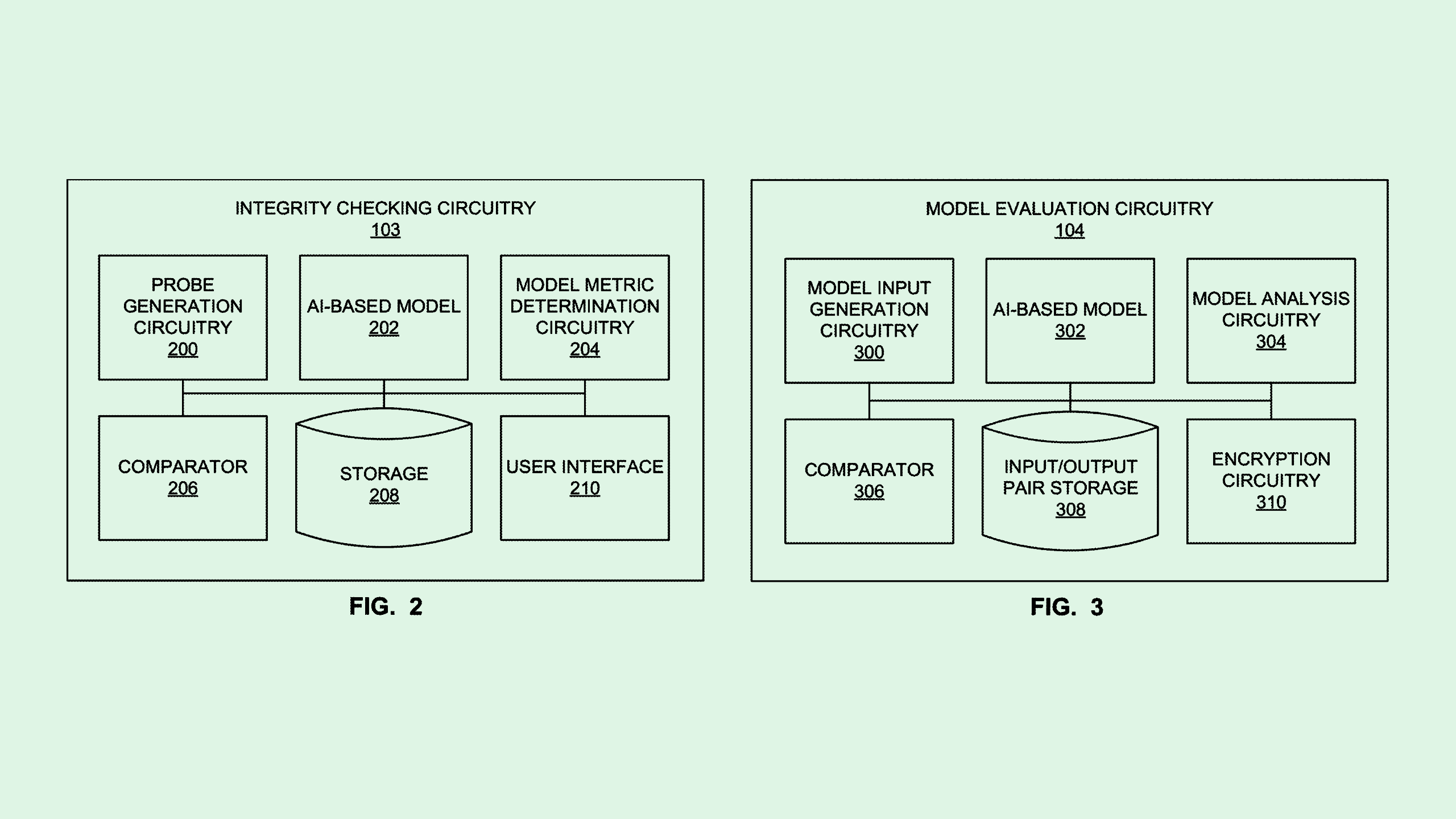

Intel’s method puts AI models through two phases of evaluation: one performed offline, and another performed online in a “trusted execution environment.” In the offline phase, the model is fed a set of inputs meant to “excite” its neurons, exercising its capabilities comprehensively. The resulting outputs are then stored for use as a reference.

In the online testing phase, the same inputs are fed to the deployed model again, and its outputs are compared with the reference outputs recorded in the offline phase. If the values don’t match, Intel noted, the model may have been tampered with.
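To make the two-phase idea concrete, here is a minimal Python sketch, assuming a model can be treated as a plain function from an input vector to an output vector. The filing, as described here, does not say how probe inputs are chosen or how strictly outputs are compared, so the random probes, the `tol` tolerance, and every name below (`make_probe_inputs`, `record_reference`, `verify_model`, `make_linear_model`) are hypothetical illustrations. The sketch also does not model the trusted execution environment; it only shows the record-then-compare logic.

```python
import math
import random
from typing import Callable, List, Sequence

Vector = List[float]
Model = Callable[[Sequence[float]], Vector]

def make_probe_inputs(n_inputs: int, dim: int, seed: int = 0) -> List[Vector]:
    """Hypothetical probe generator: seeded random vectors stand in for
    inputs crafted to 'excite' many neurons."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(n_inputs)]

def record_reference(model: Model, probes: List[Vector]) -> List[Vector]:
    """Offline phase: run each probe through the trusted model and store the outputs."""
    return [list(model(p)) for p in probes]

def verify_model(model: Model, probes: List[Vector], reference: List[Vector],
                 tol: float = 1e-6) -> bool:
    """Online phase: re-run the probes and compare against the stored reference.
    Any difference larger than `tol` (an assumed threshold) counts as possible tampering."""
    if len(probes) != len(reference):
        return False
    for probe, expected in zip(probes, reference):
        actual = model(probe)
        if len(actual) != len(expected):
            return False
        if any(not math.isclose(a, e, rel_tol=0.0, abs_tol=tol)
               for a, e in zip(actual, expected)):
            return False
    return True

def make_linear_model(weights: List[List[float]]) -> Model:
    """Toy stand-in for a deployed model: a single matrix-vector product."""
    def model(x: Sequence[float]) -> Vector:
        return [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    return model

trusted_weights = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.4]]
probes = make_probe_inputs(n_inputs=8, dim=3)
reference = record_reference(make_linear_model(trusted_weights), probes)

# Simulate tampering after deployment by nudging a single weight.
tampered_weights = [row[:] for row in trusted_weights]
tampered_weights[1][2] += 0.05

print(verify_model(make_linear_model(trusted_weights), probes, reference))   # True
print(verify_model(make_linear_model(tampered_weights), probes, reference))  # False
```

A small absolute tolerance is used instead of exact equality on the assumption that the same model run on different hardware may produce slightly different floating-point results; a stricter check could hash the outputs instead.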

Because AI models often have many layers, Intel’s filing notes that the system uses a tactic called “layer-specific probing” for additional security. In other words, the test is run on the model layer by layer, checking that each layer behaves as expected so that no tampering slips through the cracks.
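One way to picture layer-by-layer probing is to record every layer’s output offline rather than only the final output, then check each layer online and report the first one that diverges. The Python sketch below assumes that reading; the article does not describe the filing’s actual per-layer procedure, and the toy `dense` layers, the `tol` threshold, and the helper names (`trace_activations`, `first_mismatched_layer`) are hypothetical.

```python
import math
from typing import Callable, List, Optional, Sequence

Vector = List[float]
Layer = Callable[[Sequence[float]], Vector]

def dense(weights: List[List[float]], activate: bool = True) -> Layer:
    """Toy fully connected layer: matrix-vector product, optionally followed by tanh."""
    def layer(x: Sequence[float]) -> Vector:
        out = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
        return [math.tanh(v) for v in out] if activate else out
    return layer

def trace_activations(layers: List[Layer], x: Sequence[float]) -> List[Vector]:
    """Run x through the network and keep every layer's output."""
    trace: List[Vector] = []
    h: Vector = list(x)
    for layer in layers:
        h = layer(h)
        trace.append(h)
    return trace

def first_mismatched_layer(layers: List[Layer], probes: List[Vector],
                           reference: List[List[Vector]],
                           tol: float = 1e-6) -> Optional[int]:
    """Online phase: compare each layer's output with the offline reference.
    Returns the index of the first layer that diverges, or None if all match."""
    for probe, expected_trace in zip(probes, reference):
        actual_trace = trace_activations(layers, probe)
        for idx, (act, exp) in enumerate(zip(actual_trace, expected_trace)):
            if len(act) != len(exp) or any(
                not math.isclose(a, e, rel_tol=0.0, abs_tol=tol)
                for a, e in zip(act, exp)
            ):
                return idx
    return None

W0 = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.4]]
W1 = [[0.7, -0.6], [0.2, 0.9]]
W2 = [[1.0, -1.0]]
trusted_layers = [dense(W0), dense(W1), dense(W2, activate=False)]

probes = [[1.0, 0.5, -0.5], [0.2, -0.3, 0.9]]
# Offline phase: record per-layer reference activations for each probe.
reference = [trace_activations(trusted_layers, p) for p in probes]

# Tamper with the middle layer only (index 1).
W1_tampered = [row[:] for row in W1]
W1_tampered[0][0] += 0.1
tampered_layers = [dense(W0), dense(W1_tampered), dense(W2, activate=False)]

print(first_mismatched_layer(trusted_layers, probes, reference))   # None
print(first_mismatched_layer(tampered_layers, probes, reference))  # 1
```

Checking intermediate outputs, rather than only the final one, lets the check point to where a model was altered; because layers before the tampered one receive identical inputs, their recorded outputs still match.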

Intel’s tech hits on a growing issue as AI adoption continues its meteoric rise: observing models after deployment to make sure they don’t slip up. “This is what CIOs should be thinking about – that they need to have a trusted environment for AI,” said Brian Jackson, principal research director at Info-Tech Research Group.

These capabilities may be useful for testing more than just tampering, Jackson noted. They could also help keep a close eye on model degradation, such as when AI starts to hallucinate or exhibit bias.

And in order to establish trust in AI, technology leaders are “going to have to show the business users that there’s a lot of safeguards in place, that there’s a lot of precautions taken, that they’re constantly measuring the outputs and making sure that they’re in line with expectations,” Jackson said.

But AI development and deployment may be happening too fast for the safeguards to keep up, he said. AI tools are being rapidly adopted, not only by larger organizations and enterprises, but by the broader public via easily accessible large language models. Some of these tools have already shown evidence of hallucination and data leaks.

“There’s questions about what data is leaking out and getting into models that are publicly available, and perhaps even what business processes are being affected, because people are making decisions based on AI models that are out there in the world,” Jackson said.

Tackling this problem could help Intel differentiate itself from competitors, especially as it’s being trounced in the AI chip market by the likes of Nvidia. Jackson said this could be an “extension of a strategy that Intel has deployed in other places”: showcasing that its processors have built-in security that can make AI safer.