Nvidia Catches Cheaters (plus more from Uber & Microsoft)

Cheating detection, misusing scooters, and emotional understanding & generation

1. Nvidia – cheating detection using neural networks

With the rise of e-sports and significant cash rewards for tournament winners, the cost of cheating in games is more significant than ever.

There have been a number of high-profile cases of cheating in e-sports. For instance, in 2019, e-sports athlete Jarvis Kaye was banned from Fortnite after posting a video in which he used an aimbot, a tool that gives users perfect accuracy when shooting.

In this latest patent application, Nvidia proposes using neural networks to help determine the probability that a user is cheating when playing a certain video game.

One approach that Nvidia outlines is looking at the video data of a live-streaming player and correlating it with the gameplay. For example, if a user looks away from the game display while a number of actions are performed in the game, this would indicate that those actions are highly unlikely to have been performed by that player.
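To make the idea concrete, here is a minimal sketch in Python of that kind of correlation check. It assumes a gaze tracker has already produced the intervals during which the player looked away, and that the game logs a timestamp for each action. The names `Interval`, `actions_while_looking_away` and `away_action_fraction` are mine for illustration and do not come from the filing.

```python
from dataclasses import dataclass


@dataclass
class Interval:
    """A span of time (in seconds) during which the player looked away."""
    start: float
    end: float


def actions_while_looking_away(action_times, away_intervals):
    """Return the action timestamps that fall inside any look-away interval."""
    return [
        t for t in action_times
        if any(iv.start <= t <= iv.end for iv in away_intervals)
    ]


def away_action_fraction(action_times, away_intervals):
    """Fraction of all actions that happened while the player looked away."""
    if not action_times:
        return 0.0
    flagged = actions_while_looking_away(action_times, away_intervals)
    return len(flagged) / len(action_times)


# Hypothetical data: five in-game actions, one look-away interval.
actions = [1.0, 2.5, 3.0, 7.2, 9.9]
away = [Interval(2.0, 4.0)]
print(away_action_fraction(actions, away))
```

A high fraction would only be one signal among many; a real system would presumably feed it into a model rather than act on it directly.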

Another approach that Nvidia is exploring involves analysing gameplay video clips and trying to detect any anomalies. If there are any anomalies, machine learning will be used to attempt to tag the type of cheating that could be causing them. The types of cheating include:

automatic aiming: bots that give users perfect accuracy when shooting

wall hacking: where players can see through walls to easily detect enemies

recoil reduction: enabling better and faster shooting by minimising recoil

unauthorised map visibility: users could see the full map of a game and see where all of the players are, gaining an unfair advantage

Another interesting approach that Nvidia is considering involves using biometric data as an input to a model to detect cheating. This could include camera data, audio data from a microphone, and data such as heartbeat and breathing patterns. Ignoring the question of ‘how’ Nvidia would capture this data, a player’s input could be compared to the changes in heart rate and breathing. For example, if the rate of input accelerates without a corresponding change in heart rate or breathing, this may be an indicator that a bot is being used.
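As a rough illustration of the heart-rate example, the sketch below compares how much the input rate and the heart rate change between the first and second half of a window. Everything here is an assumption on my part: the function names, the half-window comparison and the thresholds (`input_jump`, `heart_jump`) are made up for the example, and the filing describes feeding biometric data into a model, not a fixed rule like this.

```python
from statistics import mean


def relative_change(series):
    """Relative change between the mean of the first and second half."""
    half = len(series) // 2
    if half == 0:
        raise ValueError("need at least two samples")
    before = mean(series[:half])
    after = mean(series[half:])
    if before == 0:
        raise ValueError("baseline mean is zero, relative change is undefined")
    return (after - before) / before


def input_outpaces_physiology(inputs_per_sec, heart_bpm,
                              input_jump=0.5, heart_jump=0.05):
    """True if input rate jumps while heart rate stays almost flat.

    Thresholds are illustrative assumptions, not values from the filing.
    """
    return (relative_change(inputs_per_sec) >= input_jump
            and abs(relative_change(heart_bpm)) < heart_jump)


# Hypothetical samples: input rate doubles, heart rate barely moves.
print(input_outpaces_physiology([4, 4, 8, 8], [70, 71, 70, 71]))
```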

While there are likely to be a lot of false positives, Nvidia is also considering building a profile of each player’s history that records any past decisions made about cheating. If a player has already been determined to have cheated, then a future instance of possible cheating is less likely to be dismissed as a false positive.
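One way to think about why a player’s history matters is Bayes’ rule: the same detector output means something different depending on the prior probability that the player cheats. The sketch below is my own framing, not a method stated in the filing, and the detector rates and priors are invented numbers chosen only to show the effect.

```python
def posterior_cheat_probability(prior, true_positive_rate, false_positive_rate):
    """Bayes' rule: P(cheating | detector flagged the player)."""
    flagged = true_positive_rate * prior + false_positive_rate * (1 - prior)
    if flagged == 0:
        raise ValueError("detector can never flag with these rates")
    return true_positive_rate * prior / flagged


# Hypothetical detector: flags 90% of cheaters and 10% of honest players.
clean_history = posterior_cheat_probability(0.01, 0.9, 0.1)
prior_offender = posterior_cheat_probability(0.5, 0.9, 0.1)
print(f"clean history:  {clean_history:.3f}")
print(f"prior offender: {prior_offender:.3f}")
```

With these made-up numbers, a flag on a player with a clean record is still most likely a false positive, while the same flag on a previously caught player is far more likely to be genuine.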

Overall, this filing is interesting to me because it highlights how gaming is eating the world – whether in media, entertainment, social life or even as a profession. Catching cheaters and punishing them is increasingly important. And as gaming environments are prime contenders to be homes to the metaverse where we live, breathe and work, catching cheating will become even more crucial.

2. Uber – detecting non-compliant use of micro-mobility vehicles

Right now, it’s pretty difficult for micro-mobility platforms to know when a user is breaking the rules. For instance, in many cities, you’re not supposed to ride electric scooters on the pavement. But with GPS data only being accurate to around 3 metres, it’s difficult for the platforms to be able to decide if a user is using the vehicle correctly. Or in other instances, users might park their vehicles in an illegal space.

In order to better detect non-compliance, Uber is looking at using cameras on the vehicles to take images of the surrounding environment. These images can be cross-checked with maps that indicate where parking is permissible. Using machine learning, Uber will then estimate whether a vehicle is parked against the rules.

If a vehicle is deemed to be parked illegally, Uber will then send a notification to the user telling them that the vehicle is improperly parked. If a user doesn’t correct it, the user might be penalised for it.
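The notify-then-penalise flow can be sketched as a small decision function. This is purely illustrative: `p_violation` stands in for whatever probability Uber’s model would produce from the images and map data, and the threshold, the reminder limit and the action names are all assumptions of mine.

```python
def next_action(p_violation, reminders_sent, threshold=0.8, max_reminders=1):
    """Decide what to do after a parking check.

    p_violation: hypothetical model estimate that the vehicle is parked
    against the rules (0.0 to 1.0).
    reminders_sent: notifications already sent to the rider for this parking.
    """
    if not 0.0 <= p_violation <= 1.0:
        raise ValueError("p_violation must be between 0 and 1")
    if p_violation < threshold:
        return "ok"
    if reminders_sent < max_reminders:
        return "notify_rider"
    # The rider was already told and the vehicle still looks badly parked.
    return "consider_penalty"


print(next_action(0.3, reminders_sent=0))
print(next_action(0.95, reminders_sent=0))
print(next_action(0.95, reminders_sent=1))
```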

Similarly, when it comes to riding a vehicle, image data will be used to determine if a user is breaking the rules. For instance, if there are lots of pedestrians in direct view of the vehicle, there’s a high probability that the rider is on the pavement. To alert the user, Uber could use audio alerts from the vehicle itself, notifying the user of what they’re doing wrong and the consequences of not fixing it.

If this system gets implemented, it’s interesting to think about the privacy implications of having a fleet of micro-mobility vehicles riding through cities, capturing image data of cities, riders and pedestrians on the streets. Will there be an outcry or is this just a necessary outcome of humans interacting with portable and connected devices?

3. Microsoft – intent recognition and emotional text-to-speech

Text-to-speech is extremely useful in scenarios where users can’t or don’t want to focus on reading text from a screen. For instance, if someone sends you a text message while driving, having the message read out to you is safer than you being distracted from the road.

However, having messages read out to you in a neutral tone doesn’t feel natural and actually makes it difficult to listen along.

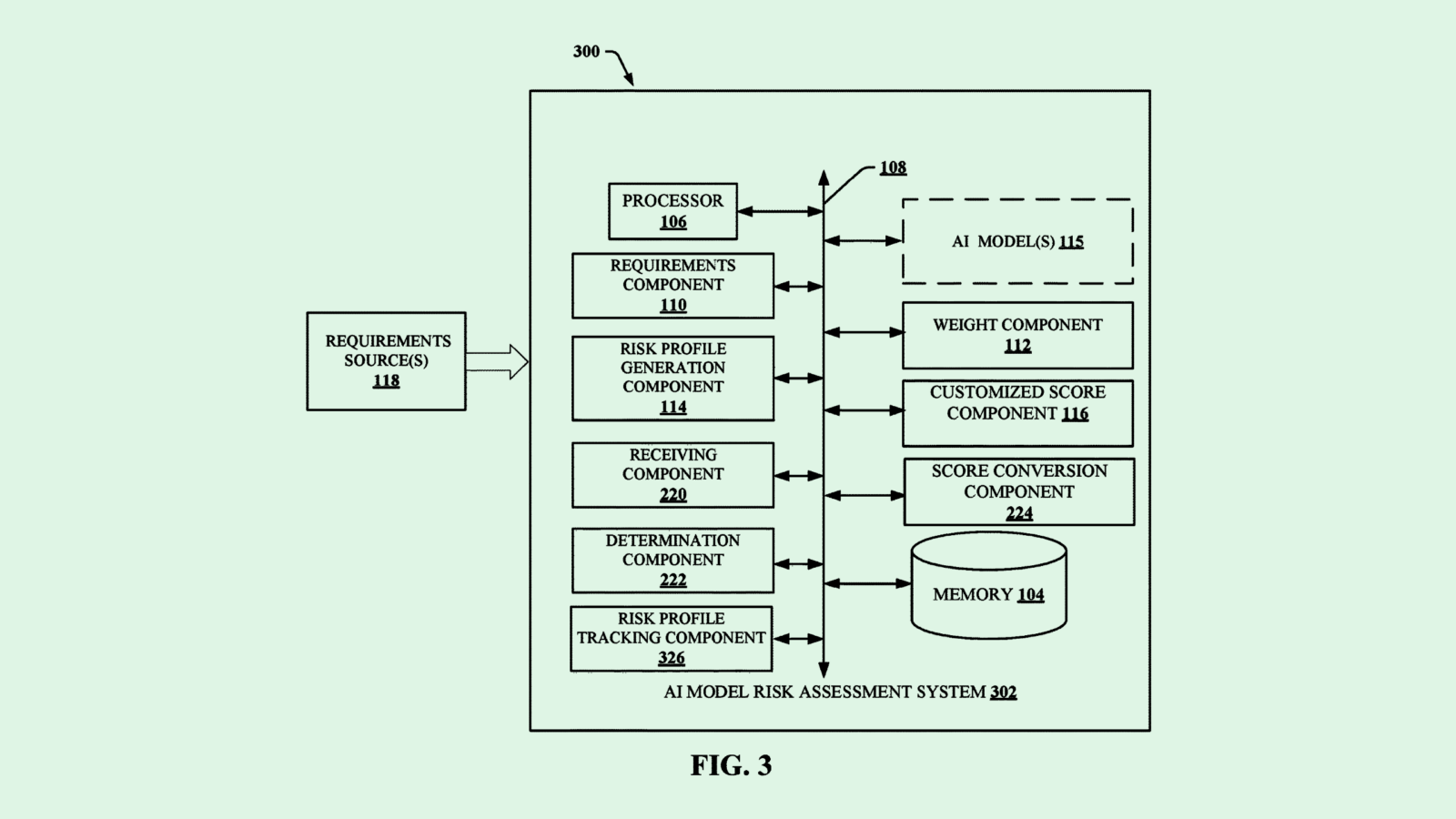

Microsoft is working on two things that are inter-related.

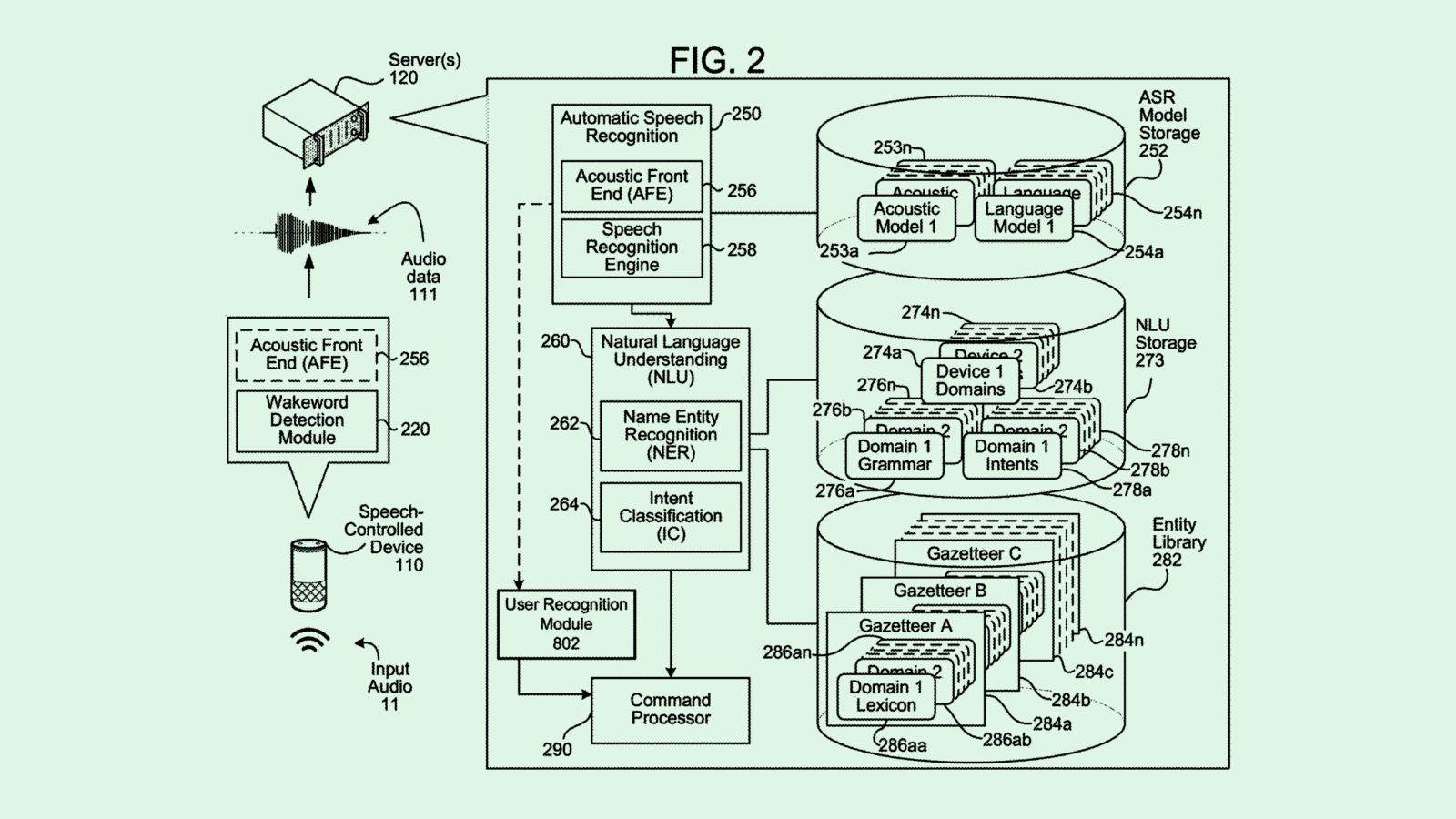

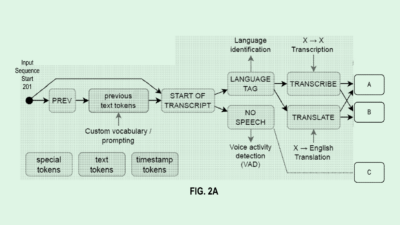

Firstly, it’s working on an intent-recognition system. When a user says something to a computing device (e.g. Siri), Microsoft will study the speech for the words that are being said, as well as the acoustic features of the speech. These acoustic features may give Microsoft more context into understanding a user’s intent. For instance, “I’m hungry” being said sarcastically has a different intent to someone saying it with a neutral tone. For the latter, the user might want suggestions on local restaurants.

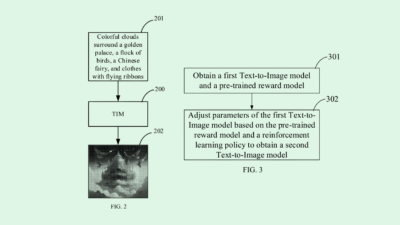

Secondly, Microsoft is working on emotional text-to-speech. Based on textual data, Microsoft will determine the emotions in the message, and then synthesise speech that mimics human expression.

Understanding human emotion in dialogue and being able to express the written word with the appropriate emotion will make for more fluid, life-like human-machine interactions. The voice interactions we currently have with the likes of Siri are extremely primitive. We don’t have conversations with these interfaces, we simply give orders and wait for those orders to be understood. With GPT-3, we can have pretty realistic text conversations with machines. Throw an emotionally expressive voice layer on top of that, and suddenly we might feel like we’re interacting with human, conscious entities.