Nvidia Patent Keeps Data Centers Energy Efficient

Nvidia wants to keep data centers from standing idle.


Nvidia wants to make sure its servers aren’t wasting energy.

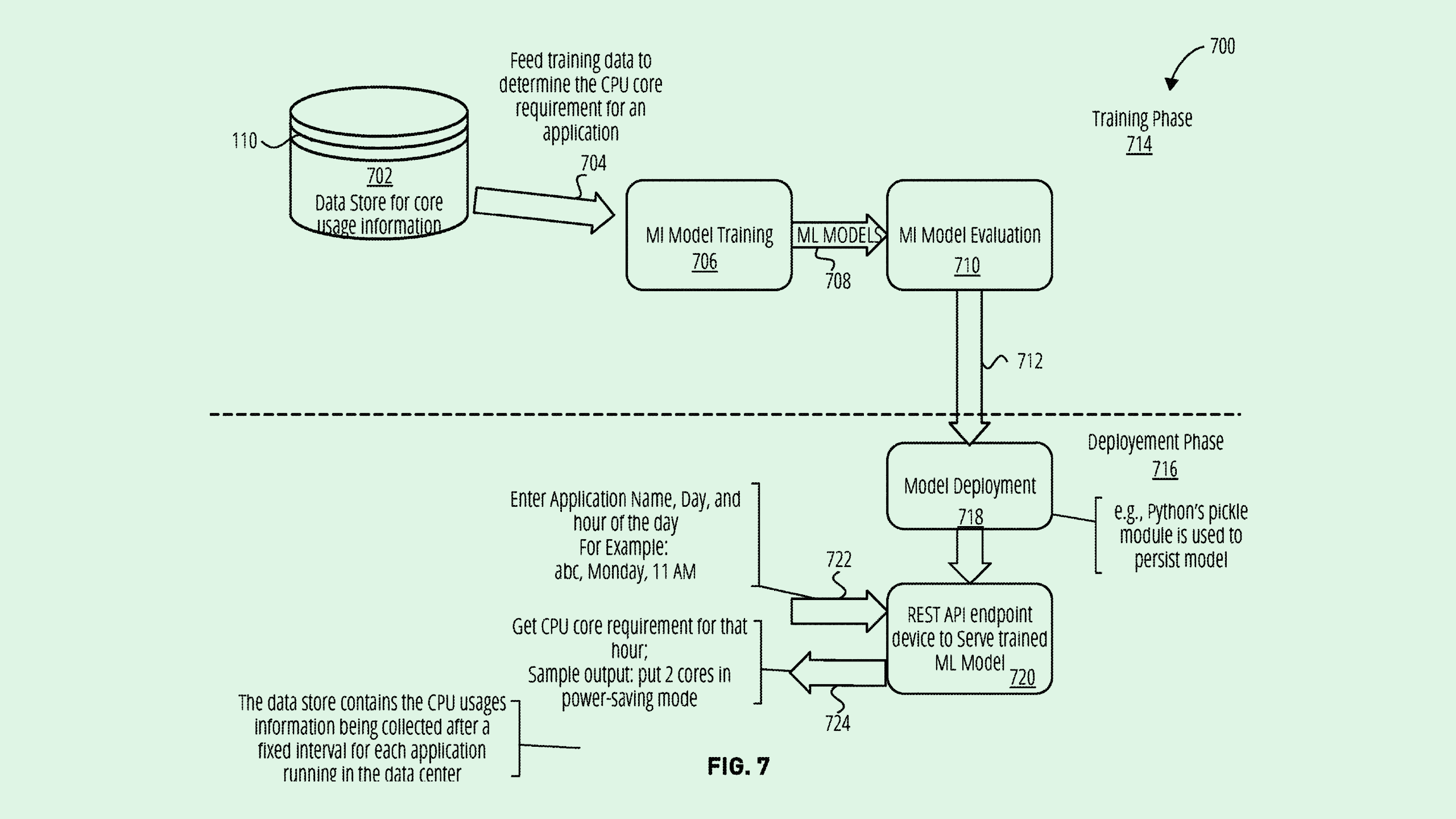

The company wants to patent a system for identifying “idle cores” in data centers using machine learning. Nvidia’s tech uses AI to determine which “cores,” or processing units, are being underutilized, and power them down accordingly.

“It is important to find idle or underutilized computing devices so that the usages of these computing devices can be more efficiently allocated,” Nvidia said. “In the data center or cloud environment, it is important to save power (energy) consumed by a server.”

Essentially, this system uses a machine learning model to monitor servers and spot processing units that are wasting power by idling when they’re not needed. Once it identifies them, Nvidia’s system can switch those processors between two modes: “power-saving mode” and “performance mode.” A processor that isn’t needed goes into power-saving mode.
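To make the two-mode idea concrete, here is a minimal sketch in Python. It is not Nvidia's method: the filing describes a machine learning model, while this stand-in uses a fixed utilization cutoff. The names `Mode`, `assign_modes`, and `IDLE_THRESHOLD`, and the 10% cutoff itself, are hypothetical and chosen only for illustration.

```python
from enum import Enum


class Mode(Enum):
    POWER_SAVING = "power-saving"
    PERFORMANCE = "performance"


# Hypothetical cutoff: cores busy less than 10% of the time count as idle.
# The patent describes a learned model here, not a fixed threshold.
IDLE_THRESHOLD = 0.10


def assign_modes(utilization, idle_threshold=IDLE_THRESHOLD):
    """Map each core ID to a mode based on its utilization (0.0 to 1.0)."""
    return {
        core_id: Mode.POWER_SAVING if busy < idle_threshold else Mode.PERFORMANCE
        for core_id, busy in utilization.items()
    }


# Example: core 0 is nearly idle, core 1 is busy.
modes = assign_modes({0: 0.02, 1: 0.85})
```

In this toy version, core 0 would be placed in power-saving mode and core 1 would stay in performance mode.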

Additionally, Nvidia’s system can determine when processors may be needed using machine learning-based prediction. This part of the system takes in historical data regarding the energy consumption of different jobs, applications, or tasks completed by these CPUs, and predicts how much energy is going to be used in the future.

The system may do this analysis and prediction at any given point in time – for example, looking at energy usage from one hour to predict the next hour. With these predictions, the system can preemptively put certain processors into power-saving mode, keeping only as many in performance mode as it expects to need. If demand unexpectedly rises, the powered-down processors can be kicked back into performance mode.
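The hour-ahead planning described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the patented system: a plain average of the last hour's readings stands in for the machine learning prediction, load is measured in arbitrary units rather than energy, and every function name and parameter (`per_core_capacity`, `total_cores`, and so on) is hypothetical.

```python
import math
from statistics import mean


def predict_next_hour_load(last_hour_samples):
    """Naive forecast: the average of the last hour's load samples.

    Stand-in for the learned predictor described in the filing.
    """
    return mean(last_hour_samples)


def cores_needed(load, per_core_capacity, total_cores):
    """Smallest number of cores that covers the load, kept within 1..total_cores."""
    needed = math.ceil(load / per_core_capacity)
    return max(1, min(total_cores, needed))


def plan_modes(last_hour_samples, per_core_capacity, total_cores):
    """Keep only the predicted number of cores in performance mode."""
    active = cores_needed(
        predict_next_hour_load(last_hour_samples), per_core_capacity, total_cores
    )
    return ["performance"] * active + ["power-saving"] * (total_cores - active)


def adjust_for_demand(active, observed_load, per_core_capacity, total_cores):
    """Wake extra cores if observed load exceeds what the plan covers."""
    return max(active, cores_needed(observed_load, per_core_capacity, total_cores))


# Four load readings from the past hour, 8 cores, each handling 100 units.
plan = plan_modes([300, 350, 400, 350], per_core_capacity=100, total_cores=8)
```

With these made-up numbers, the forecast is 350 units, so four cores stay in performance mode and four go into power-saving mode. If observed load then jumps to 620 units, `adjust_for_demand(4, 620, 100, 8)` raises the active count to seven.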

While data centers and cloud services provide a huge amount of computing power for developing AI, the reverse is also true, said Trevor Morgan, VP of product at OpenDrives. Machine learning can prove incredibly valuable for automating the operation of data centers, especially when it comes to making things as efficient as possible, he said.

“You’re always worried about idle resources and using them — that’s the whole notion of load balancing,” said Morgan. “This (patent) goes in a different direction. This asks, ‘Where can we shut things down in order to have a better consumption of power while still handling the workload?’”

But turning off a few idle processors in one data center won’t save much power. When applied to hundreds of data centers, however, this kind of tech can result in significant energy savings, said Morgan. Plus, turning off these processors when they’re not in use can extend their life expectancy, something that may be particularly useful as demand for cloud services and chips grows.

A patent like this makes sense for a chip giant like Nvidia. The company’s semiconductors have spread like wildfire throughout data centers, especially amid the AI boom. Its fourth-quarter data center revenue reached $18.4 billion, up 409% from the previous year. Given the amount of energy that data centers tend to suck up, this patent could be a method of solving a problem that its own technology is complicit in – and making more money while doing it.