Snap Patent Brings Emotion Detection to Workplace Surveillance

AI that’s meant to detect emotion is highly controversial.


Snap’s latest patent application aims to keep smiles on employees’ faces.

The social media firm is seeking to patent “emotion recognition for workforce analytics.” Snap’s tech aims to measure “quality metrics” for individuals in the name of “workforce optimization,” with the filing using call-center employees as an example.

“Contact centers typically stress that their employees stay emotionally positive when talking to customers,” Snap noted in the filing. “Employers may want to introduce control processes to monitor the emotional status of their employees on a regular basis to ensure they stay positive and provide high quality service.”

Snap’s system of “video-based workforce analytics” captures the facial expressions of an employee or agent during a video chat so that they can be “recognized, recorded, and analyzed.”

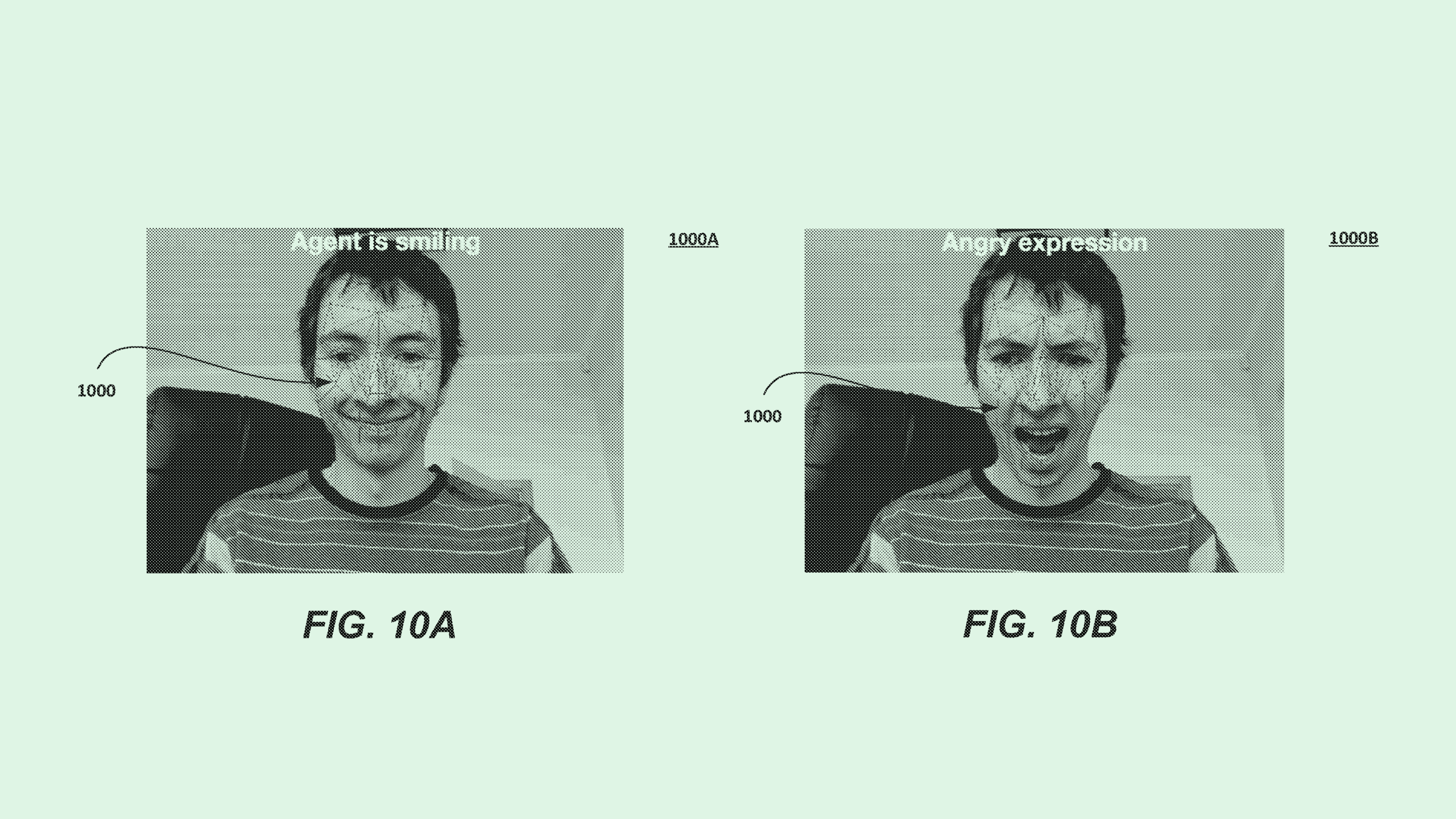

By aligning a user’s facial landmarks with what Snap calls a “virtual face mesh,” the system determines whether the user’s face shows a “deformation” associated with a certain emotion. The landmarks may be analyzed by an AI-based algorithm that compares them to reference images. Basically, if you’re showing anything but a straight face, this system will be able to connect it with an emotion.

For example, a smile may signal a positive attitude, while a furrowed brow may be a sign of a negative one. Audio data may also be collected to determine speech and voice characteristics to inform emotion detection even further.
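As a rough illustration of the matching idea described above, here is a toy nearest-template classifier in Python. It is a minimal sketch under stated assumptions, not Snap’s method: the three landmarks, their coordinates, the emotion templates, and the names `NEUTRAL_MESH`, `REFERENCE_DEFORMATIONS`, and `classify_expression` are all hypothetical, and the landmarks are assumed to have already been aligned to the neutral mesh.

```python
import math

# Hypothetical landmark order: left mouth corner, right mouth corner, brow centre.
# Coordinates are (x, y) with y pointing up; real systems track many more points.
NEUTRAL_MESH = [(-1.0, 0.0), (1.0, 0.0), (0.0, 2.0)]

# Hypothetical reference deformations (offsets from the neutral mesh) per label.
REFERENCE_DEFORMATIONS = {
    "positive": [(-0.1, 0.3), (0.1, 0.3), (0.0, 0.0)],    # mouth corners raised (smile)
    "negative": [(0.0, -0.1), (0.0, -0.1), (0.0, -0.3)],  # brow lowered (furrow)
}


def deformation(landmarks, mesh=NEUTRAL_MESH):
    """Offset of each observed landmark from its aligned neutral-mesh position."""
    return [(lx - mx, ly - my) for (lx, ly), (mx, my) in zip(landmarks, mesh)]


def distance(a, b):
    """Euclidean distance between two deformations treated as one flat vector."""
    return math.sqrt(sum((ax - bx) ** 2 + (ay - by) ** 2
                         for (ax, ay), (bx, by) in zip(a, b)))


def classify_expression(landmarks, min_shift=0.05):
    """Return the closest reference label, or 'neutral' if no landmark moved much."""
    d = deformation(landmarks)
    if max(math.hypot(dx, dy) for dx, dy in d) < min_shift:
        return "neutral"
    return min(REFERENCE_DEFORMATIONS,
               key=lambda label: distance(d, REFERENCE_DEFORMATIONS[label]))


print(classify_expression([(-1.1, 0.25), (1.1, 0.3), (0.0, 2.0)]))  # positive
print(classify_expression([(-1.0, -0.1), (1.0, -0.1), (0.0, 1.7)]))  # negative
print(classify_expression(NEUTRAL_MESH))                              # neutral
```

Because every face is scored against the same fixed templates, a person whose resting or smiling face differs from those templates can be misclassified, which is the reference-point problem critics raise about systems like this.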

These emotions may be recorded in an employee record as “work quality parameters,” such as “tiredness, negative attitude to work, stress, anger, disrespect, and so forth,” Snap said. The filing notes that these parameters could inform employer decisions related to “workforce optimization,” such as “laying-off underperforming employees, and promoting those employees that have a positive attitude towards customers.”

Emotion recognition is a common theme in Big Tech patent applications. Amazon has attempted to patent tech for its smart speakers, Microsoft has looked into emotion-detecting chatbots and meeting technology, and Nvidia has filed applications for expression-tracking tech for animation.

Microsoft has even sought to patent tech that similarly aims to track employee emotions, though it looks solely at text data from messages and emails rather than at facial expressions and audio.

Though Snap is known for its social media and AR offerings, tech firms often file patent applications for inventions outside their wheelhouses. If granted, the patent would give Snap exclusive rights to a potentially powerful tool for emotional analytics.

However, emotion recognition technology is highly controversial: AI simply isn’t very good at understanding human emotions. Though using multiple forms of data in tandem could help inform an AI model’s output, happiness, sadness, or any other emotion still looks different for everyone.

Different cultures and regions have different forms of emotional expression, as do people who may be neurodivergent. This widespread variety in data makes it nearly impossible for an AI model to capture and understand every form of expression. Instead, systems like this may compare each individual’s emotional expression to whatever the developer deems a point of reference.

“All emotion recognition systems we’ve seen have had biases,” Calli Schroeder, senior counsel and global privacy counsel at the Electronic Privacy Information Center, said in an email.

Even if Snap’s system works perfectly, this kind of surveillance may not be favorable to the employees subjected to it. As workplace surveillance has become commonplace, the practice may be impacting workers’ mental health. A study from the American Psychological Association found that 56% of workers who experienced employee monitoring felt tense and stressed in their workplace. Adding emotion monitoring into the mix could worsen a workplace’s culture and erode trust further.

“There is no reason to have this technology besides increasing workplace surveillance and micromanaging,” Schroeder said.