Nvidia Catches Feelings With Its Emotion-Reading AI Patent

Nvidia wants to pick out specific emotions in your voice with deep learning, but reading your feelings may require more context.

Nvidia wants to apply its AI expertise to more than just chips.

The company is seeking to patent a system that can infer emotion from “speech in audio data” using deep learning. Nvidia’s tech would use AI to figure out the probability that a person’s speech lines up with a set of “emotion classes.”

Nvidia said that other attempts to build emotion-reading AI often do not generalize well to speakers who were not part of the training data. Other systems also “determine a single emotion for an entire segment of audio, which does not capture any variations in emotional state of a speaker during that segment.”

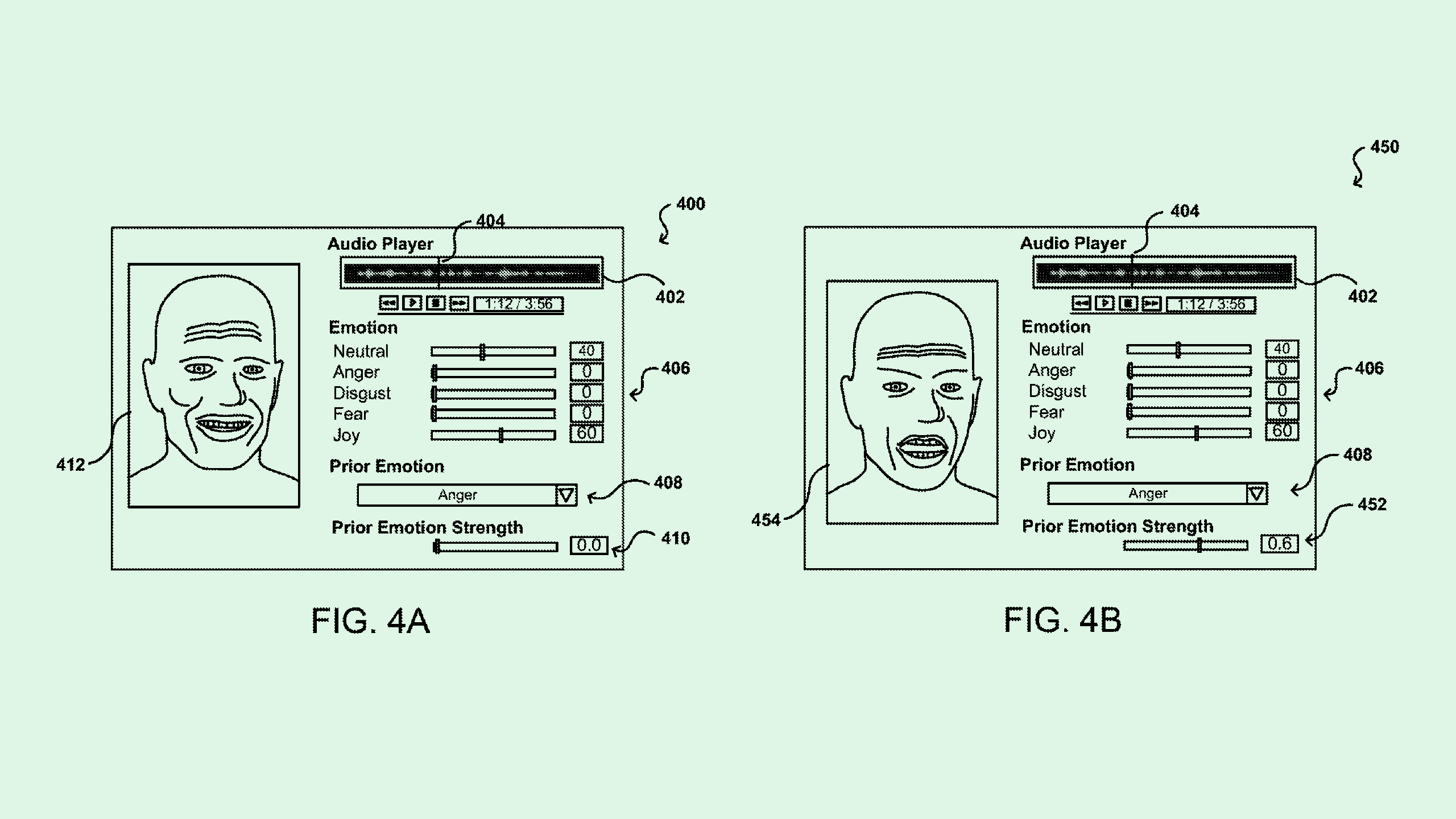

This tech takes an audio clip that has gone through some “pre-processing” to remove background noise and passes it through an “emotional determination module,” which uses a neural network or other deep learning model to classify the speaker’s different emotional states. The system may pick up on a number of emotions, such as fear, joy, anger or disgust, and place each of them on a sliding scale that can shift throughout the speech.
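To make the “sliding scale” idea concrete, here is a minimal sketch of one common way a classifier can turn raw scores into per-class probabilities for each short window of audio, so the mix of emotions can change over the course of an utterance. It assumes a trained network already produces one score per emotion class per window; the function names, the four-class list and the sample scores are all hypothetical, and this is not Nvidia’s actual implementation.

```python
import math
from typing import Dict, List, Sequence

# Hypothetical emotion classes; the article names fear, joy, anger and disgust.
EMOTION_CLASSES = ["fear", "joy", "anger", "disgust"]


def softmax(scores: Sequence[float]) -> List[float]:
    """Convert raw per-class scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def emotion_timeline(window_scores: Sequence[Sequence[float]]) -> List[Dict[str, float]]:
    """Map each audio window's scores to a probability per emotion class.

    `window_scores` stands in for the output of a hypothetical trained
    network run over consecutive windows of pre-processed audio.
    """
    timeline = []
    for scores in window_scores:
        probs = softmax(scores)
        timeline.append(dict(zip(EMOTION_CLASSES, probs)))
    return timeline


if __name__ == "__main__":
    # Made-up scores for three consecutive windows of one utterance.
    scores = [
        [0.1, 2.0, 0.3, 0.0],  # joy scores highest
        [0.5, 1.0, 1.2, 0.1],  # joy and anger are close
        [0.2, 0.1, 2.5, 0.9],  # anger scores highest
    ]
    for t, probs in enumerate(emotion_timeline(scores)):
        top = max(probs, key=probs.get)
        print(t, top, round(probs[top], 2))
```

Because every window gets its own distribution, the output captures a shift from mostly joy toward mostly anger instead of a single label for the whole clip, which is the limitation Nvidia attributes to earlier systems.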

While AI can make the initial emotional predictions, the user may also give the system prior emotional values that can be “blended” with these emotional determination values to make the predictions more precise.
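The filing, as described here, does not spell out how the blending works. One simple possibility is a weighted average of the model’s predictions and the user’s prior values, sketched below under that assumption. The function name and the `prior_weight` parameter are hypothetical.

```python
from typing import Dict


def blend_emotions(
    predicted: Dict[str, float],
    prior: Dict[str, float],
    prior_weight: float = 0.3,
) -> Dict[str, float]:
    """Blend model predictions with user-supplied prior emotion values.

    Assumed scheme: a weighted average. `prior_weight` (hypothetical) sets
    how much the prior counts: 0.0 keeps the model's prediction, 1.0 uses
    the prior alone. Classes missing from one input count as 0 there.
    """
    if not 0.0 <= prior_weight <= 1.0:
        raise ValueError("prior_weight must be between 0 and 1")
    classes = set(predicted) | set(prior)
    blended = {
        c: (1.0 - prior_weight) * predicted.get(c, 0.0) + prior_weight * prior.get(c, 0.0)
        for c in classes
    }
    # Renormalize so the blended values still sum to 1 even if the prior did not.
    total = sum(blended.values())
    return {c: v / total for c, v in blended.items()} if total > 0 else blended


if __name__ == "__main__":
    predicted = {"fear": 0.1, "joy": 0.2, "anger": 0.6, "disgust": 0.1}
    prior = {"joy": 1.0}  # e.g. a user indicating the speaker should read as joyful
    print(blend_emotions(predicted, prior, prior_weight=0.5))
```

With equal weighting, the example shifts joy from 0.2 to 0.6 and halves anger to 0.3, showing how a prior can nudge the output without discarding what the model heard.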

Nvidia pointed out a number of use cases for this tech, such as managing customers at a call center or adding context in conversational AI, and the company also noted that it would be useful for generating animations that match the speaker’s emotional state. In addition to this patent, the company separately filed an application for “audio-driven facial animation” with machine learning-based emotional support.

That application essentially picks up where the emotion-reading patent leaves off, using speech input to generate “audio-driven facial animation that is emotion-accurate.”

Nvidia isn’t the first company to take a stab at emotion-detecting AI patents. Amazon has attempted to do this with its Alexa devices, Microsoft with its email and chat platforms, and even Disney with its CCTV cameras in parks.

Nvidia’s invention stands out, however, because it uses a sliding scale of different emotion classes that fluctuates throughout a user’s speech, rather than one defining emotion to rule them all.

Still, emotion detection has historically been difficult, said Bob Rogers, PhD, data scientist and CEO of Oii.ai, because there isn’t a great “standard of truth” for how people present their emotions. There’s also great variability in the underlying data used to train emotion-detecting systems, and there’s tons of data needed to really grasp a full range of emotions.

Along with those variables, the way people emote differs cross-culturally and with neurodivergence. For example, people from some cultures may have more restrained emotional displays than others, and people with autism may present their own emotions differently.

“There’s not a great way to automatically generate ground truth on emotions,” said Rogers. “Some aspects can probably be surpassed, because some of those things can be captured as context variables. But if they’re not being added into a data stream in some way … you’re not going to get there.”

The best way to break these barriers could be combining different sources of data — such as voice, conversational or environmental context, facial expressions — to come to one conclusion, said Rogers. No single source will give you the full picture, he said.
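Rogers did not describe a specific method, but one simple way to combine sources is “late fusion”: each source (voice, facial expression, context) produces its own emotion probabilities, and the results are merged with a weighted average. The sketch below assumes that approach; the source names, weights and function name are hypothetical.

```python
from typing import Dict, Mapping


def fuse_sources(
    source_probs: Mapping[str, Mapping[str, float]],
    source_weights: Mapping[str, float],
) -> Dict[str, float]:
    """Weighted late fusion of per-source emotion probabilities.

    `source_weights` (hypothetical) must have a non-negative weight for
    every source in `source_probs`; weights are normalized to sum to 1.
    """
    if any(source_weights[s] < 0 for s in source_probs):
        raise ValueError("source weights must be non-negative")
    total_weight = sum(source_weights[s] for s in source_probs)
    if total_weight <= 0:
        raise ValueError("at least one source needs a positive weight")
    fused: Dict[str, float] = {}
    for source, probs in source_probs.items():
        w = source_weights[source] / total_weight
        for emotion, p in probs.items():
            fused[emotion] = fused.get(emotion, 0.0) + w * p
    return fused


if __name__ == "__main__":
    # Voice alone suggests anger; the face suggests joy. Neither is the full picture.
    per_source = {
        "voice": {"joy": 0.3, "anger": 0.7},
        "face": {"joy": 0.8, "anger": 0.2},
    }
    print(fuse_sources(per_source, {"voice": 1.0, "face": 1.0}))
```

Here the two sources disagree, and the fused estimate (joy about 0.55, anger about 0.45) reflects both, which is the point of combining signals rather than trusting any single one.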

While aligning tech and emotion has been a difficult road thus far, surpassing these obstacles could lead to a far more engaging experience with AI, said Rogers.

“I think there’s a strong sense among technologists and product developers that the sense of emotion will make these things much more sort of compelling to people,” he said. “That extra level of interaction we can have depends on emotional content.”