Microsoft Makes Chatbots Mindful with Emotion Detection Patent

The company’s patent for a wellness chatbot could require users to have a lot of trust in the company to protect their data.


Microsoft wants its chatbots to do wellness checks.

The company is seeking to patent a way for conversational AI to provide “emotional care in a session.” Microsoft’s filing details a system that amounts to an emotional support chatbot meant to offer psychological and cognitive monitoring. Though the filing says the tech is for people of all ages, it notes that it may be particularly beneficial to older users.

“One challenge for these old people is how they can spend 20 or more years after their retirement in a healthy living style,” Microsoft said in the filing. “The emotional care provided by the embodiments of the present disclosure would be beneficial for the user to live in a healthy way.”

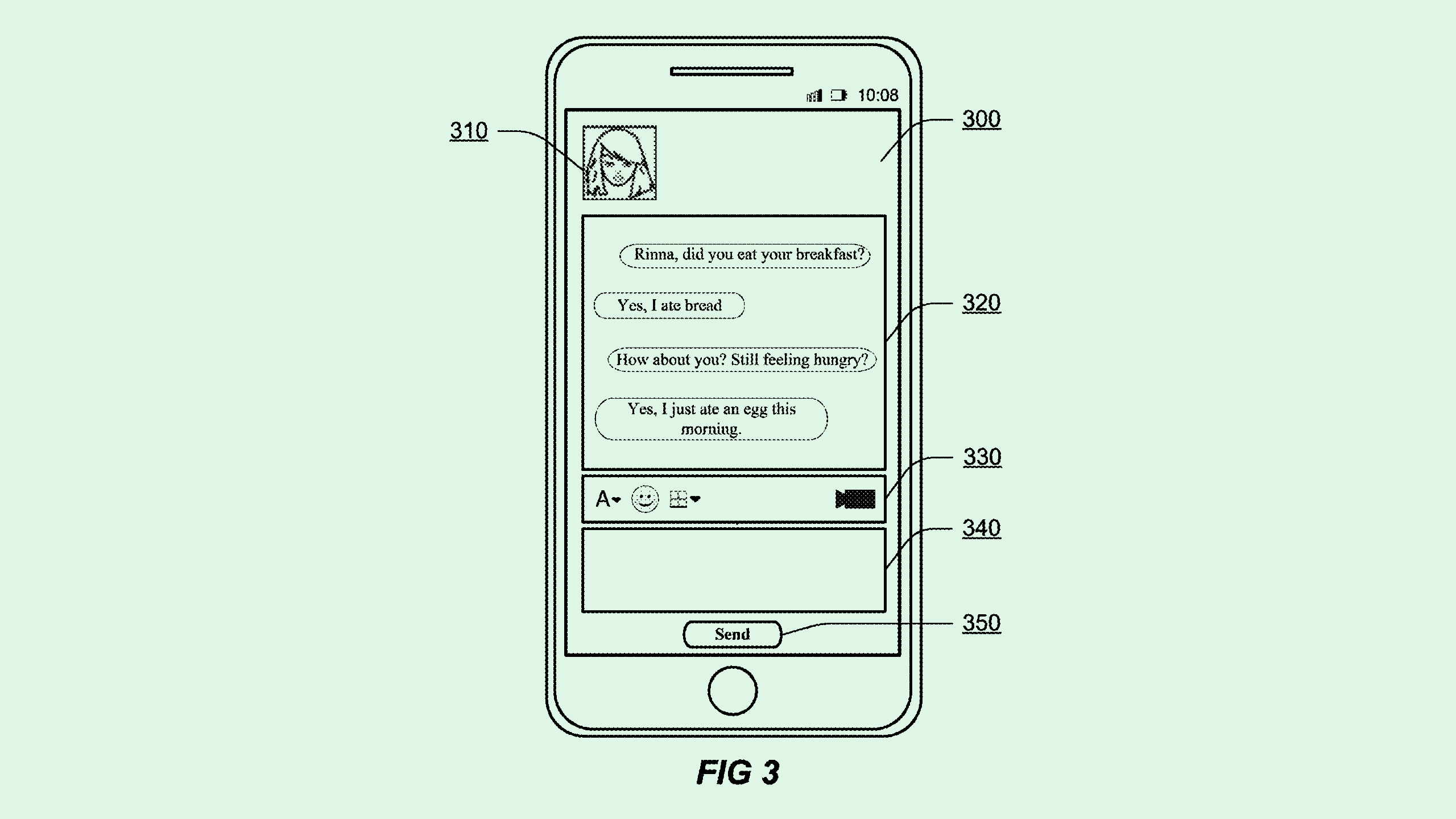

Here’s how it works: Microsoft’s chatbot takes into account user images, profile data and chatbot conversations to understand the user’s background. This data is put into a user’s “memory record,” which is used to inform the chatbot in its conversations, such as to “help the user to recall or remember his past experiences.”

During conversations with the chatbot, the system will use an “emotional analysis model” to classify the user’s inputs into different emotional categories and respond accordingly. Microsoft also noted that the classifier can attempt to analyze emotions in text, voice, image and video.
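To make that flow concrete, here is a minimal Python sketch of the two pieces described above: a “memory record” that stores what the user has said, and a classifier that sorts text into emotional categories before a reply is chosen. It is an illustration only and is not taken from the filing. The keyword lists, the `MemoryRecord` class and the `classify_emotion` and `respond` functions are all hypothetical, and a simple keyword match stands in for whatever trained model a real system would use.

```python
import re
from dataclasses import dataclass, field
from typing import List, Tuple

# Hypothetical keyword lists; a stand-in for a trained emotion model.
EMOTION_KEYWORDS = {
    "sad": {"lonely", "sad", "miss", "tired"},
    "happy": {"happy", "glad", "great", "love"},
    "angry": {"angry", "annoyed", "furious"},
}

# Hypothetical canned replies, one per emotional category.
RESPONSES = {
    "sad": "I'm sorry to hear that. Would you like to talk about it?",
    "happy": "That's wonderful. Tell me more!",
    "angry": "That sounds frustrating. What happened?",
    "neutral": "I see. How are you feeling today?",
}


@dataclass
class MemoryRecord:
    """Stores past user statements so they can be recalled later."""
    entries: List[str] = field(default_factory=list)

    def add(self, text: str) -> None:
        self.entries.append(text)

    def recall(self, keyword: str) -> List[str]:
        # Case-insensitive substring search over stored statements.
        return [e for e in self.entries if keyword.lower() in e.lower()]


def classify_emotion(text: str) -> str:
    """Return the category with the most keyword hits, or 'neutral'."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    scores = {label: len(words & kws) for label, kws in EMOTION_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "neutral"


def respond(text: str, memory: MemoryRecord) -> Tuple[str, str]:
    """Classify the input, save it to memory, and pick a reply."""
    emotion = classify_emotion(text)
    memory.add(text)
    return emotion, RESPONSES[emotion]


if __name__ == "__main__":
    memory = MemoryRecord()
    print(respond("I feel lonely and tired today", memory))
    print(memory.recall("lonely"))
```

Even this toy version hints at why accuracy is hard: a fixed word list has no notion of sarcasm, context or cultural differences in how people express emotion.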

This system also includes a “test module” to monitor and decipher a user’s cognitive conditions, and the filing notes that these tests “may be conducted in an explicit way or in an implicit way.” Microsoft’s filing also includes a broad definition of “emotional care,” which may include emotional chatting, providing knowledge on different medical conditions, checking in on the user’s dietary habits, tracking specific psychological conditions and keeping a record of the user’s state.

Using AI for emotional detection and classification seems to be a running theme with some recent Big Tech patent filings: Nvidia wants to patent a way to glean emotions from audio data, Apple wants to visualize your stress with generative AI, Snap is working on emotion-based text-to-speech and Amazon wants to tell if you’re mad at Alexa.

Microsoft itself has sought similar patents, including one looking to regulate emotional tone in professional messages to coworkers. The tech giant also previously operated Xiaoice, a Chinese chatbot meant to offer empathetic conversations with its users, which it spun out into its own entity in 2020. Plus, emotionally sympathetic chatbots are already widespread.

The main difference with this filing is its potential to test and monitor a user’s psychological and cognitive state, both with and without them realizing it. Therein lies the potential for problems, said Brian P. Green, director of technology ethics at the Markkula Center for Applied Ethics at Santa Clara University.

Even if Microsoft has the best intentions in how this tech could be utilized, users need to have a high level of trust in the companies providing these services, said Green, as it requires them to offer up very sensitive and personal data during potentially vulnerable states of mind.

“The reason people trust prescription drugs, for example, is because they’ve gone through a long approval process,” said Green. “It seems to me like with a lot of AI products, we’re skipping straight to deployment to the public, when maybe actually they need to go through more testing.”

In addition, AI-based emotional detection is often inaccurate. Because people across cultures and generations emote in vastly different ways, Green said, creating an AI system that can capture and understand all of it is an incredibly difficult task. “It depends a lot on the data, and if you’re getting diverse data from a global perspective for many different ethnic groups.”