Adobe Patent Tackles Bias in Image AI Tools

The tech relies on oversampling images with “minority attributes” so that the outputs actually reflect the inputs.


Adobe wants to make sure it’s presenting itself the right way.

The software firm filed a patent application for “debiasing image to image translation models.” Adobe’s system seeks to balance the output of generative image models used for “image-to-image translation tasks,” so that they properly represent and produce images containing “minority attributes.”

“In many cases, certain attributes (i.e., minority attributes) occur less frequently in images of the training dataset,” Adobe said in its filing. “As a result, image translation models are biased towards majority attributes and have difficulty in producing the minority attributes in generated images.”

Adobe aims to remedy this problem by oversampling images that have certain minority attributes in an image dataset, so the model encounters them more often during training. Minority attributes can include a wide variety of physical characteristics, Adobe noted, such as certain hair types or colors, baldness, or wearing glasses.
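The article does not say how Adobe decides how often to resample each image. As a rough illustration only, here is a minimal sketch that assumes each image carries a set of attribute labels and weights every image by the inverse frequency of its rarest attribute. The function names `sampling_weights` and `oversample` and the attribute labels are hypothetical, not taken from the filing.

```python
import random
from collections import Counter

# Hypothetical sketch: not Adobe's method. Each image is assumed to be
# described by a set of attribute labels, e.g. {"bald", "glasses"}.

def sampling_weights(attributes: list[set[str]]) -> list[float]:
    """Weight each image by the inverse frequency of its rarest attribute."""
    counts = Counter(a for attrs in attributes for a in attrs)
    # Images with no labeled attributes are weighted like the most common attribute.
    default = 1.0 / max(counts.values()) if counts else 1.0
    return [max((1.0 / counts[a] for a in attrs), default=default) for attrs in attributes]


def oversample(attributes: list[set[str]], k: int, seed: int = 0) -> list[int]:
    """Draw k image indices with replacement, favoring images with rare attributes."""
    rng = random.Random(seed)
    return rng.choices(range(len(attributes)), weights=sampling_weights(attributes), k=k)


dataset = [{"short_hair"}] * 8 + [{"bald"}] * 2
batch = oversample(dataset, k=1000)
```

With eight short-haired images and two bald ones, each bald image gets weight 1/2 and each short-haired image 1/8, so the two groups are drawn roughly equally often. In a real training pipeline, the same per-image weights could be passed to a framework sampler such as PyTorch's `torch.utils.data.WeightedRandomSampler`.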

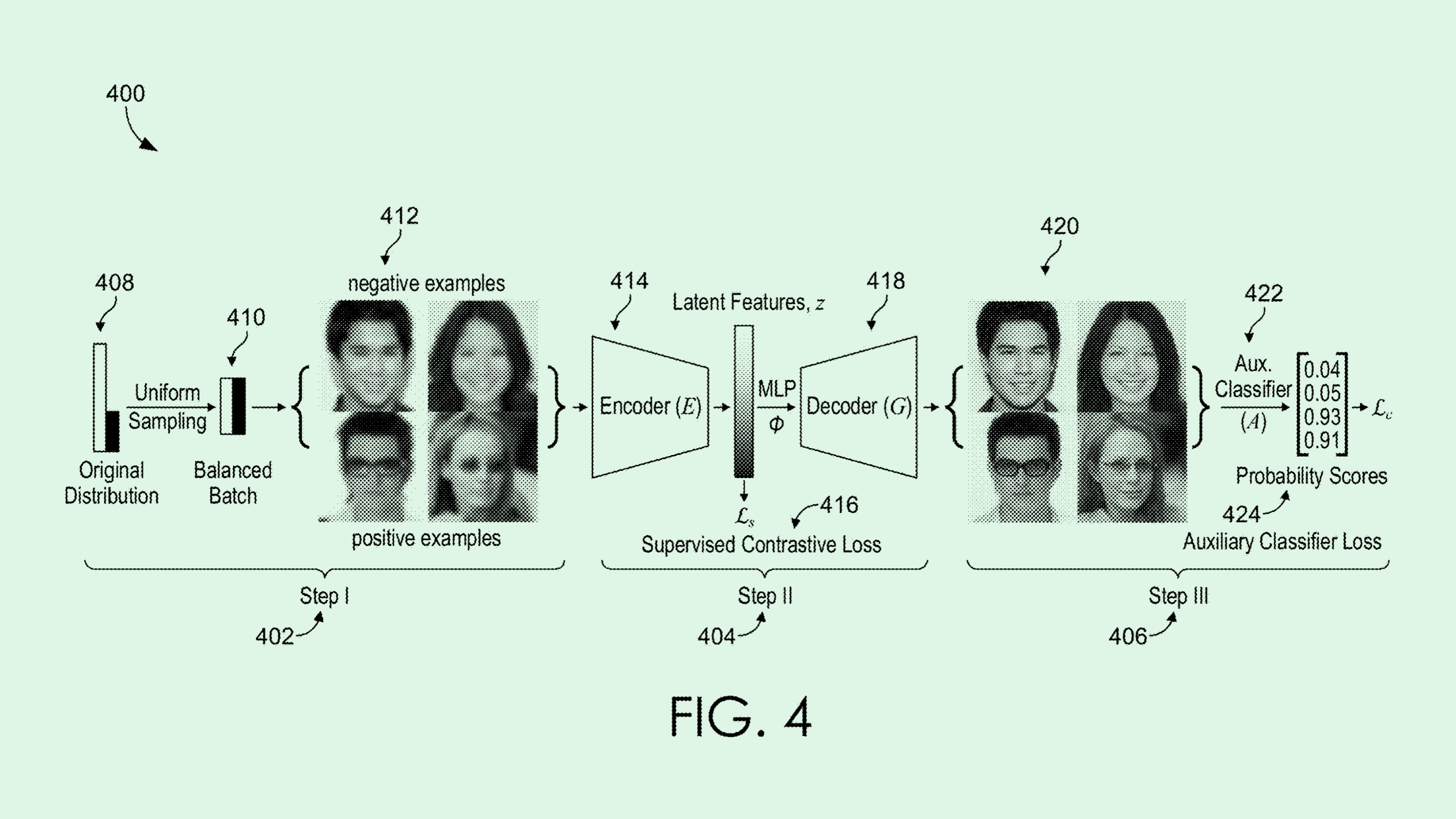

The image translation model is then trained on this dataset using “supervised contrastive loss,” a machine learning technique that teaches the model to build more meaningful representations of the data by pulling together examples that share the same attributes and pushing apart those that differ. It then uses another technique, called “auxiliary classifier loss,” to ensure that the generated images reflect the right attributes.
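The filing's exact loss formulas aren't given here, so the following is only a sketch of the two general ideas, assuming the standard supervised contrastive formulation and a binary cross-entropy attribute classifier. `supcon_loss` scores how well embeddings of images sharing an attribute label cluster together; `aux_classifier_loss` penalizes a (hypothetical) classifier's predictions on a generated image when they disagree with the attributes it should have. Both names are illustrative.

```python
import math

# Hypothetical sketch of generic techniques, not Adobe's implementation.

def _normalize(v: list[float]) -> list[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def supcon_loss(embeddings: list[list[float]], labels: list[str], temperature: float = 0.1) -> float:
    """Supervised contrastive loss, averaged over anchors with at least one positive."""
    n = len(embeddings)
    if n < 2:
        return 0.0
    z = [_normalize(e) for e in embeddings]
    total, anchors = 0.0, 0
    for i in range(n):
        # Cosine similarity of anchor i to every other sample, scaled by temperature.
        sims = {a: sum(p * q for p, q in zip(z[i], z[a])) / temperature for a in range(n) if a != i}
        # Numerically stable log of the softmax denominator over all non-anchor samples.
        m = max(sims.values())
        log_denom = m + math.log(sum(math.exp(s - m) for s in sims.values()))
        # Positives are the other samples that share the anchor's attribute label.
        positives = [a for a in sims if labels[a] == labels[i]]
        if not positives:
            continue
        total += -sum(sims[p] - log_denom for p in positives) / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0


def aux_classifier_loss(pred_probs: list[float], target_attrs: list[int], eps: float = 1e-7) -> float:
    """Mean binary cross-entropy between predicted attribute probabilities and 0/1 targets."""
    total = 0.0
    for p, t in zip(pred_probs, target_attrs):
        p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(pred_probs)
```

The contrastive term is small when same-attribute embeddings sit close together, and the classifier term is small when the generated image is predicted to carry its target attributes, which mirrors the division of labor the article describes.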

The outcome is a generative AI image editing tool that properly preserves less common physical attributes when they appear in the input image.

As AI adoption continues to climb, the issue of bias in models has become a hot topic. Without proper care and attention, biases can replicate and grow stronger with use, leading to inaccurate outputs that reflect those biases.

Other tech firms have tried to address these biases in their own ways. For example, Intel has sought to patent a system that recognizes “social biases” in the visual datasets that train AI models, and Sony filed an application for tech that audits a dataset for “human-centric” visual diversity.

Adobe’s method aims to fight bias by taking into account that no dataset is perfect, said Brian Green, director of technology ethics at the Markkula Center for Applied Ethics at Santa Clara University. It’s incredibly difficult to build an entirely bias-free dataset, he said, so figuring out where those gaps may occur can help balance it out.

Patents like these add to Adobe’s expanding slate of AI initiatives as it tries to carve out its own niche in the growing market. Over the past year, the company has launched enterprise generative AI tools through Sensei, and debuted its AI image generator with Firefly, which aims to compete with the likes of DALL-E and Midjourney by being safe for commercial use.

Its patent activity is full of AI developments, including several that aim to tackle issues such as misinformation and “diversity auditing.” Its releases signal that, rather than hastily chasing a broad spectrum of AI-related offerings, it wants to get AI right. “I think it’s a credit to their corporate culture that they’re really thinking about these things,” said Green.

As AI image generators have grown in popularity, they have been shown to replicate biases. And while this patent could help stamp those out, one factor that may need to be considered is the developers’ own bias in choosing which attributes to oversample, said Green. What one person sees as a “minority attribute” may not reflect reality, he said. “Exactly what constitutes a trait that needs to be oversampled in order to adjust how the dataset is tuned?”

While oversampling this way has the potential to be a sound solution for bias, it also reveals a defining feature of AI models as a whole: AI models do not reflect the way that humans think, said Green.

“It really reflects that this is a statistical system. It’s not a system that actually understands things. It just draws correlations,” said Green. “It highlights that AI really isn’t functioning in a way that some people might be thinking that it does.”