IBM’s Patent Cuts Bias in AI Lending

IBM wants to make sure AI lenders are playing fair. Its patent application describes a way to weed out bias in financial machine learning models.


IBM wants to level the lending playing field.

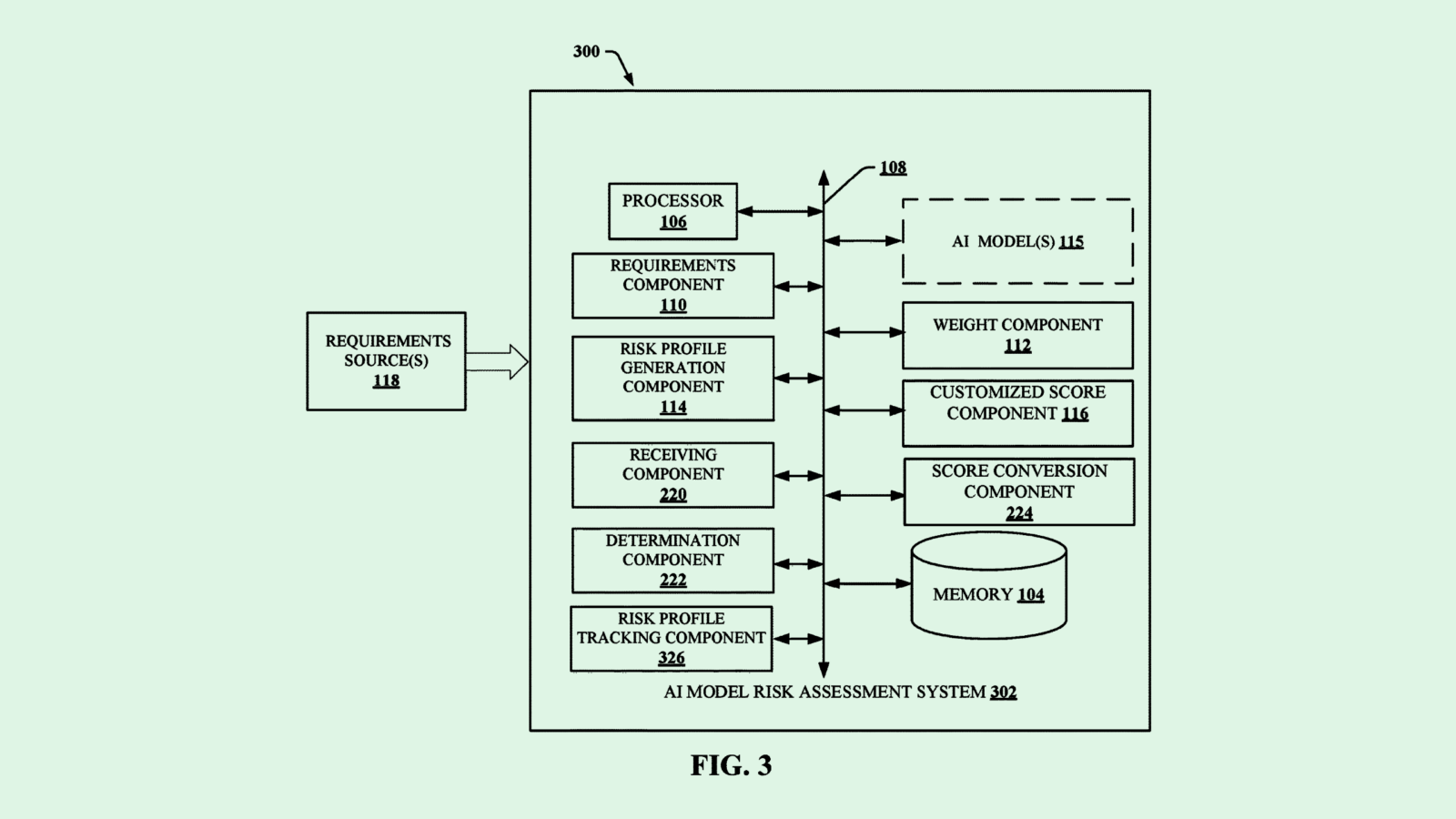

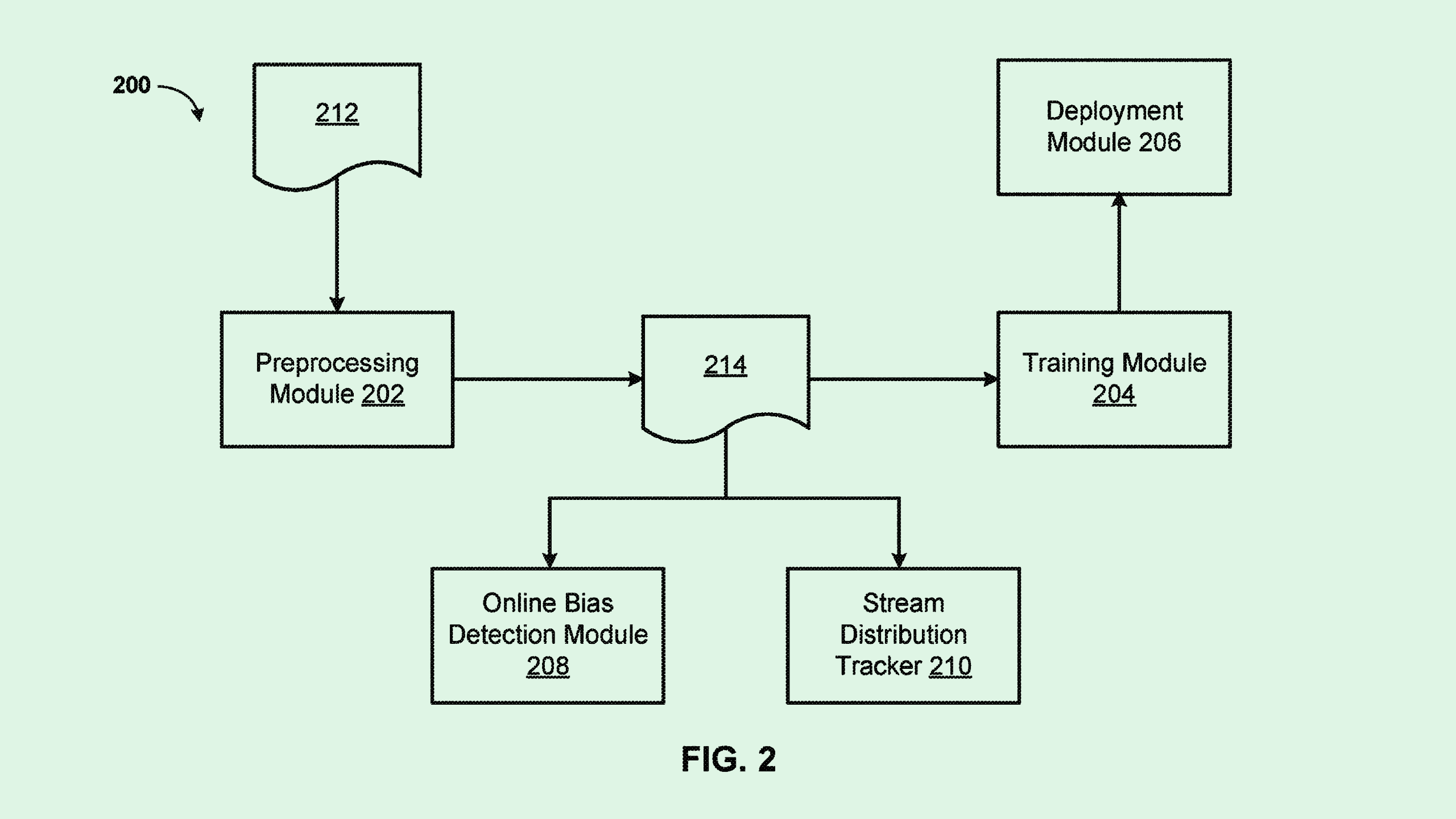

The tech firm filed a patent application for “online fairness monitoring in a dynamic environment.” The filing details a system to ensure that AI models in charge of making financial decisions are doing so without bias.

“AI models have been increasingly used to make sensitive decisions like money lending or visa approvals,” IBM noted. “Accordingly, there has been increased focus on ensuring that the deployed models are making fair decisions.”

IBM’s system aims to determine whether a trained machine learning model has developed bias by continuously monitoring how it treats certain “protected attributes.” IBM didn’t specify how it defines protected attributes, but in the context of lending, the term could refer to factors like age, gender or race.

To determine whether bias has developed, the system tests the reward probability for groups sharing a given attribute, meaning the likelihood that the model’s decision is a positive outcome (such as approval for a loan or credit card). If the probability of a positive outcome falls below a certain threshold, the system flags the model as having developed bias against that protected attribute.
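To make the check concrete, here is a minimal sketch, assuming the model’s decisions are logged as `(group, approved)` pairs. IBM’s filing doesn’t publish code; the function names, the group labels “A” and “B”, the toy data and the fixed threshold of 0.5 are all hypothetical illustrations. The reward probability for each group is estimated as the share of its decisions that were approvals, and any group below the threshold is flagged.

```python
from collections import defaultdict


def reward_probabilities(decisions):
    """Estimate P(positive outcome) per group from (group, approved) pairs."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += int(approved)
    return {g: positives[g] / totals[g] for g in totals}


def flag_biased_groups(decisions, threshold):
    """Return (sorted) groups whose estimated reward probability is below threshold."""
    probs = reward_probabilities(decisions)
    return sorted(g for g, p in probs.items() if p < threshold)


# Hypothetical toy log: group A approved 3 of 4 times, group B 1 of 4 times.
decisions = [("A", True), ("A", True), ("A", False), ("A", True),
             ("B", False), ("B", True), ("B", False), ("B", False)]
print(reward_probabilities(decisions))  # {'A': 0.75, 'B': 0.25}
print(flag_biased_groups(decisions, threshold=0.5))  # ['B']
```

A real system would also need enough decisions per group for these estimates to be meaningful; the toy log above is far too small for that.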

That reward probability threshold is determined by a “distribution of credit scores associated with one or more groups of the borrowers having a common attribute,” IBM noted. After detecting bias, the system corrects it by updating the distribution of credit scores to make it fairer.
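The filing doesn’t spell out how a credit score distribution becomes a threshold, so the following is only one hypothetical way it could work. It assumes a score cutoff (680 here) and a tolerance (0.1), both invented for illustration. The group’s credit scores predict an expected approval rate (the share of borrowers at or above the cutoff), and the threshold lets the model’s observed approval rate fall at most `tolerance` below that expectation.

```python
from statistics import mean


def expected_approval_rate(credit_scores, cutoff):
    """Share of a group's borrowers whose credit score meets the cutoff."""
    return mean(1.0 if s >= cutoff else 0.0 for s in credit_scores)


def reward_threshold(credit_scores, cutoff, tolerance=0.1):
    """Hypothetical rule: the observed approval rate may fall at most
    `tolerance` below what the group's credit scores alone would predict."""
    return max(0.0, expected_approval_rate(credit_scores, cutoff) - tolerance)


# Hypothetical scores for one group: 4 of 6 borrowers meet the 680 cutoff.
group_b_scores = [580, 640, 690, 710, 720, 750]
threshold = reward_threshold(group_b_scores, cutoff=680)
observed_rate = 0.25  # e.g. the model approved 1 in 4 applicants from this group

print(round(threshold, 2))        # 0.57
print(observed_rate < threshold)  # True, so the monitor would flag possible bias
```

Tying the threshold to each group’s own score distribution means a group is flagged only when the model approves it noticeably less often than its credit profile would justify, rather than for having a lower approval rate on its own.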

IBM’s system continuously updates this distribution and reruns the bias check to catch models that have become biased over time because they haven’t evolved with new data. This monitoring addresses situations in which a machine learning model stays fixed while the data keeps changing, so that “the model may become biased towards a particular group.”
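The “online” part can be illustrated with a sliding window: a minimal sketch, assuming the monitor keeps only each group’s most recent decisions and re-runs the threshold check after every new one. The class name, the window size, the `min_samples` guard and the fixed threshold are hypothetical choices, not details from IBM’s filing. The toy stream shows a model that starts out approving a group and then drifts toward rejecting it.

```python
from collections import deque


class OnlineFairnessMonitor:
    """Hypothetical sliding-window monitor: keeps the most recent `window`
    decisions per group and re-checks reward probabilities after each one."""

    def __init__(self, window, threshold, min_samples=1):
        self.window = window
        self.threshold = threshold
        self.min_samples = min_samples  # don't flag groups with too little data
        self.history = {}  # group -> deque of recent outcomes (1 = approved)

    def record(self, group, approved):
        """Add one decision and return the groups currently flagged."""
        buf = self.history.setdefault(group, deque(maxlen=self.window))
        buf.append(1 if approved else 0)  # oldest outcome drops off when full
        return self.flagged()

    def rate(self, group):
        """Reward probability over the group's current window."""
        buf = self.history[group]
        return sum(buf) / len(buf)

    def flagged(self):
        return sorted(
            g for g, buf in self.history.items()
            if len(buf) >= self.min_samples and self.rate(g) < self.threshold
        )


monitor = OnlineFairnessMonitor(window=4, threshold=0.5, min_samples=4)
# Hypothetical drift: four approvals for group B, then three rejections.
stream = [("B", True)] * 4 + [("B", False)] * 3
alerts_over_time = [monitor.record(g, a) for g, a in stream]

print(monitor.rate("B"))     # 0.25
print(alerts_over_time[3])   # []
print(alerts_over_time[-1])  # ['B']
```

Because old decisions fall out of the window, a model that was fair when it was deployed can still be flagged later once its recent treatment of a group deteriorates, which is the fixed-model, changing-data scenario described above.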

Though IBM’s system targets finance specifically, it isn’t the first time we’ve seen tech firms tackle the issue of bias in their patent activity. Adobe has sought to patent a way to debias image translation models, and Sony and Intel have both sought to patent different ways to rid image datasets of social biases.

Biases in AI data can embed themselves in models during training and, if left unchecked, can reproduce themselves and grow stronger over time. IBM’s system targets this major potential issue with AI-based lending practices.

Though this bias may be unintentional, the financial consequences of this kind of discrimination can be extremely harmful. And as financial institutions continue to weave AI into their practices, the potential for bias only grows.

Regulators began to take notice of this issue last year as AI entered the public eye. The Consumer Financial Protection Bureau clarified in April that anti-discrimination laws apply to financial decisions made by AI tools. And in June, several government agencies proposed quality control standards for automated valuation models, which estimate real estate values used in mortgage decisions.

Federal Reserve Vice Chair for Supervision Michael Barr noted at a conference in July that “while these technologies have enormous potential, they also carry risks of violating fair lending laws and perpetuating the very disparities that they have the potential to address.”