Intel Goes After Responsible AI with Ethics Index Patent

Intel’s ethics index aims to call out when people are using AI for the wrong reasons.

Intel wants to make sure its AI models are being used the way they’re meant to be used.

The company is seeking to patent a “misuse index” for explainable AI. As the title of the patent suggests, Intel’s invention seeks to track discrepancies between how an AI model is used and how it was trained.

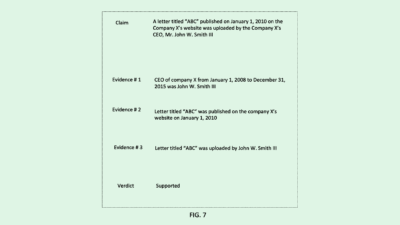

Intel’s tech aims to prevent legally and ethically inappropriate uses of AI by comparing the “inference data,” or the data input by a user that a model takes in to make its predictions, to the model’s training data. The system uses a “trust mapping engine” that’s given policy thresholds as guidelines for mapping training data to inference data.

Since these discrepancies can range from low to high risk, the engine then classifies the data into one of several categories, including minor misuses, major misuses, errors, exceptions or exemptions. The system may also take in surveys and input from the model’s developers about certain policies, procedures and exceptions.
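To make the idea concrete, here is a minimal Python sketch of threshold-based classification. It is not Intel’s implementation: the filing as described here does not give formulas or threshold values, so the tag-based discrepancy score, the `PolicyThresholds` values, and the `exempt_tags` parameter are all assumptions made for illustration, and only some of the categories named above are covered.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class PolicyThresholds:
    # Hypothetical cutoffs; the patent coverage gives no concrete numbers.
    minor: float = 0.2  # discrepancy above this counts as a minor misuse
    major: float = 0.6  # discrepancy above this counts as a major misuse


def discrepancy(training_tags: set[str], inference_tags: set[str]) -> float:
    """Fraction of inference-time usage tags not covered by the training data."""
    if not inference_tags:
        return 0.0
    uncovered = inference_tags - training_tags
    return len(uncovered) / len(inference_tags)


def classify(
    training_tags: set[str],
    inference_tags: set[str],
    thresholds: PolicyThresholds = PolicyThresholds(),
    exempt_tags: frozenset[str] = frozenset(),
) -> str:
    """Map one inference request to a (simplified) misuse category."""
    # Uses the developers have explicitly exempted are dropped before scoring.
    remaining = set(inference_tags) - set(exempt_tags)
    if inference_tags and not remaining:
        return "exception"
    score = discrepancy(set(training_tags), remaining)
    if score > thresholds.major:
        return "major misuse"
    if score > thresholds.minor:
        return "minor misuse"
    return "no misuse"


if __name__ == "__main__":
    trained_for = {"pilot_id"}
    print(classify(trained_for, {"pilot_id"}))
    print(classify(trained_for, {"pilot_id", "passenger_id"}))
    print(classify(trained_for, {"passenger_id"}))
```

In this toy version, the developer input the article mentions shows up only as the exemption list and the two thresholds; a real system would presumably draw on far richer policy data.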

Data that the system decides is related to misuses, whether major or minor, is then sent through the system’s “misuse index estimation logic,” which estimates how severe the case is. For example, in the context of facial recognition, using the tech to identify every passenger who comes through an airport may be viewed as a major misuse, whereas using it to identify pilots to match them with pilot licenses on record may be viewed as an exception.
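One simple way to picture a severity estimate is a weighted score over the classified events. The Python sketch below is an assumption-laden illustration, not the filing’s “misuse index estimation logic”: the `SEVERITY_WEIGHTS` values, the label strings, and the normalization to a 0-to-1 range are all hypothetical.

```python
from __future__ import annotations

# Hypothetical severity weights; the patent coverage does not specify any.
SEVERITY_WEIGHTS = {"minor misuse": 1.0, "major misuse": 5.0}


def misuse_index(labels: list[str]) -> float:
    """Return a score in [0, 1].

    0 means no misuse was observed; 1 means every event was a major misuse.
    Labels without a weight (for example "exception") contribute nothing.
    """
    if not labels:
        return 0.0
    max_weight = max(SEVERITY_WEIGHTS.values())
    total = sum(SEVERITY_WEIGHTS.get(label, 0.0) for label in labels)
    return total / (max_weight * len(labels))


if __name__ == "__main__":
    # Airport example from the article: scanning passengers vs. checking pilots.
    events = ["major misuse", "major misuse", "exception"]
    print(round(misuse_index(events), 2))
```

Under these assumed weights, a deployment that mostly scans passengers would score near 1, while one that only verifies pilots against license records would score 0.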

Intel said in its filing that with the growth of AI comes the “greater-than-ever potential for misuse” of it, which leads to a bad public perception and negative connotation associated with the tech. “For example, there have been concerns regarding certain face recognition techniques, such as legal and ethical apprehensions relating to inappropriate use and generalization of face recognition models,” the company said.

This isn’t the first time we’ve seen an altruistic patent from Intel that attempts to soothe the ills caused by AI. The company has sought patents for tech to fight bias that occurs when training AI, as well as tech to prevent the power drain that model training can cause.

“The motivation behind the patent sounds like a good one, they want to make sure that AI is being used for the purpose for which it is designed,” said Brian P. Green, director of technology ethics at the Markkula Center for Applied Ethics at Santa Clara University.

Ethical and responsible AI are hot topics right now, both inside and outside tech. The Biden administration released a sweeping executive order at the end of October 2023 addressing AI’s impact on equity, consumer privacy, and security. However, the feeling of urgency to get a foot in the door has caused some tech firms to move fast without thinking first about ethical implications.

And while Big Tech firms in particular have a responsibility to consider ethics, some have cut AI ethics staff in recent months, including Microsoft and X, formerly Twitter. But the fact that a few major tech companies like Salesforce, IBM, and Intel are trying to raise the ethics bar for the industry “could be overall beneficial,” said Green.

“Everybody’s reputations are linked to each other to a certain extent,” said Green. “One bad actor within the industry tarnishes the reputation of everyone, and that’s something that some organizations understand better than others.”

From a business perspective, Intel lags behind its competitors in the AI space, namely Nvidia. Nvidia not only beats Intel in chip market share, but its software ecosystem also dominates among developers. Adding this and other responsible AI tools could help Intel differentiate itself.