Nvidia May Add to Chatbot Capabilities With Latest Patent

Nvidia continues to patent tech to expand its AI ecosystem as other tech firms play catch-up.

Nvidia wants to make chatbots that can handle the hard questions.

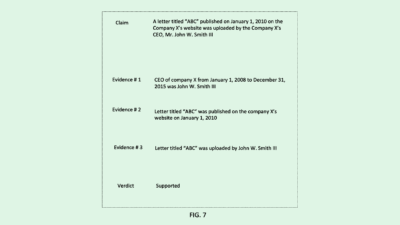

The company filed a patent application for pre-training language models using “natural language expressions” extracted from structured databases. Pre-training gives a language model a base of knowledge; the model is then fine-tuned for a particular task or domain.

While those models do well at their specific tasks, they often perform poorly when asked to do something outside of that domain, “thereby limiting the use of these models for new applications that do not have the necessary training data,” the company said. Nvidia’s system improves the effectiveness of language models by training them to handle queries outside of their lane.

Nvidia’s tech offers better pre-training of language models by preserving the “hierarchical relationships” in the training data when it’s converted from that structured database into plain text for training. Preserving those relationships allows the model to understand the context and nuance of how certain data elements are related to one another.
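To make the idea concrete, here is a minimal Python sketch of one way a nested record could be turned into plain-text sentences without losing track of which element belongs to which parent. This is not Nvidia's method as described in the filing; the `verbalize` function, the sentence template and the sample store record are all hypothetical, chosen only for illustration.

```python
from typing import Any, Iterator, Tuple


def verbalize(node: Any, path: Tuple[str, ...] = ()) -> Iterator[str]:
    """Yield one sentence per leaf value, naming every ancestor of that value."""
    if isinstance(node, dict):
        # Descend into each child, extending the path with the child's key.
        for key, child in node.items():
            yield from verbalize(child, path + (str(key),))
    elif isinstance(node, list):
        # List items share the path of the list that holds them.
        for child in node:
            yield from verbalize(child, path)
    elif not path:
        # A bare value with no keys above it has no hierarchy to describe.
        yield str(node)
    else:
        # The last key names the attribute; earlier keys are its ancestors.
        *ancestors, attribute = path
        if ancestors:
            owner = " in the ".join(reversed(ancestors))
            yield f"The {attribute} of the {owner} is {node}."
        else:
            yield f"The {attribute} is {node}."


# Hypothetical structured record (assumption: not taken from the patent).
record = {
    "store": {
        "name": "Main Street",
        "department": {
            "name": "Produce",
            "item": {"name": "apple", "price": "0.50 USD"},
        },
    }
}

for sentence in verbalize(record):
    print(sentence)
```

Each sentence carries its full chain of parents (item, department, store), so text produced this way still says where a value sits in the original structure, rather than listing "apple" and "0.50 USD" as unrelated facts.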

To put it simply, this system gives language models more context to answer queries, without requiring a lot more training data. These models, Nvidia said, can process information for “one or more different domains and then provide responses to various input queries.”

“As a result, improvements to search are enabled that facilitate identification of novel information from a corpus of data when compared to traditional semantic searching techniques,” the company said.

Nvidia has stood front and center on the AI stage, gaining particular attention over the past year. Its data center revenue (which includes its AI work) in the third quarter reached $14.51 billion, up 279% year over year. The company dominates the AI chip space, holding an estimated 80% of the AI chip market and 87% of the discrete GPU market as a whole.

But AMD may be coming for Nvidia’s crown. Meta, OpenAI and Microsoft said at AMD’s investor event on Wednesday that they’d use the company’s newest AI chip, the Instinct MI300X. While Nvidia’s chips run around $40,000 each, AMD CEO Lisa Su said AMD’s chip would have to undercut that price to convince customers to make the switch. The announcement signals that companies may be moving away from Nvidia’s expensive GPUs to lower the cost of AI development.

That said, Nvidia has command over more than just AI hardware. Its development kit has become a premium choice for developers, as it’s easy to use and offers everything within one ecosystem.

Its patent activity shows that it’s interested in growing its software work, with applications out for neural network synthetic data, power-efficient automated model development, conversational AI tools and more.

Beefing up its language model offerings with this patent makes particular sense: Since the launch of OpenAI’s ChatGPT last year (which was trained using Nvidia’s chips), chatbots have dominated the conversation about AI in general. And every big tech firm from Amazon to Google to Meta has thrown its hat into the ring over the past year. Plus, the market is only poised to grow bigger, from an estimated $5.4 billion this year to $15.5 billion by 2028.