PayPal “Fairness-Aware” Model Patent Highlights Bias Issues in Financial AI

The filing highlights the importance of eliminating bias in AI-powered financial platforms.


As the tech industry charges full-steam ahead on AI, PayPal may be looking at ways to do it right.

The company seeks to patent a system for adjusting training data sets for “fairness-aware artificial intelligence models.” The system aims to ensure that PayPal’s AI models, particularly those used in transaction decision-making, are not trained on biased data.

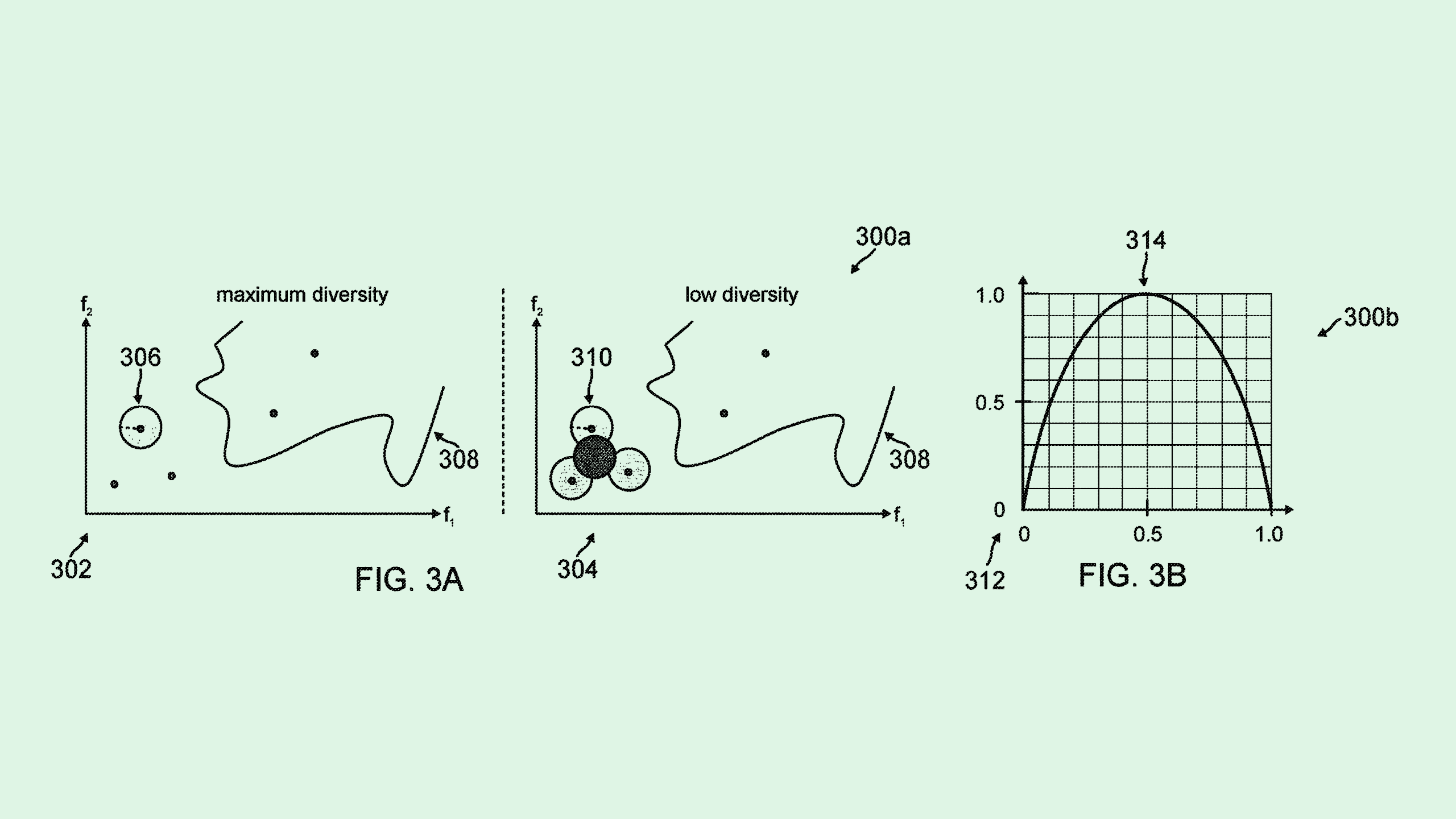

PayPal’s system picks a sample of data from a larger training data set to create a control group of training data that “acts as a baseline for AI model training.” The sample is built specifically for fairness: each data point receives two scores, a diversity score and a “model attribution” score, the latter measuring how informative a data point is to the model as a whole.

This data set is then used to train or retrain models to “adjust, minimize, and/or eliminate bias in the training data set.” These models “provide better decision-making and predictive outputs by weighing many diverse and distributed data points, for example, by preventing mistakenly allocating outputs when overly training on the same or similar data,” PayPal said in the filing.
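The filing, as described here, does not spell out how the two scores are calculated or combined. The sketch below is a hypothetical illustration of the general idea, not PayPal’s method. It assumes the diversity score is a point’s distance to the nearest already-selected point (so near-duplicates score low), treats the model attribution score as a caller-supplied number between 0 and 1, and blends the two with an assumed weight `alpha`. The function name `select_control_set` is invented for this example.

```python
import math
from typing import List, Sequence


def select_control_set(
    features: List[Sequence[float]],
    attribution: List[float],
    k: int,
    alpha: float = 0.5,
) -> List[int]:
    """Greedily pick k indices for a control set (hypothetical sketch).

    Assumed scoring, not PayPal's formula:
      diversity   = distance to the nearest already-selected point,
                    scaled to [0, 1]
      attribution = caller-supplied informativeness score in [0, 1]
      combined    = alpha * diversity + (1 - alpha) * attribution
    """
    if not 0 < k <= len(features):
        raise ValueError("k must be between 1 and the number of data points")

    # Largest pairwise distance, used to scale diversity into [0, 1].
    # Falls back to 1.0 when every point is identical.
    max_dist = max(
        (math.dist(a, b) for a in features for b in features), default=0.0
    ) or 1.0

    # Seed the control set with the single most informative point.
    selected = [max(range(len(features)), key=lambda i: attribution[i])]

    while len(selected) < k:
        best_idx, best_score = -1, -math.inf
        for i in range(len(features)):
            if i in selected:
                continue
            # Points close to something already chosen get a low diversity score.
            diversity = min(math.dist(features[i], features[j]) for j in selected) / max_dist
            score = alpha * diversity + (1 - alpha) * attribution[i]
            if score > best_score:
                best_idx, best_score = i, score
        selected.append(best_idx)
    return selected
```

For example, with `features = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [0.0, 0.1]]` and `attribution = [0.9, 0.8, 0.3, 0.7]`, calling `select_control_set(features, attribution, k=2)` returns `[0, 2]`: the near-duplicate at index 1 is passed over for the distant point at index 2, even though its attribution score is higher. Setting `alpha=0.0` ignores diversity entirely and returns `[0, 1]`, which shows the kind of over-sampling of “the same or similar data” the filing says it wants to avoid.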

PayPal noted that this sampling and scoring method may be generalized and plugged into different models, use cases, or training algorithms, meaning it could be repurposed to address more than just bias.

This isn’t PayPal’s first rendezvous with AI. The company has filed several patents that could implement AI throughout its fraud-detection practices, as well as patents to track user behavior with neural networks. The company also announced plans earlier this year to roll out an AI platform that allows merchants to reach customers more easily.

But the “move fast and break things” ethos of Silicon Valley has extended to AI, producing a landscape of tech firms that are extremely data-hungry as they continue to build massive foundation models. And the growth-at-all-costs mentality has led to ethical issues, said Brian Green, director of technology ethics at the Markkula Center for Applied Ethics at Santa Clara University.

“Most people take the approach of, ‘we can’t do ethics if we don’t have the AI in the first place,’ so they very much concentrate on AI,” said Green. “But sometimes (ethics) comes as an afterthought, which is always a risky approach to it.”

One of those issues is bias – or, as this patent calls it, “fairness.” AI models often exhibit and amplify biases that aren’t caught in training. This can have particularly dire consequences when those AI models are used in financial decision-making. And regulators like the Consumer Financial Protection Bureau and the Federal Reserve have taken notice.

“Historically the financial industry has had serious issues with bias and lending,” Green said. AI models that replicate those biases can cause significant harm on a wide scale.

Many companies are trying to determine how to squash bias in their own AI tools, tackling the issue in areas such as image models and lending. As with PayPal’s patent, the solution often lies in fixing the training data itself, since a model is only as good as the data it’s fed. “It’s good to see companies filing these sorts of patents that show they’re doing the work,” said Green. “It sends an important signal that they find it to be worthwhile work.”