JPMorgan Chase Patent Highlights Risk of AI Bias in Banking

A growing footprint in AI increases risk — especially when it comes to lending and risk analysis.


JPMorgan Chase may want to track when its algorithms are making questionable decisions.

The financial institution filed a patent application for “algorithmic bias evaluation of risk assessment models.” JPMorgan’s tech tracks when risk assessment models — used to determine the risks associated with lending and investment — are acting unethically or exhibiting bias.

“There has been a growing focus on model bias, including fairness in the provision of financial products and services beyond credit and, although the focus on model bias has been increasing in recent years, this trend has accelerated over the past year,” the bank said in its filing.

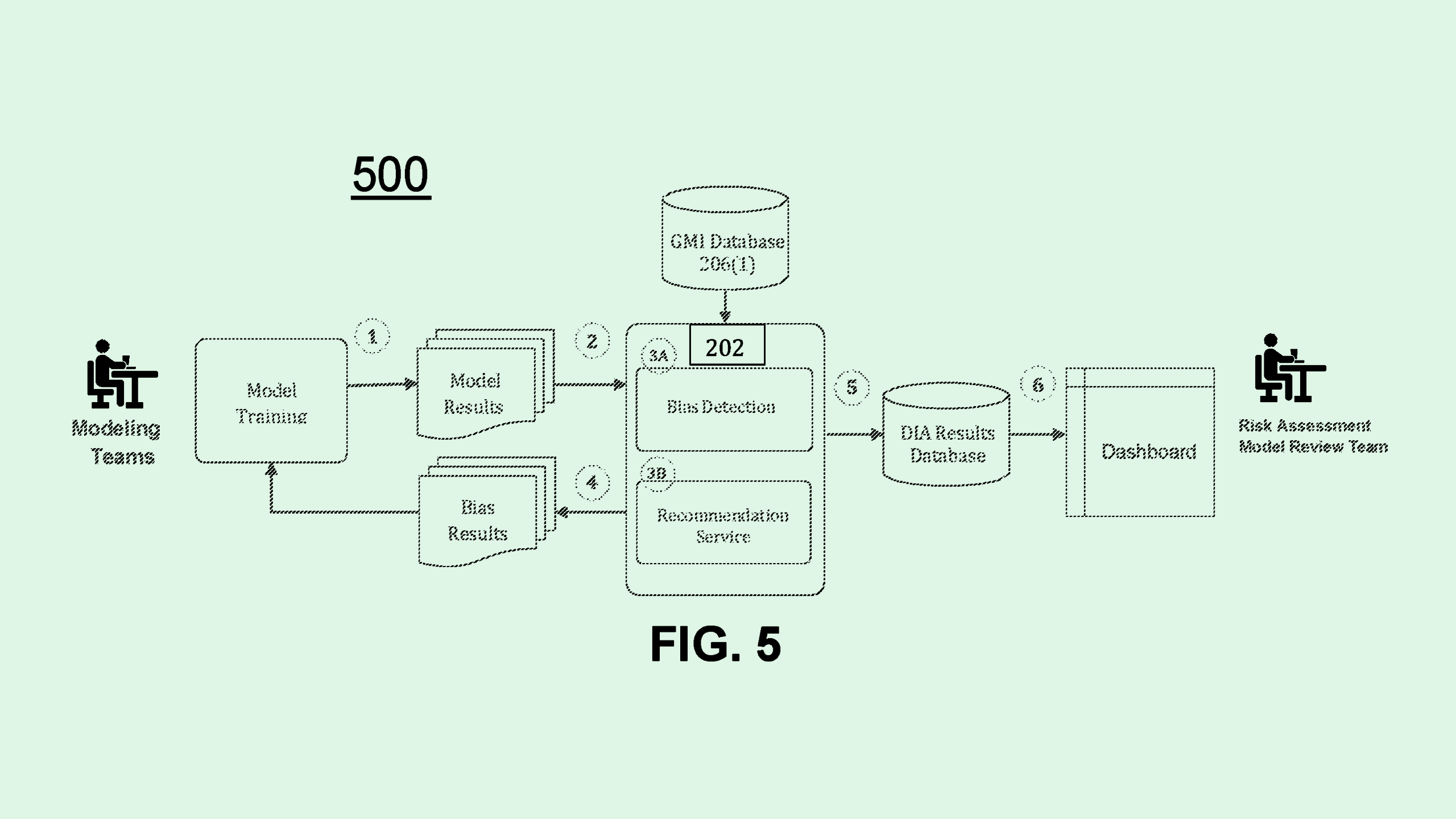

First, the risk assessment model is run to generate its predictions and assessments. Those results are sent to a “disparate impact analysis service,” which checks the model’s scores for bias and for adherence to ethical standards.

Next, the analysis service queries a “government monitoring information” database, which supplies demographic information such as ethnicity or gender. That data is paired with what the filing calls “ethics and compliance initiative” information, which tracks how a model measures up against ethical or regulatory standards.
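To make that data flow concrete, here is a minimal Python sketch of the join step. It rests on assumptions that do not come from the filing: model outputs are risk scores keyed by a hypothetical applicant ID, an applicant counts as approved when the score is at or below a hypothetical cutoff, and plain dictionaries stand in for the government monitoring information database. The function returns the approval rate for each demographic group.

```python
from collections import defaultdict


def approval_rates_by_group(scores, demographics, attribute, cutoff=0.5):
    """Join model scores to demographic records; return approval rate per group.

    Hypothetical inputs (not from the filing):
      scores:       applicant_id -> risk score (higher means riskier)
      demographics: applicant_id -> dict of demographic fields, standing in
                    for a government-monitoring-information record
      attribute:    demographic field to group by, e.g. "gender"
      cutoff:       assumed score at or below which an applicant is approved
    """
    approved = defaultdict(int)
    total = defaultdict(int)
    for applicant_id, score in scores.items():
        group = demographics.get(applicant_id, {}).get(attribute)
        if group is None:
            # Applicants with no demographic record are skipped, not guessed.
            continue
        total[group] += 1
        if score <= cutoff:
            approved[group] += 1
    return {group: approved[group] / total[group] for group in total}


if __name__ == "__main__":
    scores = {"a1": 0.2, "a2": 0.7, "a3": 0.3, "a4": 0.4, "a5": 0.9}
    demographics = {
        "a1": {"gender": "F"},
        "a2": {"gender": "F"},
        "a3": {"gender": "M"},
        "a4": {"gender": "M"},
    }
    print(approval_rates_by_group(scores, demographics, "gender"))
    # → {'F': 0.5, 'M': 1.0}
```

Applicant `a5` has no demographic record, so it is left out of both groups rather than assigned one.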

Finally, the analysis service compares the model’s scores against the demographic information and ethical standards to determine whether the risk assessment model is operating as intended. JPMorgan noted that the service can scale to models of all sizes.
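The filing, as described here, does not say which fairness metric the service applies. One widely used measure of disparate impact is the adverse impact ratio: each group’s approval rate divided by a reference group’s rate, flagged when it falls below 0.8 (the “four-fifths rule” heuristic). The sketch below assumes that metric and threshold as a stand-in for the filing’s ethics and compliance standards; the function name and inputs are hypothetical.

```python
def flag_disparate_impact(approval_rates, reference_group, threshold=0.8):
    """Return {group: adverse impact ratio} for groups below the threshold.

    Assumptions (not from the filing):
      approval_rates:  group -> approval rate in [0, 1]
      reference_group: group every other group is compared against
      threshold:       0.8 mirrors the "four-fifths rule" heuristic and is
                       only a stand-in for a real compliance standard
    """
    reference_rate = approval_rates[reference_group]
    if reference_rate == 0:
        raise ValueError("reference group has a zero approval rate")
    flagged = {}
    for group, rate in approval_rates.items():
        if group == reference_group:
            continue
        ratio = rate / reference_rate  # adverse impact ratio
        if ratio < threshold:
            flagged[group] = ratio
    return flagged


if __name__ == "__main__":
    rates = {"A": 0.5, "B": 0.25, "C": 0.45}
    print(flag_disparate_impact(rates, reference_group="A"))
    # → {'B': 0.5}
```

Group C’s ratio is 0.9, so only group B is flagged. A ratio below the threshold is a signal for further review rather than proof of bias.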

JPMorgan has been vocal about its interest in AI. The company’s patent history is loaded with IP grabs for AI inventions, including volatility prediction, personal finance planning, and no-code machine learning tools. The company also launched an AI-powered cash-flow management tool, a product called “IndexGPT” for thematic investing, and rolled out an in-house AI assistant called LLM Suite to its more than 60,000 employees.

But a growing footprint in AI increases risk, especially when it comes to lending and risk analysis. Bias has long been an issue in AI-based lending tools, which often discriminate against marginalized groups. In some contexts, the issue has caught the attention of regulators: in June, the Consumer Financial Protection Bureau approved a rule aimed at ensuring the accuracy and accountability of AI used in mortgage lending and home appraisal.

By not tracking bias in AI models, especially customer-facing ones, financial institutions create “economic headwinds” for specific groups, said Brian Green, director of technology ethics at the Markkula Center for Applied Ethics at Santa Clara University. This exposes them to both regulatory clapback and reputational harm.

“Bias means that your machine learning model is messed up — it’s not working correctly,” said Green. “Ultimately, it ends up creating bad business. There are lots of places where automated systems do bad things to people, and it’s very hard to get those things fixed.”

Patents like this one may hint that JPMorgan is seeking to mitigate bias before its models reach customers. However, locking down IP on inventions aimed at fighting bias may prevent other financial institutions from improving their own systems in the same way, Green added.