Adobe AI Patent May Hint at Generative Video Tools

Adobe wants to predict your video edits through your messages.

Adobe wants to be kept in the loop.

The company filed a patent application for “predicting video edits from text-based conversations” using neural networks. As the title of the patent suggests, Adobe’s tech essentially aims to pre-emptively edit videos for users by guessing what needs to be done from their messages.

The goal is to streamline the editing process by reading messages between two or more parties and figuring out whether those messages call for edits to a video. Adobe noted that these messages can be exchanged either within the video editing system or in an external messaging system.

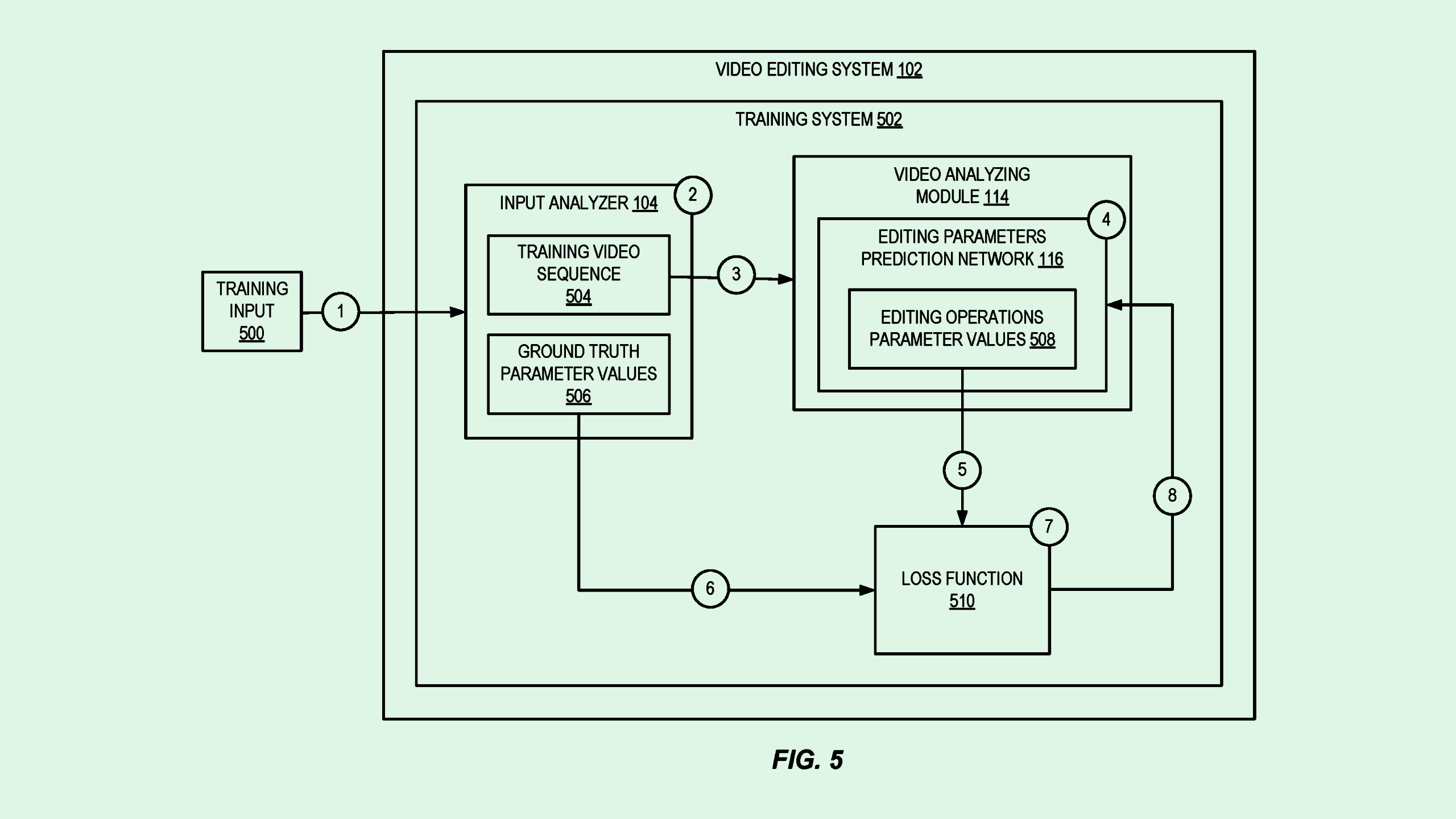

After collecting this data, the system uses neural networks to map content from the messages to different editing operations and to predict the “parameter values” of the editing operations to apply to the video sequence. For example, if one user says in a conversation that the brightness of a shot is too low, Adobe would use that information to make the shot brighter.
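
To make the idea concrete, here is a toy sketch of that message-to-edit mapping. It is not Adobe's method: the filing describes neural networks, while this example swaps in a hand-written lookup table so the flow from message to operation to parameter value is easy to follow. Every name in it (`EditOperation`, `CUES`, `predict_edits`) and every number is a hypothetical chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class EditOperation:
    """A predicted edit: which operation to apply and by how much."""
    name: str     # e.g. "brightness"
    delta: float  # hypothetical signed adjustment (the "parameter value")


# Hypothetical cue table standing in for a learned model.
# Each phrase maps to an operation name and an illustrative adjustment.
CUES = {
    "brightness is too low": ("brightness", 0.2),
    "too dark": ("brightness", 0.2),
    "too bright": ("brightness", -0.2),
    "too quiet": ("volume", 0.2),
    "too loud": ("volume", -0.2),
}


def predict_edit(message: str) -> Optional[EditOperation]:
    """Return the first edit implied by a single message, or None."""
    text = message.lower()
    for cue, (name, delta) in CUES.items():
        if cue in text:
            return EditOperation(name, delta)
    return None


def predict_edits(conversation: List[str]) -> List[EditOperation]:
    """Scan a conversation and collect every edit it implies, in order."""
    edits = []
    for message in conversation:
        edit = predict_edit(message)
        if edit is not None:
            edits.append(edit)
    return edits


conversation = [
    "Hey, did you see the latest cut?",
    "The brightness is too low in the opening shot.",
]
suggested = predict_edits(conversation)
```

A lookup table like this only catches phrases it has been given in advance, which is the limitation the patent's neural approach is apparently meant to get around.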

The company noted that, while existing text-based editing solutions offer the ability to modify image data, they tend to be time- and resource-intensive when applied to video. These systems also tend to require detailed and explicit instructions, and generally don’t pick up context from conversations, “which generally do not contain explicit textual descriptions of edit operations.”

It’s no secret that Adobe has been putting the pedal to the AI metal. The company has sought to claim several inventions as its own, filing applications for data visualization tools and AI summarization, while also taking on issues like hallucination and bias.

The company has been loading its app suite with AI integrations, mostly building on top of Firefly, its generative image model released last March. The company has embedded the model throughout its image-focused programs like Photoshop, Lightroom, Illustrator, and InDesign.

With its AI efforts, the company’s goal is seemingly to democratize design, making it easy for anyone to access with little to no training or experience. “I think generative AI is going to attract a whole new set of people who previously perhaps didn’t invest the time and energy into using the tools to be able to tell that story,” Adobe CEO Shantanu Narayen told The Verge’s Nilay Patel on the Decoder podcast last week.

And soon, the tech may make its way to video: in mid-April, the company previewed several generative AI tools for Premiere Pro, which aim to “streamline workflows and unlock new creative possibilities,” the company said. New capabilities, coming at some point this year, include extending scenes, adding and removing objects, and text-to-video tools. This patent could offer a glimpse at what may be in store.

While the company noted that it’s in “early explorations” of bringing third-party generative models into its platform, it’s also building its own video model using Firefly, potentially competing with OpenAI’s Sora and Google’s recently announced Veo.