Intel’s AI Watermarking Patent Signals Big Tech’s Responsible AI Push

A patent from Intel to verify AI-generated content signals that tech firms may be paying attention to the consequences of their models.


Intel may be researching its own way to discern real from fake.

The company filed a patent application for “immutable watermarking for authenticating and verifying AI-generated output.” Aimed at improving transparency and traceability, Intel’s tech essentially attaches an unchangeable digital signature to outputs generated by AI models.

“The exponential growth of AI has introduced the problem of determining the trustworthiness and consequently the reliability of information and/or data generated from AI sources,” Intel said in the filing. “Inability to distinguish between AI-generated output and human-generated output is leading to security and ethical risks.”

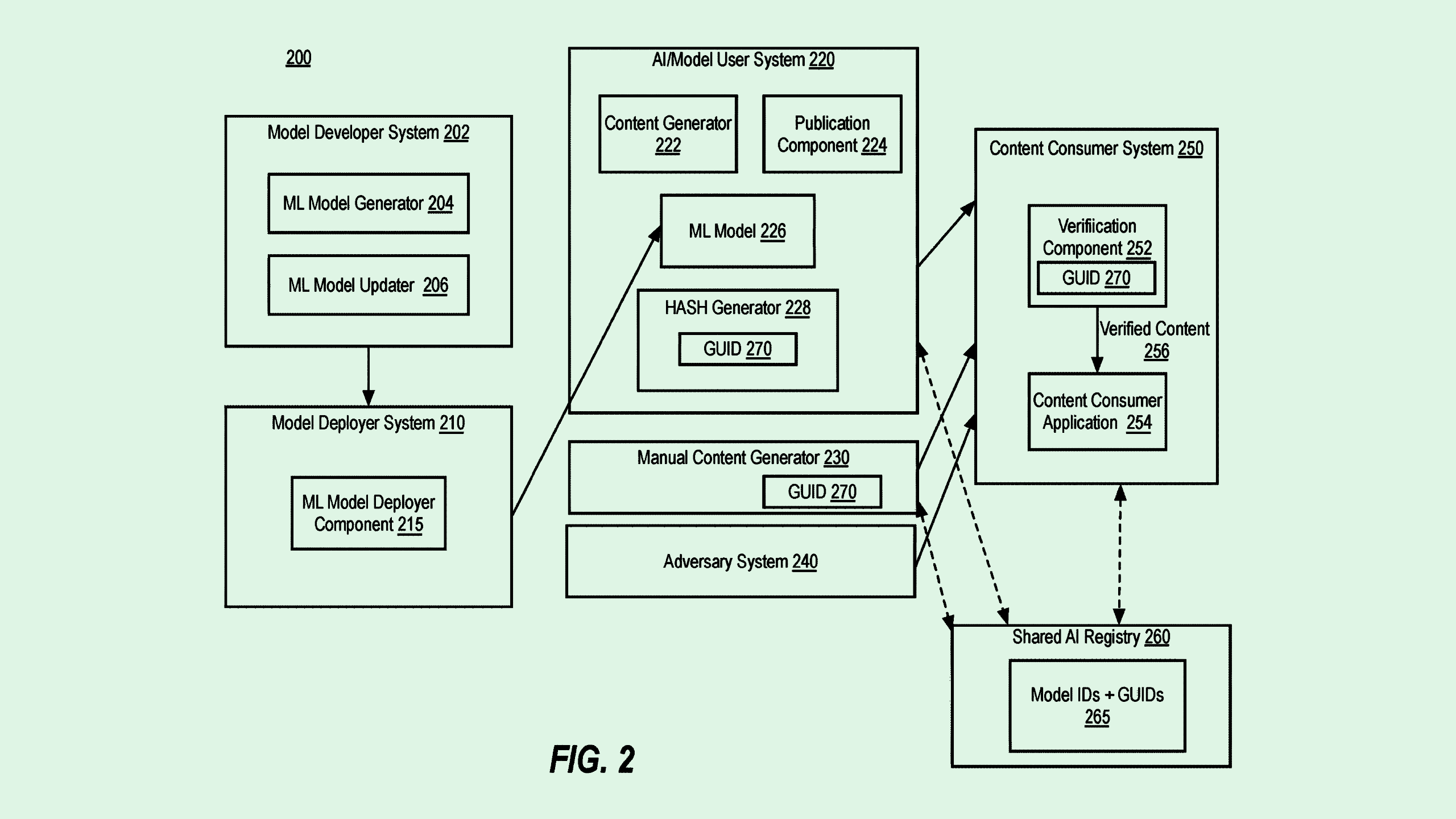

Intel’s system would attach to all content that passes through it a digital signature containing a “globally unique identifier” for the AI model that created it. To tell the two apart, content created by humans may be assigned “generic” identifiers instead.

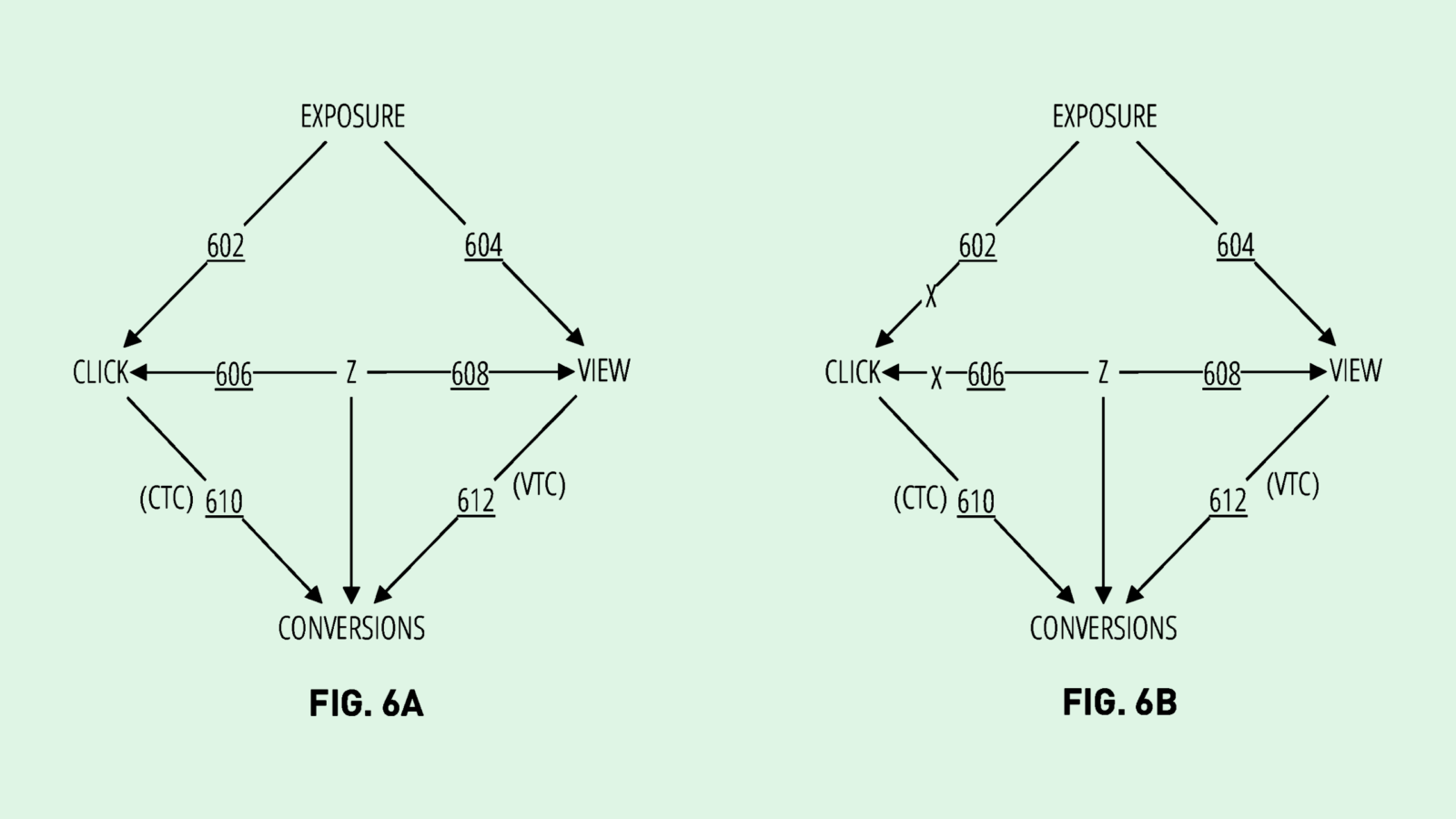

The system then extracts that identifier and verifies it against a trusted “shared AI registry,” which keeps a record of unique identifiers for many different AI models. Beyond determining that the content is AI-generated, Intel’s system can trace it back to the specific model it likely came from.
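The tag-then-verify flow described above can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not Intel’s method: the filing’s actual signature scheme, identifier format, and registry protocol are not described here. HMAC-SHA256 stands in for a real digital signature (a production system would more likely use public-key signatures so verifiers hold no secrets), a random UUID stands in for the “globally unique identifier,” and `SharedAIRegistry`, `Watermark`, `watermark`, `verify`, and `GENERIC_HUMAN_ID` are all hypothetical names. The watermark also travels as a separate record rather than being embedded invisibly in the text, pixels, or audio.

```python
from __future__ import annotations

import hashlib
import hmac
import uuid
from dataclasses import dataclass

# Hypothetical "generic" identifier for human-made content (value is an assumption).
GENERIC_HUMAN_ID = "00000000-0000-0000-0000-000000000000"


@dataclass(frozen=True)
class Watermark:
    model_id: str  # globally unique identifier of the source model
    tag: str       # hex HMAC binding model_id to the exact content bytes


class SharedAIRegistry:
    """Toy stand-in for a "shared AI registry": maps each registered
    model ID to the secret key used to tag that model's output."""

    def __init__(self) -> None:
        self._keys: dict[str, bytes] = {}

    def register(self, key: bytes) -> str:
        model_id = str(uuid.uuid4())  # random UUID as the unique identifier
        self._keys[model_id] = key
        return model_id

    def key_for(self, model_id: str) -> bytes | None:
        return self._keys.get(model_id)


def _tag(key: bytes, model_id: str, content: bytes) -> str:
    # Bind identifier and content together: altering either invalidates the tag.
    message = model_id.encode() + b"\x00" + content
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def watermark(key: bytes, model_id: str, content: bytes) -> Watermark:
    """Tag a model's output with its identifier (hypothetical helper)."""
    return Watermark(model_id, _tag(key, model_id, content))


def verify(registry: SharedAIRegistry, content: bytes, mark: Watermark) -> str:
    """Look the identifier up in the registry and check the tag."""
    if mark.model_id == GENERIC_HUMAN_ID:
        # This toy does not authenticate human-labeled content at all.
        return "human (generic identifier)"
    key = registry.key_for(mark.model_id)
    if key is None:
        return "unverified: unknown model"
    if not hmac.compare_digest(mark.tag, _tag(key, mark.model_id, content)):
        return "unverified: content or tag altered"
    return f"AI-generated by model {mark.model_id}"


if __name__ == "__main__":
    registry = SharedAIRegistry()
    key = b"model-a-secret"
    model_id = registry.register(key)
    text = b"A paragraph produced by model A."
    mark = watermark(key, model_id, text)
    print(verify(registry, text, mark))         # traces the text to model_id
    print(verify(registry, text + b"!", mark))  # prints "unverified: content or tag altered"
```

Because the tag covers both the identifier and the content, swapping in a different model ID or editing even one byte of the output causes verification to fail, which is roughly the “immutable” property the filing describes.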

Intel noted several potential implementations for this technology, including a publishing platform with built-in watermarking to distinguish AI-generated publications from those by human authors and prevent the “publishing and/or consumption of unverified content.” Intel also said that this could work across a variety of media, including text, images, and video.

This isn’t the first time we’ve seen tech firms take an interest in catching AI-generated content: Sony previously filed a patent application for a way to use blockchain to mark deepfake content, and Nvidia sought to patent a way to watermark AI-generated audio.

Google has upgraded its own invisible AI watermarking technology, SynthID, to help detect AI-generated text and video. OpenAI, meanwhile, has had a similar method in the works for a year that is “99.9% effective” and resistant to paraphrasing, according to The Wall Street Journal, but it shelved rollout plans after users said they’d stop using the company’s models.

Despite OpenAI’s hesitance, AI watermarking tools could be integral to identifying the source of realistic-looking but ultimately synthetic content, said Brian Green, director of technology ethics at the Markkula Center for Applied Ethics at Santa Clara University. “There’s been a huge question of how [we are] going to be able to tell real things from fake things in the future,” said Green.

The problem, however, is how quickly generative AI has taken off, said Green. The wide availability of these platforms has given anyone the tools to easily create and spread misinformation. “The solutions didn’t come out fast enough,” said Green.

“This should have been a solution that was built into these generators before they launched,” Green said. “This is playing catch-up with something that they knew was going to be a problem. But at the same time, better late than never.”

The other problem is that watermarking AI-generated content alone may not be enough, said Green. As this patent hints, watermarks may need to be mandated for human-made content, too. “There are several other steps that need to be taken, and some of them may result in surveillance that maybe we don’t actually want to have.”

While Intel hasn’t been one of the AI companies to create widely available generative models itself, its patent history is filled with inventions aimed at creating more ethical and responsible models. As the chip manufacturer struggles against the likes of Nvidia and AMD to stay relevant in AI, offering any tech that focuses on responsible AI could be a means of differentiating itself, and of improving its image.